Julia Gygax

@juliagygax4

ID: 1395645504365776897

21-05-2021 07:41:02

10 Tweets

63 Followers

90 Following

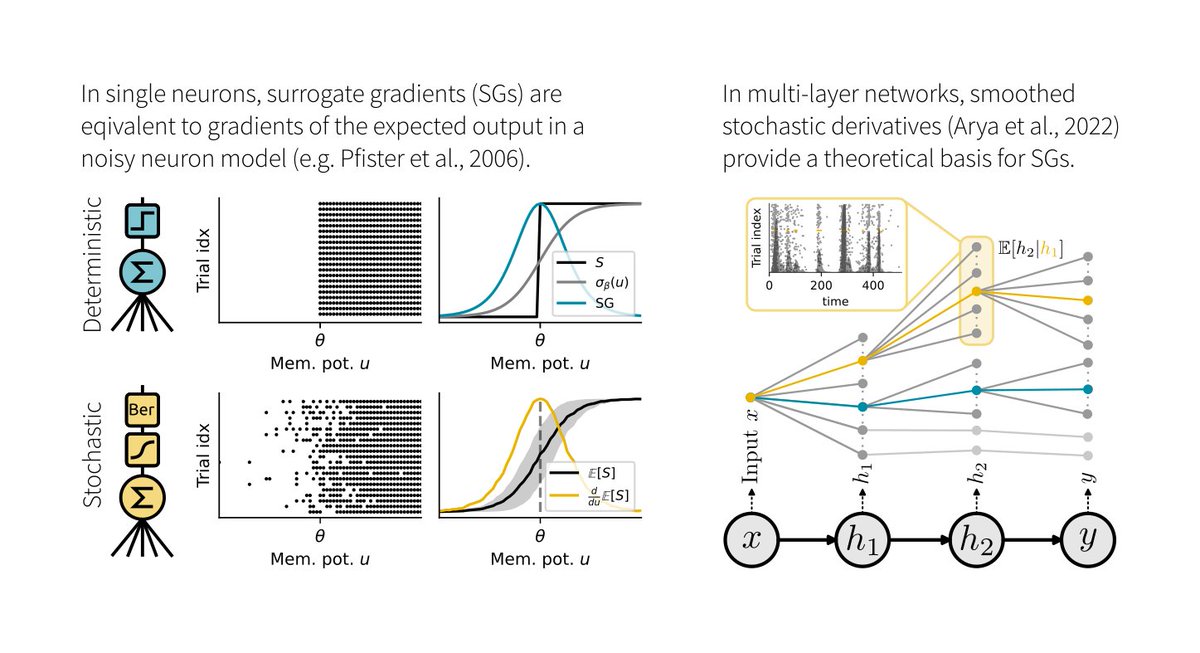

1/4 Surrogate gradients are a great tool for training spiking neural networks in comp neuro and neuromorphic engineering, but what is a good initialization? In our new preprint co-led by Julian Rossbroich and Julia Gygax, we lay out practical strategies: arxiv.org/abs/2206.10226

1/6 We updated “Implicit variance regularization in non-contrastive SSL,” by Manu Halvagal and Axel Laborieux with new results for NeurIPS camera-ready: arxiv.org/abs/2212.04858 neurips.cc/virtual/2023/p…

Very excited to share this new paper, in which we propose a possible role for (dis-)inhibitory microcircuits in coordinating plasticity. If you want to discuss, come find me at the NeurIPS Conference or drop us a message.

1/6 Surrogate gradients (SGs) are empirically successful at training spiking neural networks (SNNs). But why do they work so well, and what is their theoretical basis? In our new preprint led by Julia Gygax, we provide the answers: arxiv.org/abs/2404.14964

We're hiring! Come build models of how the brain learns and simulates a world model. We have several openings at PhD and postdoc levels, including a collab with the Georg Keller lab on designing regulatory elements to target distinct neuronal cell types. zenkelab.org/jobs

Thank you so much for choosing our work for the Ruth Chiquet Prize, it's a big honour. And thanks to Tengjun Liu and Julian Rossbroich for the wonderful collaboration.