Jean Kaddour

@jeankaddour

pyspur.dev

PhD Student in ML @ UCL

ID: 1863618842

https://www.jeankaddour.com/

14-09-2013 12:05:44

650 Tweets

1.1K Followers

2.2K Following

I really enjoyed my Machine Learning Street Talk chat with Tim at #NeurIPS2024 about some of the research we've been doing on reasoning, robustness and human feedback. If you have an hour to spare and are interested in some semi-coherent thoughts revolving around AI robustness, it may be worth

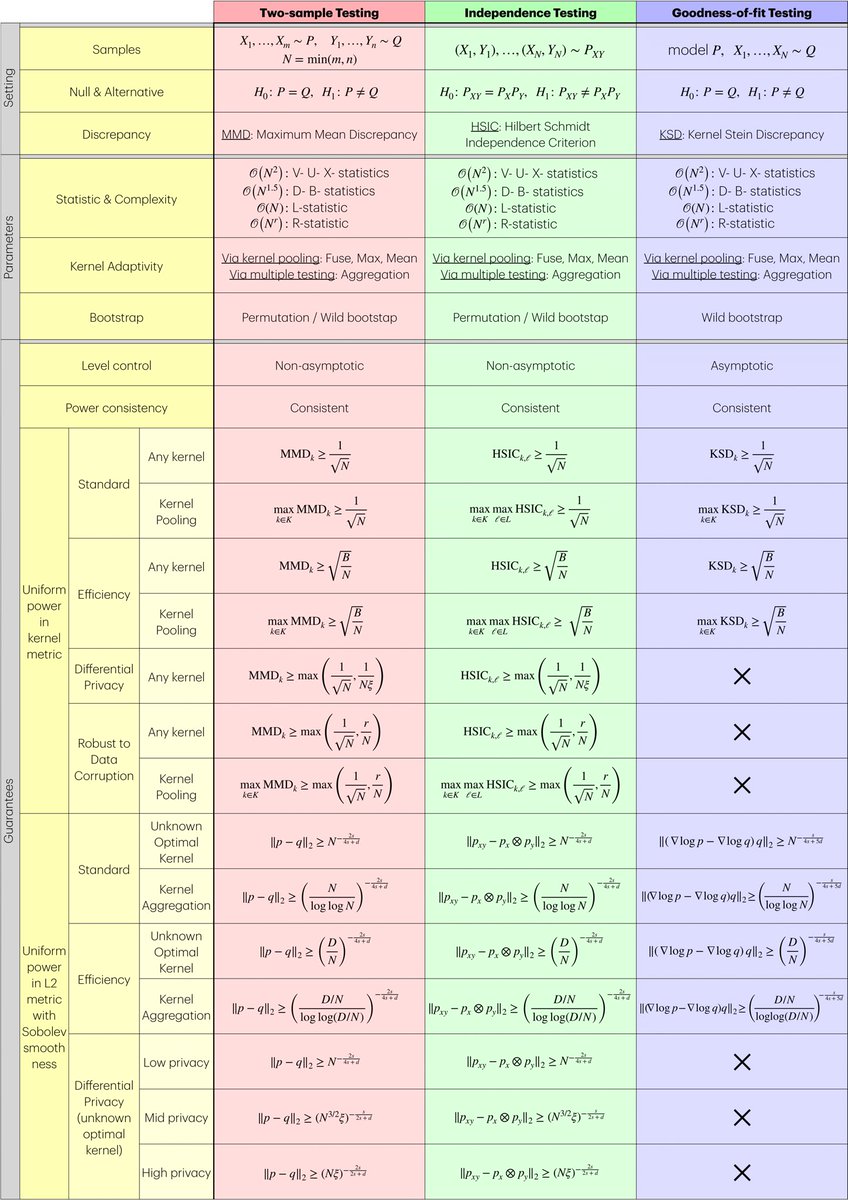

🎓 PhD in Foundational AI done ☑️ (UCL Centre for Artificial Intelligence, Gatsby Computational Neuroscience Unit). Huge thanks to my supervisors Benjamin Guedj and Arthur Gretton, and to all my collaborators! Check out my article and summary table unifying all my PhD work: A Unified View of Optimal Kernel Hypothesis Testing, arxiv.org/abs/2503.07084

ThePrimeagen every time I try a non-IDE, CLI-only coding tool