Harry Mellor

@hmellor_

ML Engineer @huggingface maintaining @vllm_project, prev @graphcoreai, @uniofoxford

ID: 1570092590204129287

14-09-2022 16:50:39

20 Tweets

111 Followers

21 Following

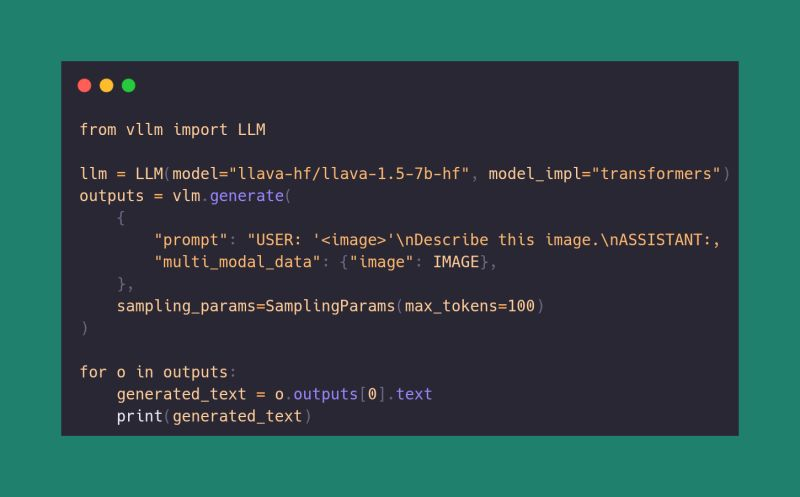

The Hugging Face Transformers ↔️ vLLM integration just leveled up: Vision-Language Models are now supported out of the box! If the model is integrated into Transformers, you can now run it directly with vLLM. github.com/vllm-project/v… Great work Raushan Turganbay 👏

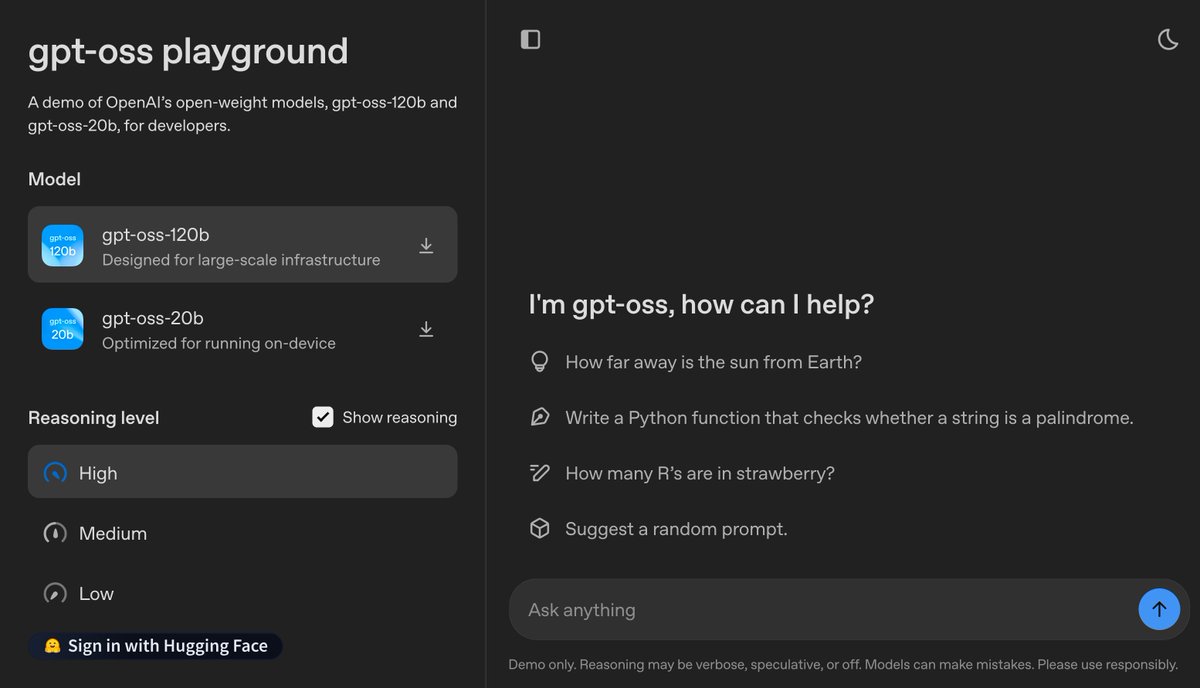

When Sam Altman told me at the AI summit in Paris that they were serious about releasing open-source models & asked what would be useful, I couldn’t believe it. But six months of collaboration later, here it is: Welcome to OSS-GPT on Hugging Face! It comes in two sizes, for both…

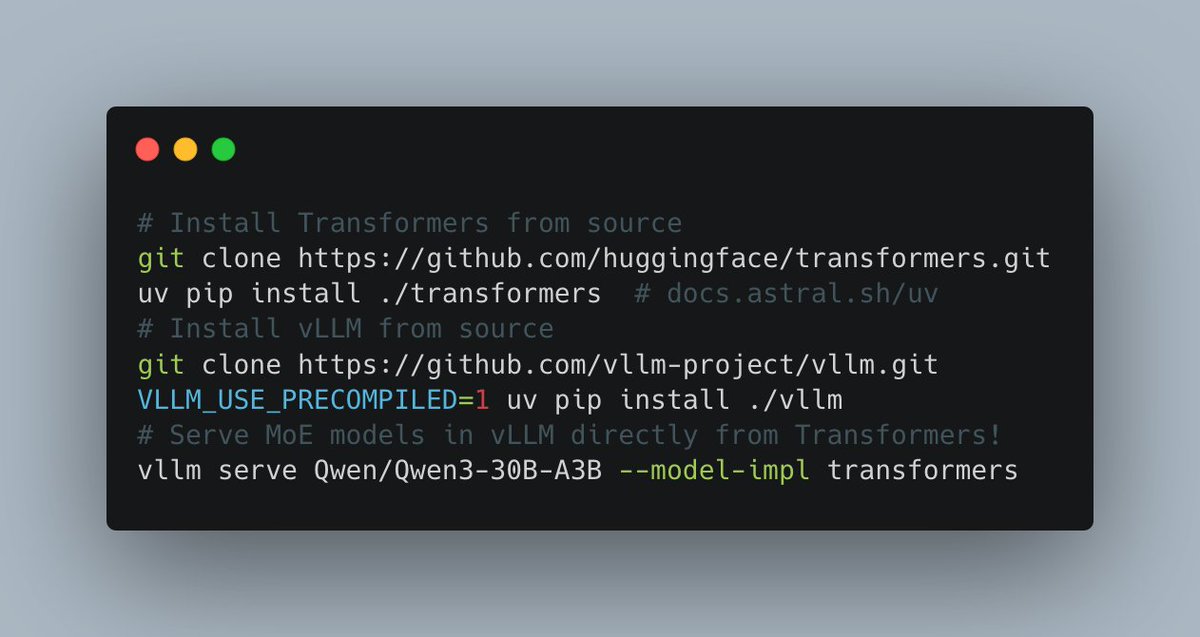

Want to deploy open models using vLLM as the inference engine? We just released a step-by-step guide on how to do it with Hugging Face Inference Endpoints, now available in the vLLM docs. let the gpus go brrr