Haitham Bou Ammar

@hbouammar

RL team leader @Huawei R&D UK | H. Assistant Professor @UCL | Ex-@Princeton, UPenn (thou/thine)

ID: 1364749022

19-04-2013 15:51:58

3.3K Tweets

4.4K Followers

368 Following

Amazing team from Astera Institute at Seeed Studio NVIDIA Robotics LeRobot home robot hackathon!

✨ VR app with stream & arm mapping

🎮 Isaac Sim BEHAVIOR env + RL

🗺️ MUSt3R-based navigation & 3D reconstruction

🧠 GR00T 1.5 training with Jetson Thor

All open source:

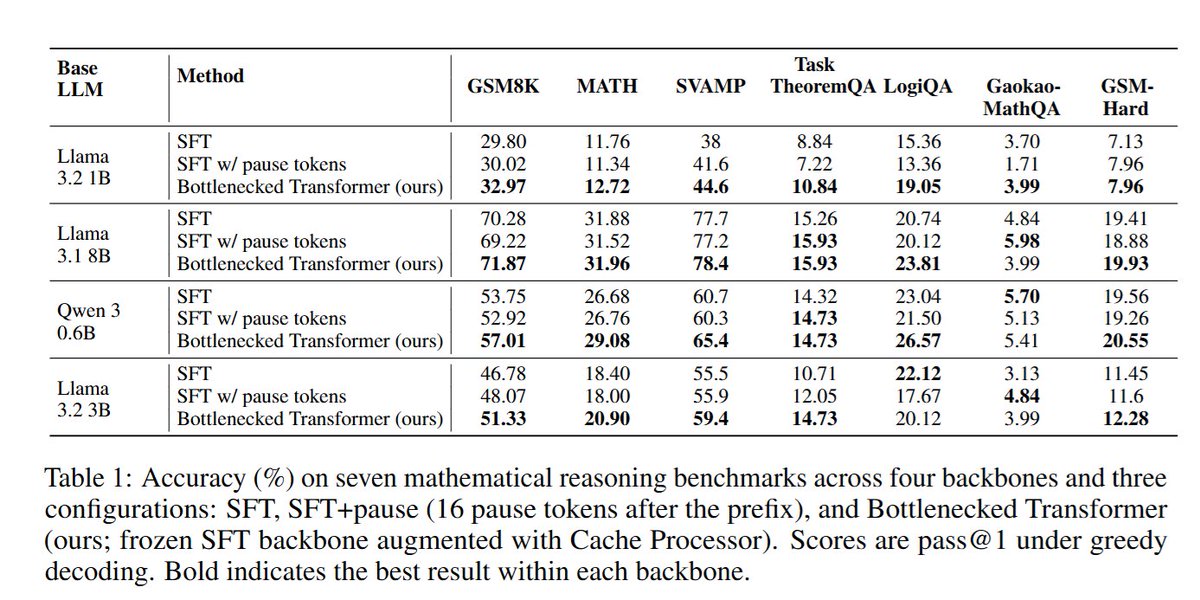

Very interesting results from Cyrus (Cyrus Wai-Chung Kwan) -- training on generated math reasoning problems within an open-ended self-play framework can yield more accurate results than training on "gold" datasets like GSM8K or MATH!

I agree with Jeremy Howard

Chris Murphy 🟧 You're being played by people who want regulatory capture. They are scaring everyone with dubious studies so that open source models are regulated out of existence.