Haven (Haiwen) Feng

@havenfeng

PhD student @MPI_IS, currently visiting @berkeley_ai. Interested in machine learning, computer vision, computer graphics, and how to understand the physical world.

ID: 1450717795948367877

http://havenfeng.github.io

Joined: 20-10-2021 06:58:06

129 Tweets

946 Followers

873 Following

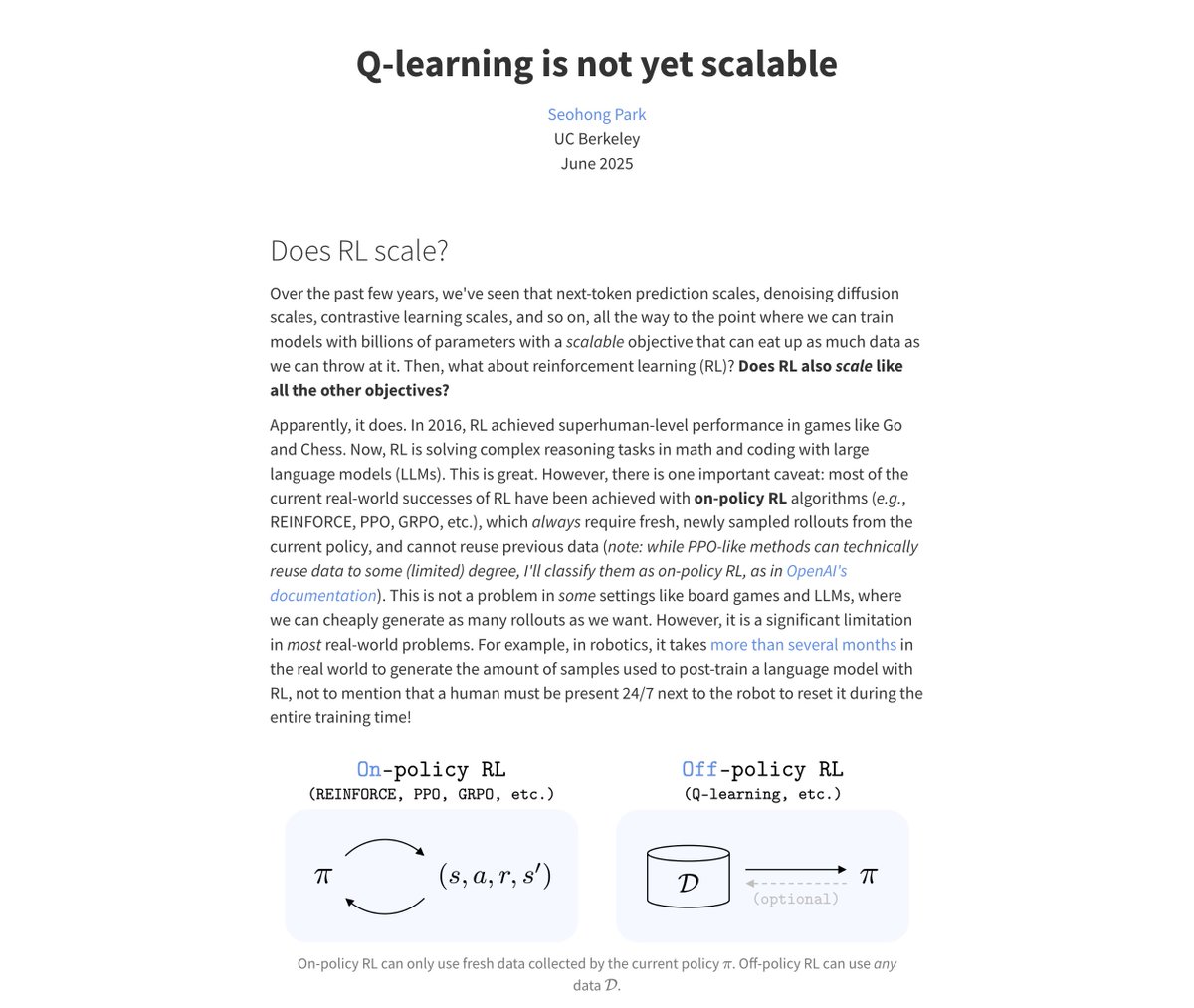

Check out our blog post at flowreinforce.github.io. We developed interactive plots that explain the connection between flow/diffusion models and RL, with a great team of collaborators: Songwei Ge, Brent Yi, Chung Min Kim, Ethan Weber, Hongsuk Benjamin Choi, Haiwen (Haven) Feng, and Angjoo Kanazawa.

Ruilong Li (@ruilong_li) on Twitter:

For everyone interested in precise 📷 camera control 📷 in transformers (e.g., video or world models):

Stop settling for Plücker raymaps. Use camera-aware relative positional encoding (PE) in your attention layers, like RoPE (for LLMs) but for cameras!

Paper & code: liruilong.cn/prope/

![Ruilong Li (@ruilong_li) on Twitter photo](https://pbs.twimg.com/media/Gv6T8JdXIAUSmiE.jpg)