graphmanic

@graphmanic

Life is short. Use Python.

ID: 1802285907766054912

16-06-2024 10:25:17

3.3K Tweets

28 Followers

50 Following

Holy shit, even David Sacks - who built White House AI policy based on strong beliefs in scaling - has come around to saying what I had been saying for years. Scaling as we knew it is done.

Want to train a GPT model with your own data and deploy it fast? 🚀 With #HuggingFace Transformers in PyCharm, you can:
✔️ Browse and add models in your IDE
✔️ Fine-tune models with custom datasets
✔️ Deploy models via FastAPI
See the step-by-step guide by Cheuk Ting Ho here:

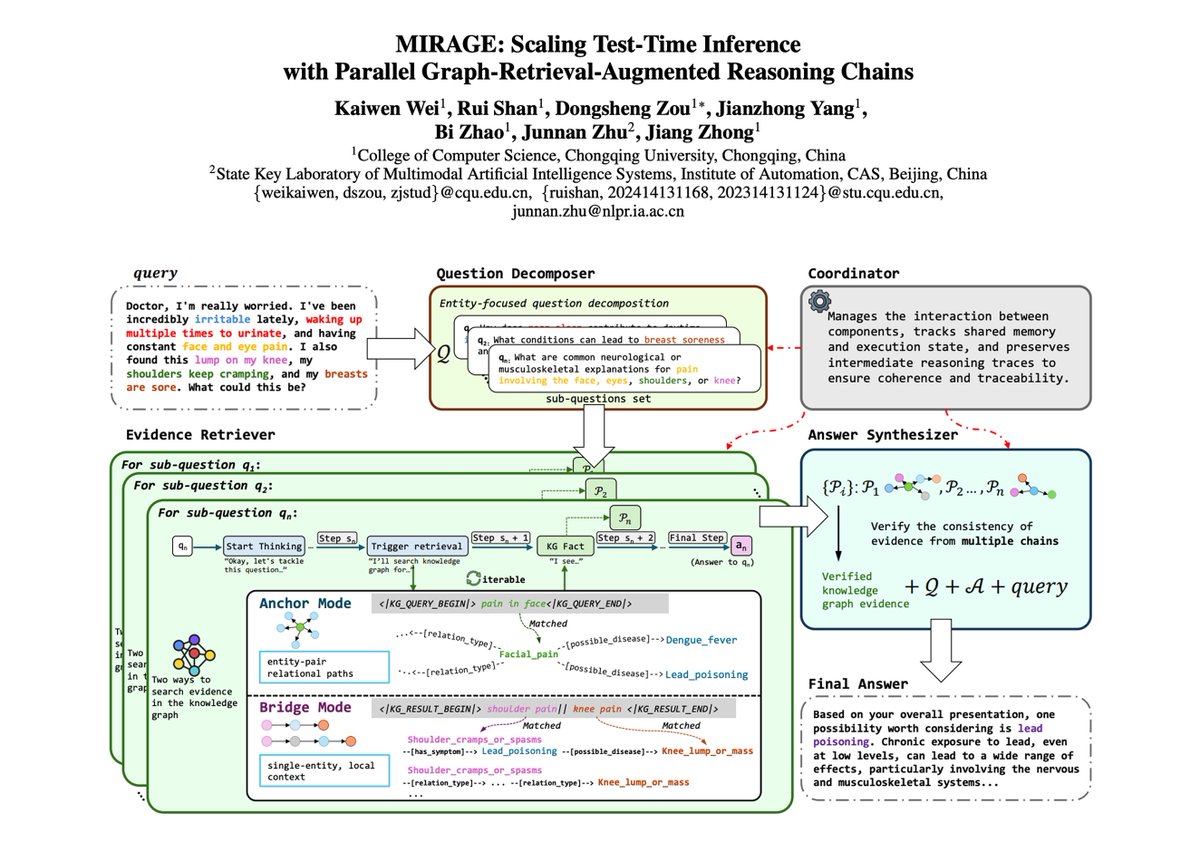

Build better RAG by letting a team of agents extract and connect your reference materials into a knowledge graph. Our new short course, “Agentic Knowledge Graph Construction,” taught by @Neo4j Innovation Lead Andreas Kollegger, shows you how. Knowledge graphs are an important way to