Eureka

@eurekates

Engineer

#DataScience

ID: 1894884626

22-09-2013 19:45:09

41 Tweets

106 Followers

969 Following

It is only rarely that, after reading a research paper, I feel like giving the authors a standing ovation. But I felt that way after finishing Direct Preference Optimization (DPO) by Rafael Rafailov @ NeurIPS, Archit Sharma, Eric, Stefano Ermon, Christopher Manning and Chelsea Finn. This

Happy to see our working paper (WP) with Shogo Sakabe and David Weinstein (so many years in the making!) out. We examine the role of codifying knowledge in the spread of the Industrial Revolution. A little thread. 1/N

Steve McCormick: It is fairly rare that you can point at an equation and say "this is obviously wrong" like in this case. More often you know they're wrong because an assumption they made disagrees with established results. E.g., I remember a case 15 years ago or so when someone repeated a

Erik Bernhardsson: It's surprising how bad the UX of most CI providers is.

What if you could make physics diagrams come alive? At #UIST2024, we will be presenting our paper, Augmented Physics, an ML-Integrated Authoring Tool for Creating Interactive Physics Simulations from Static Diagrams. Co-authors: Yi Wen, Nandi Zhang, Jarin, Rubaiat Habib, Ryo Suzuki

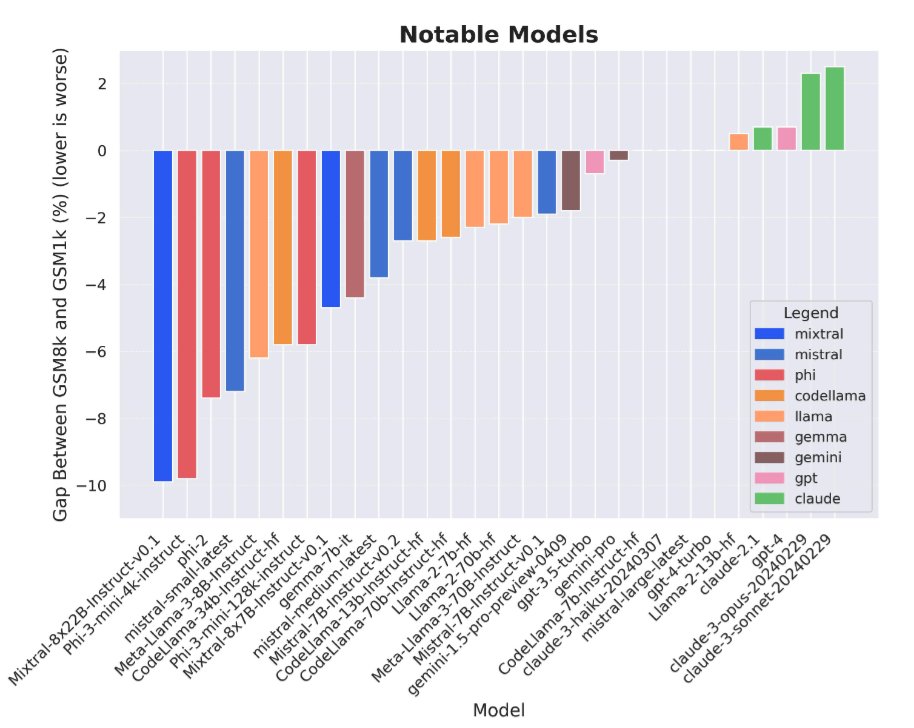

Unpopular opinion: benchmarks like these are moving the field in the wrong direction. No, I don't want an AI to be able to memorize (useless?) questions like "How many paired tendons are supported by a sesamoid bone?" in its weights. I want the "intern", as Andrej Karpathy is suggesting