Xiaochuang Han

@xiaochuanghan

PhD student at the University of Washington

ID: 4916685123

http://xhan77.github.io

16-02-2016 00:47:56

93 Tweets

567 Followers

730 Following

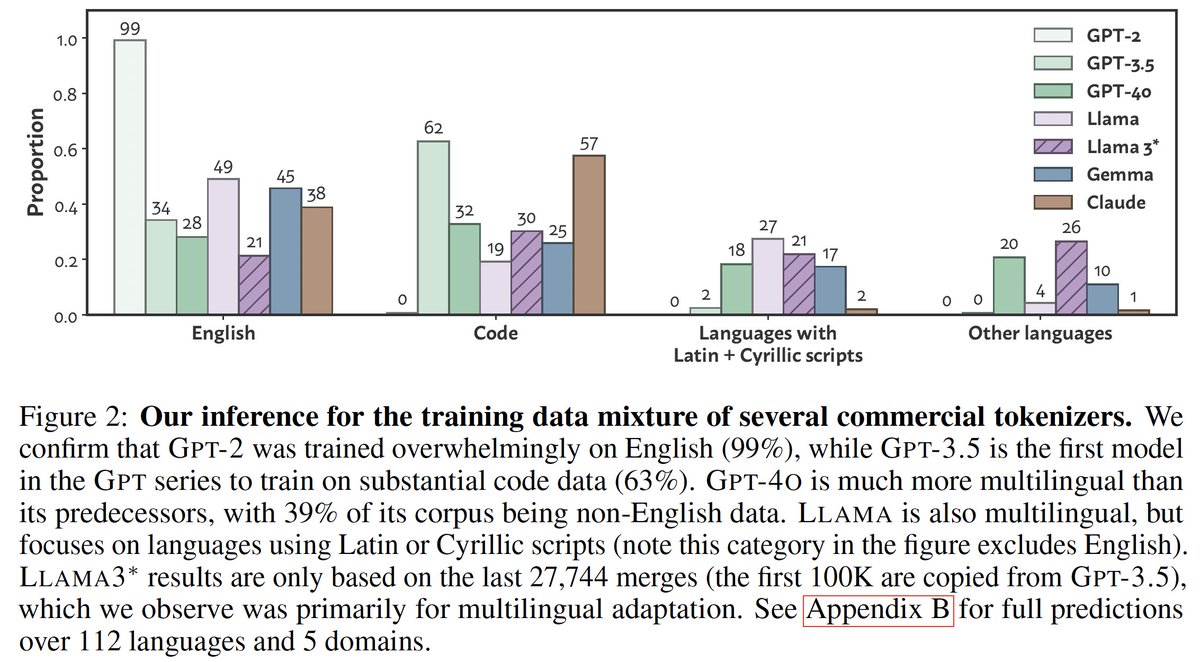

What do BPE tokenizers reveal about their training data?🧐 We develop an attack🗡️ that uncovers the training data mixtures📊 of commercial LLM tokenizers (incl. GPT-4o), using their ordered merge lists! Co-1⃣st Jonathan Hayase arxiv.org/abs/2407.16607 🧵⬇️

🤯One of the most outside-the-box-thinking usages of LLMs I have seen. Interesting work from Xiaochuang Han 🎉

Huge congrats to Oreva Ahia and Shangbin Feng for winning awards at #ACL2024! DialectBench: Best Social Impact Paper Award (arxiv.org/abs/2403.11009). Don't Hallucinate, Abstain: Area Chair Award (QA track) & Outstanding Paper Award (arxiv.org/abs/2402.00367).

🚀 Excited to share our latest work: Transfusion! A new multi-modal generative training method combining language modeling and image diffusion in a single transformer! Huge shout-out to Chunting Zhou, Omer Levy, Michi Yasunaga, Arun Babu, Kushal Tirumala, and other collaborators.

Check out JPEG-LM, a fun idea led by Xiaochuang Han -- we generate images simply by training an LM on raw JPEG bytes and show that it outperforms much more complicated VQ models, especially on rare inputs.