Thomas Walker

@thomas_m_walker

BSc Mathematics ICL - PhD ECE Rice - Mathematics for Machine Learning - Open for Research Collaborations

ID: 1459565168833220609

https://thomaswalker1.github.io/ 13-11-2021 16:55:00

62 Tweets

17 Followers

226 Following

PhD student at Rice University under the supervision of Professor Richard Baraniuk; exploring the geometry of machine learning models. thomaswalker1.github.io

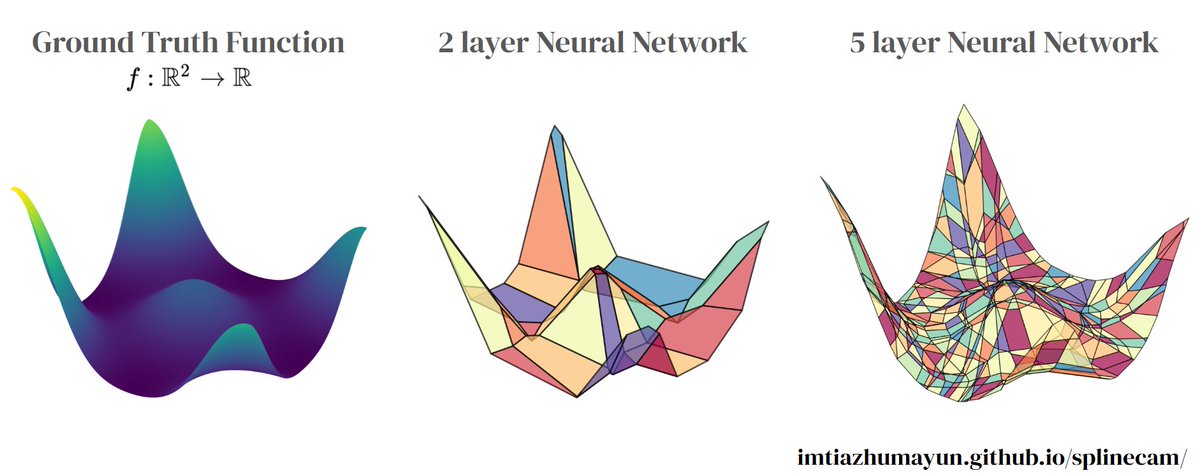

Professor Randall Balestriero discusses some exciting research he has been working on recently, in particular on spline-based interpretability:
• Why reconstruction learning can fail for perception
• How deep nets partition space like a crystal
• New insights from spline theory

Very happy to have presented my poster on “GrokAlign: Geometric Characterisation and Acceleration of Grokking” at the High Dimensional Learning Workshop at ICML: thomaswalker1.github.io/blog/grokalign…