Hossein A. (Saeed) Rahmani

@srahmanidashti

PhD Student at WI (@ucl_wi_group) | @FAICDT1 | @UCL

ID: 975115762712096768

http://rahmanidashti.github.io 17-03-2018 21:04:49

735 Tweets

925 Followers

2.2K Following

Proud to announce that Dr Laura Ruis defended her PhD thesis titled "Understanding and Evaluating Reasoning in Large Language Models" last week 🥳. Massive thanks to Noah Goodman and Emine Yilmaz for examining! As is customary, Laura received a personal mortarboard from

Congrats, Dr. Laura Ruis! Laura is amazing, and I'm very happy my PhD journey overlapped with hers. I've learned so much from her, from working together on a project to the many times we discussed my research and its challenges, and those are just a few examples.

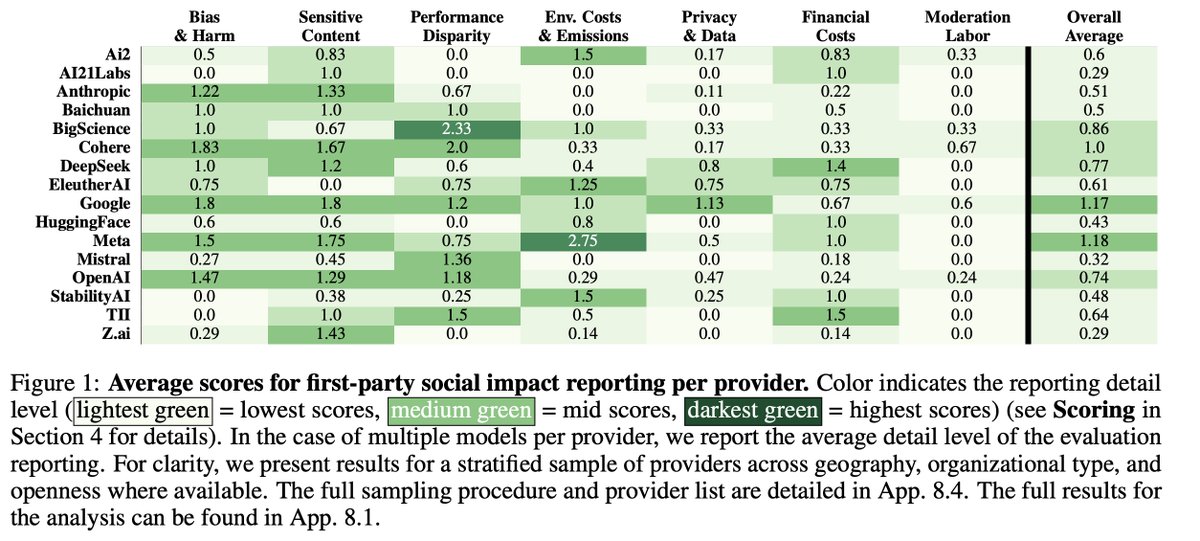

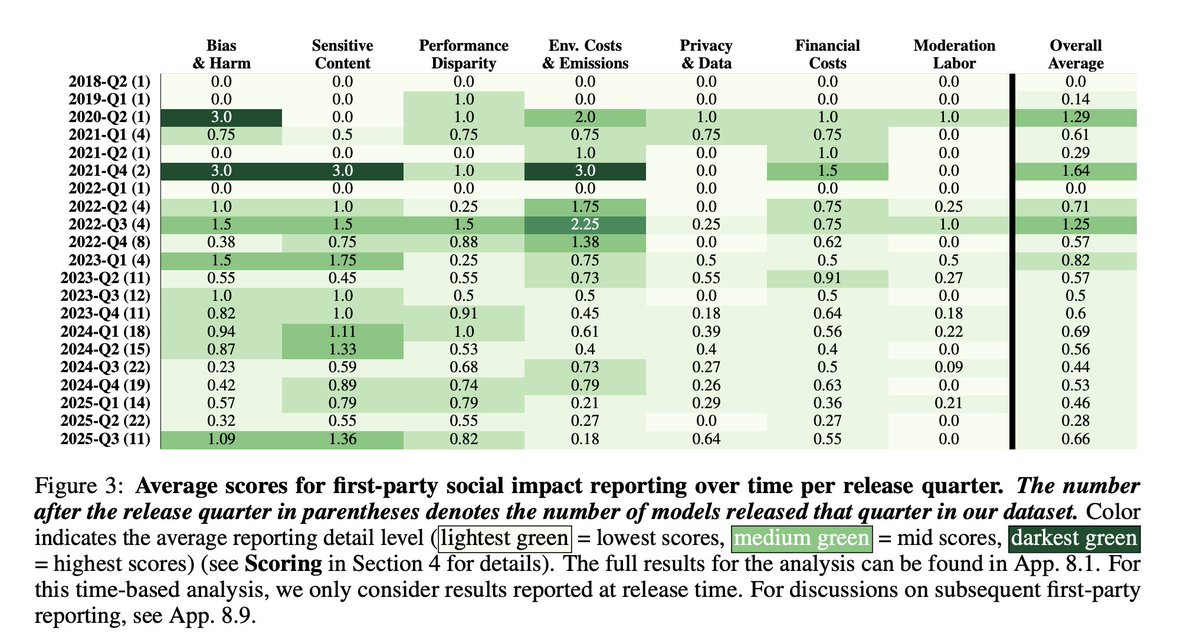

It was a pleasure to contribute to this EvalEval Coalition project on social impact evaluation: “Who Evaluates AI’s Social Impacts? Mapping Coverage and Gaps in First and Third Party Evaluations”, led by Avijit Ghosh ➡️ Neurips, Anka Reuel | @ankareuel.bsky.social, and Jenny Chim! More details and preprint👇.