Arkadiy Saakyan

@rkdsaakyan

PhD student @ColumbiaCompSci @columbianlp working on human-AI collaboration, AI creativity and explainability. prev. intern @GoogleDeepMind, @AmazonScience

ID: 1439915410263101446

http://asaakyan.github.io 20-09-2021 11:33:00

40 Tweets

147 Followers

532 Following

New paper with students from Barnard College on testing the orthogonal thinking / abstract reasoning capabilities of Large Language Models using the fascinating yet frustratingly difficult New York Times Connections game. #NLProc #LLMs #GPT4o #Claude3opus 🧵(1/n)

Thanks to everyone who attended FigLangWorkshop at #naacl2024! If you weren't able to make it, we've made recordings of the panel and keynote available! ☕️ Panel on creativity in the age of LLMs: sites.google.com/view/figlang20… 🎤 Vered Shwartz's keynote: sites.google.com/view/figlang20…

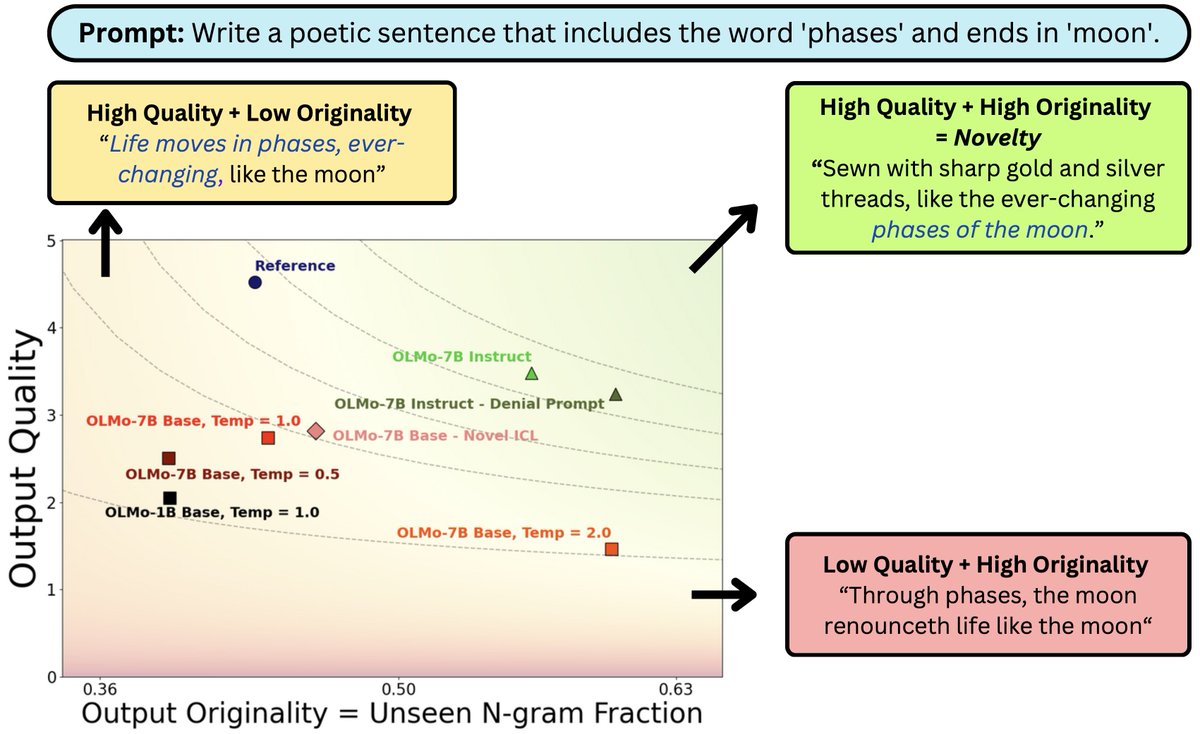

What does it mean for #LLM output to be novel? In work w/ John (Yueh-Han) Chen, Jane Pan, Valerie Chen, and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

A bit late to announce, but I’m excited to share that I'll be starting as an assistant professor at the University of Maryland (UMD) Department of Computer Science this August. I'll be recruiting PhD students this upcoming cycle for fall 2026. (And if you're a UMD grad student, sign up for my fall seminar!)