Peng (Richard) Xia ✈️ ICLR 2025

@richardxp888

PhD Student @UNC @unccs | Formerly @MonashUni | Multimodal, Agent, RAG, Healthcare

ID: 1517724052894683136

https://richard-peng-xia.github.io/

23-04-2022 04:36:39

61 Tweets

496 Followers

933 Following

Thanks for posting our work, Rohan Paul!

Thanks, Robert Youssef, for sharing our work! Nice summary!

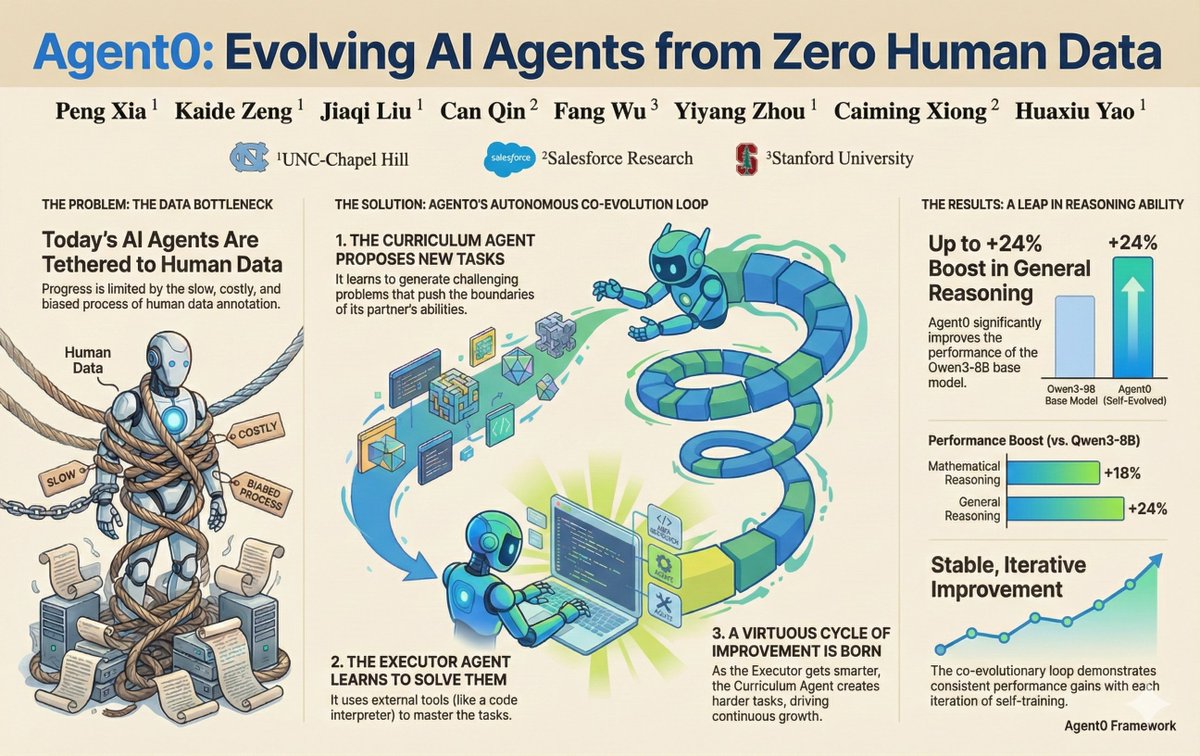

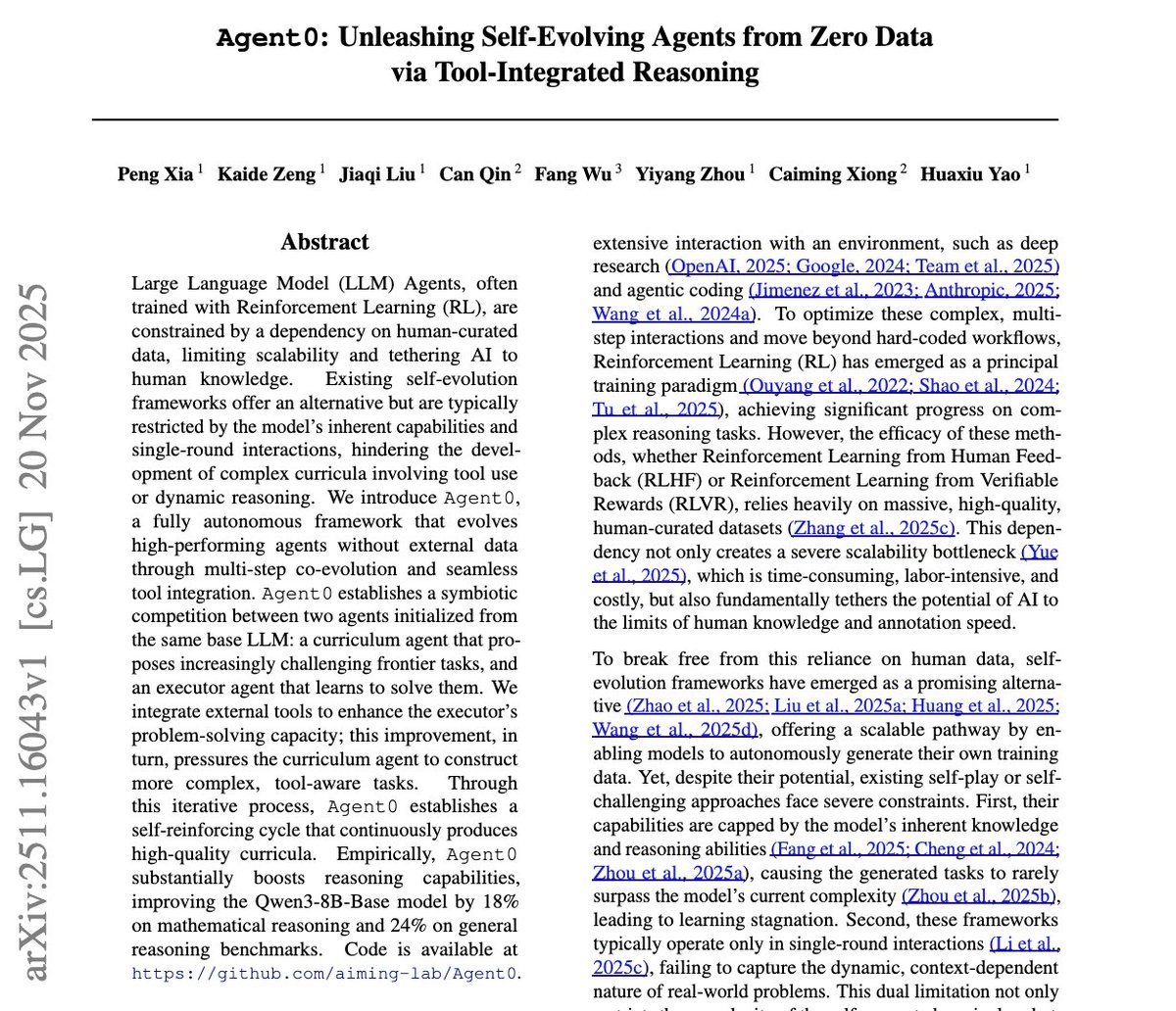

Peng (Richard) Xia ✈️ NeurIPS 25, Huaxiu Yao ✈️ NeurIPS 2025, Jiaqi Liu ✈️ NeurIPS 2025, Yiyang Zhou, Can Qin, Caiming Xiong, Fang Wu

Another week, another paper claiming we finally cracked autonomous AI. Agent0: two copies of the same base model play an elaborate game of curricular ping-pong inside their context window, using a Python REPL as the ball. No external data, no human labels, no lasting updates

Yiping Wang (@ypwang61) on Twitter:

8B model can outperform AlphaEvolve on open optimization problems by scaling compute for inference or test-time RL🚀!

⭕Circle packing:

AlphaEvolve (Gemini-2.0-Flash/Pro): 2.63586276

Ours (DeepSeek-R1-0528-Qwen3-8B): 2.63598308

🔗 in 🧵

[1/n]

![Yiping Wang (@ypwang61) on Twitter photo](https://pbs.twimg.com/media/G7Fq7wfbEAA74S1.jpg)