Pavan Kapanipathi

@pavankaps

Researcher at IBM Research (Views are my own)

ID: 40900291

https://researcher.watson.ibm.com/researcher/view.php?person=us-kapanipa 18-05-2009 15:52:32

819 Tweets

410 Followers

763 Following

Happy to see that our chemical language foundation model, MoLFormer, is highlighted in Nature Computational Science. In addition to showing competitive performance on standard prediction benchmarks, it also shows first-of-a-kind emergent behavior with scaling, e.g. learning of geometry and taste

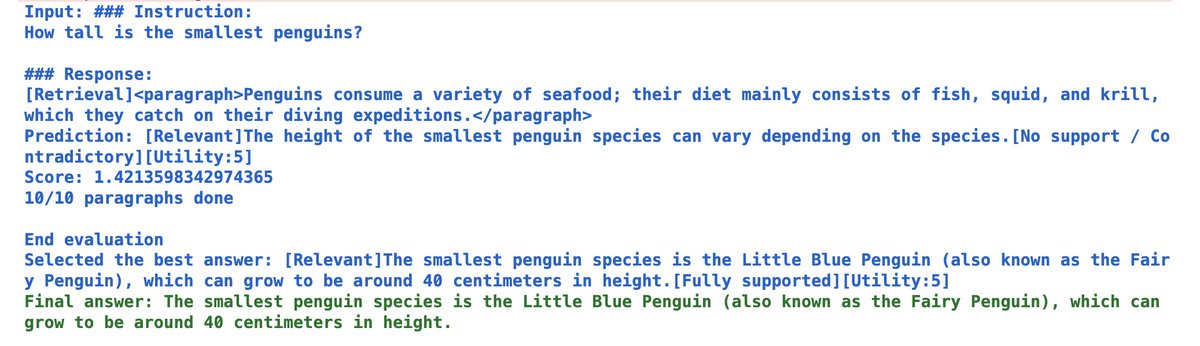

Self-RAG in LlamaIndex 🦙 We’re excited to feature Self-RAG, a special RAG technique where an LLM can do self-reflection for dynamic retrieval, critique, and generation (Akari Asai et al.). It’s implemented in LlamaIndex 🦙 as a custom query engine with

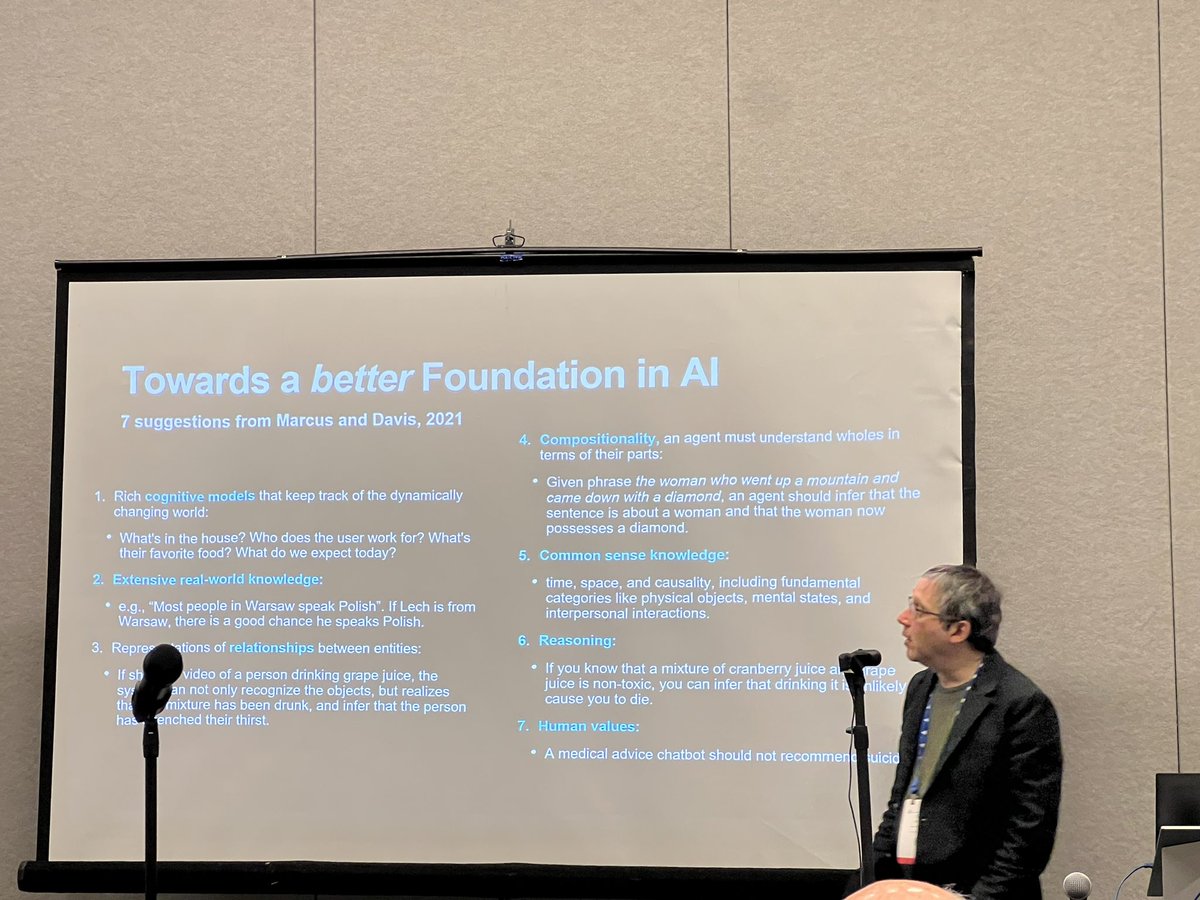

AAAI workshop (nuclear-workshop.github.io): Neuro-Symbolic Learning and Reasoning in the Era of Large Language Models. Gary Marcus talk on “No AGI without Neurosymbolic AI.” Asim Munawar, Artur d'Avila Garcez, Francesca Rossi

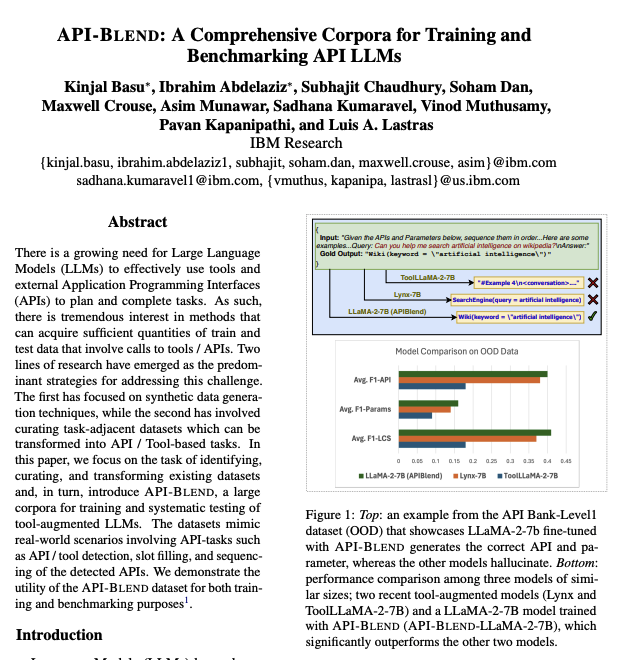

New IBM Research paper builds a judge for tool calls that makes tool-using LLMs more accurate. It reports up to 25% higher accuracy on tool calling. Current judges rate normal text, not tool calls, so they miss wrong names, bad or missing parameters, and extra calls. The

An exciting milestone for AI in science: Our C2S-Scale 27B foundation model, built with Yale University and based on Gemma, generated a novel hypothesis about cancer cellular behavior, which scientists experimentally validated in living cells. With more preclinical and clinical tests,