Minsu Kim

@minsuuukim

AI Researcher at Mila and KAIST

ID: 1897933692162772992

https://minsuukim.github.io/ 07-03-2025 08:53:59

8 Tweet

26 Followers

96 Following

Is there a universal strategy to turn any generative model (GANs, VAEs, diffusion models, or flows) into a conditional sampler, or to fine-tune it to optimize a reward function? Yes! Outsourced Diffusion Sampling (ODS), accepted to ICML Conference, does exactly that!

Every frontier AI system should be grounded in a core commitment: to protect human joy and endeavour. Today, we launch LawZero - LoiZéro, a nonprofit dedicated to advancing safe-by-design AI. lawzero.org

Today marks a big milestone for me. I'm launching LawZero - LoiZéro, a nonprofit focusing on a new safe-by-design approach to AI that could both accelerate scientific discovery and provide a safeguard against the dangers of agentic AI.

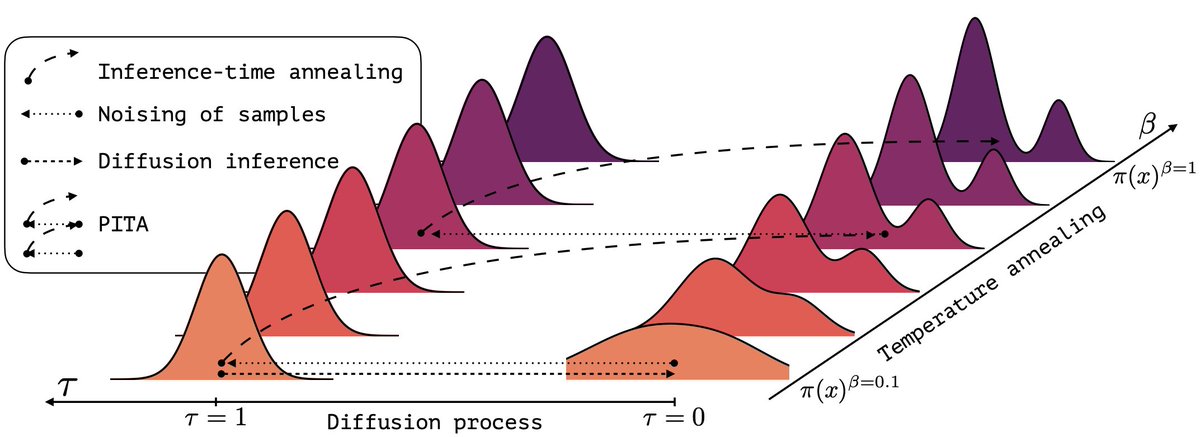

(1/n) Sampling from the Boltzmann density better than Molecular Dynamics (MD)? It is possible with PITA 🫓 Progressive Inference Time Annealing! A spotlight at the GenBio Workshop @ ICML25 of ICML Conference 2025! PITA learns from "hot," easy-to-explore molecular states 🔥 and then cleverly "cools"

Come check out our poster this Wednesday at 4:30pm at ICML Conference! Happy to chat about diffusion, GFlowNets and RL!

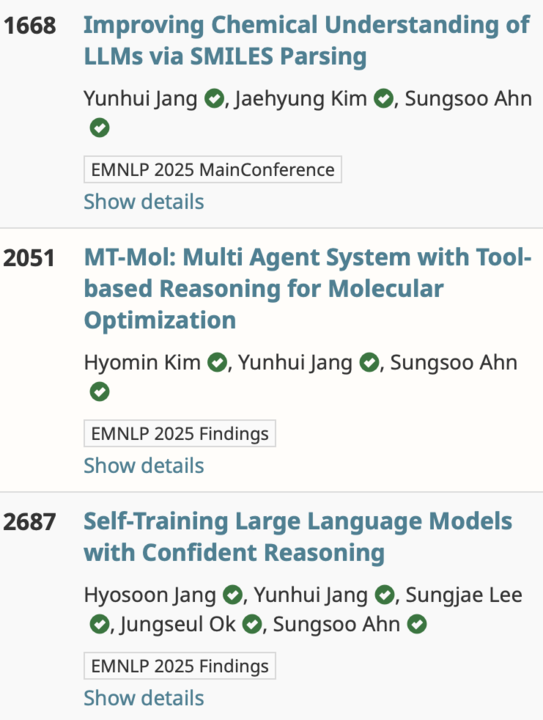

🎉 3 papers accepted to #EMNLP2025! Huge thanks to my co-authors Sungsoo Ahn, Jaehyung, Hyomin Kim, and Hyosoon. 1️⃣ CLEANMOL: SMILES parsing for pre-training (or for RL?) LLMs 2️⃣ MT-Mol: an agent for molecular optimization 3️⃣ CORE-PO: RL to prefer high-confidence reasoning paths

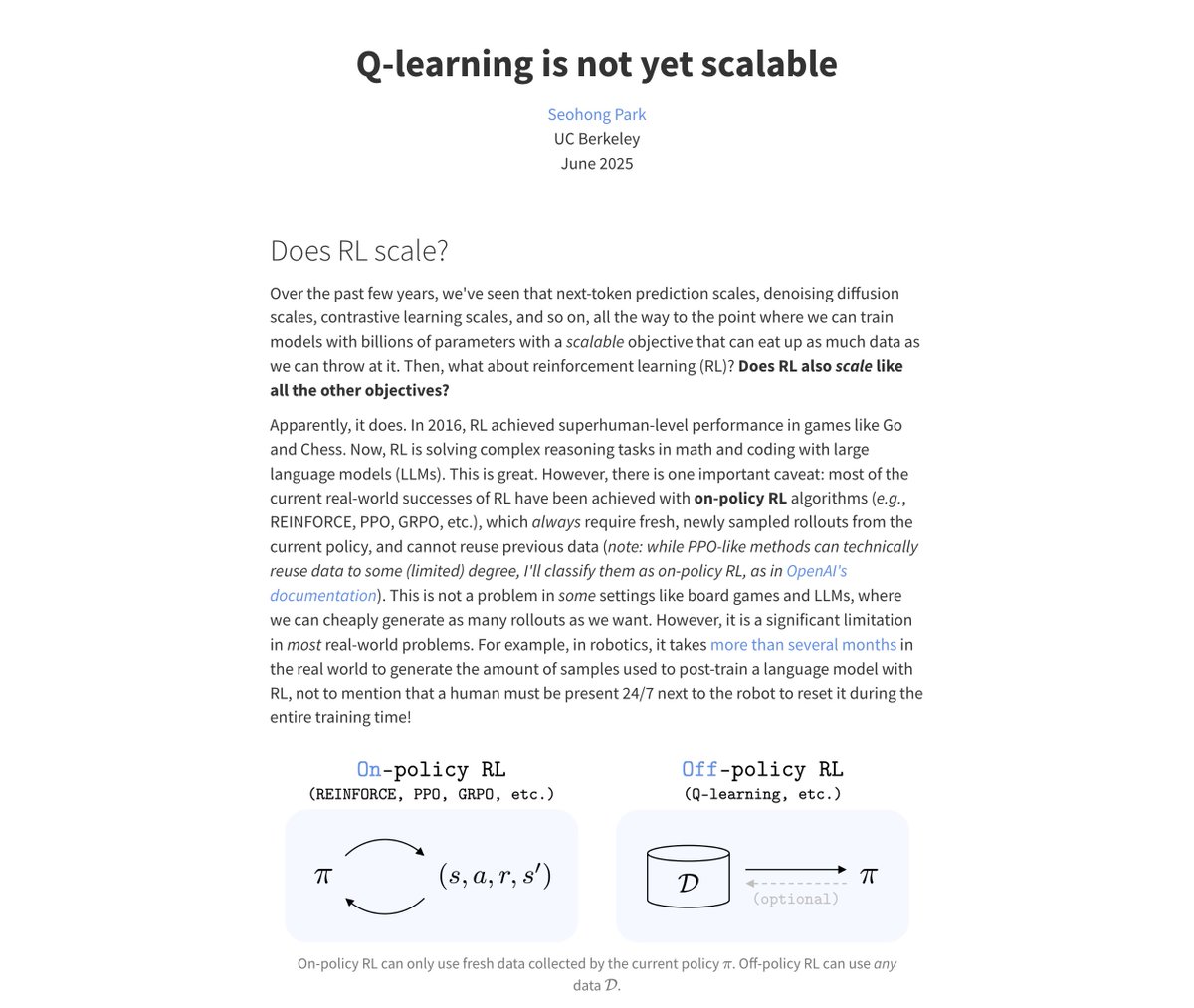

![fly51fly (@fly51fly) on Twitter: [LG] Fast Monte Carlo Tree Diffusion: 100x Speedup via Parallel Sparse Planning. J Yoon, H Cho, Y Bengio, S Ahn [KAIST & Mila – Quebec AI Institute] (2025). arxiv.org/abs/2506.09498](https://pbs.twimg.com/media/GtRgzjDbMAAbC9N.jpg)