Kenny Peng

@kennylpeng

CS PhD student at Cornell Tech. Interested in interactions between algorithms and society. Princeton math '22.

ID: 1145703952417218562

http://kennypeng.me 01-07-2019 14:41:23

57 Tweets

97 Followers

24 Following

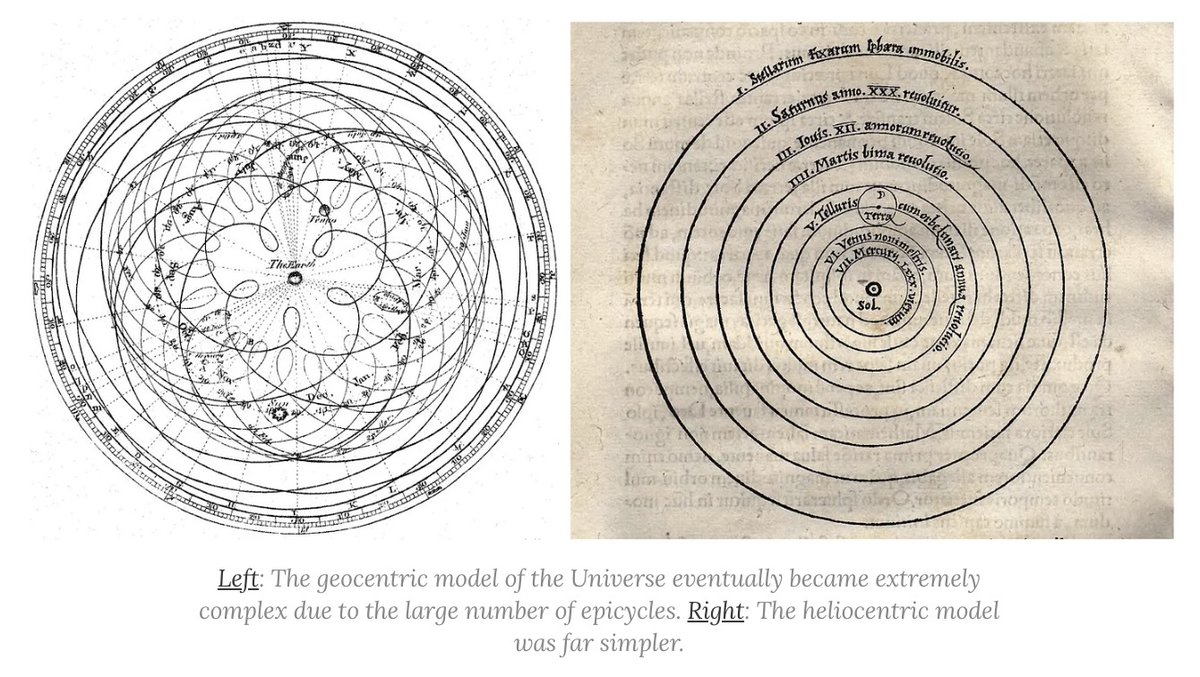

The mainstream view of AI for science says AI will rapidly accelerate science, and that we're on track to cure cancer, double the human lifespan, colonize space, and achieve a century of progress in the next decade. In a new AI Snake Oil essay, Arvind Narayanan and I argue that

1/10. In a new paper with Hamidah Oderinwale and Jon Kleinberg, we mapped the family trees of 1.86 million AI models on Hugging Face — the largest open-model ecosystem in the world. AI evolution looks kind of like biology, but with some strange twists. 🧬🤖

🚨 New postdoc position in our lab at UC Berkeley EECS! 🚨 (Please retweet and share with relevant candidates.) We seek applicants with experience in language modeling who are excited about high-impact applications in the health and social sciences! More info in thread 1/3

Many thanks to Open Philanthropy for supporting our work on sparse autoencoders for hypothesis generation (arxiv.org/abs/2502.04382) - in particular, using these techniques to build safer and better-aligned LLMs! openphilanthropy.org/grants/uc-berk…