Junlin Wang

@junlinwang3

PhD Student @duke_nlp. Interning at @togethercompute. Inference-time scaling, multi-agent systems

ID: 2224217683

http://junlinwang.com 01-12-2013 04:34:47

35 Tweets

185 Followers

225 Following

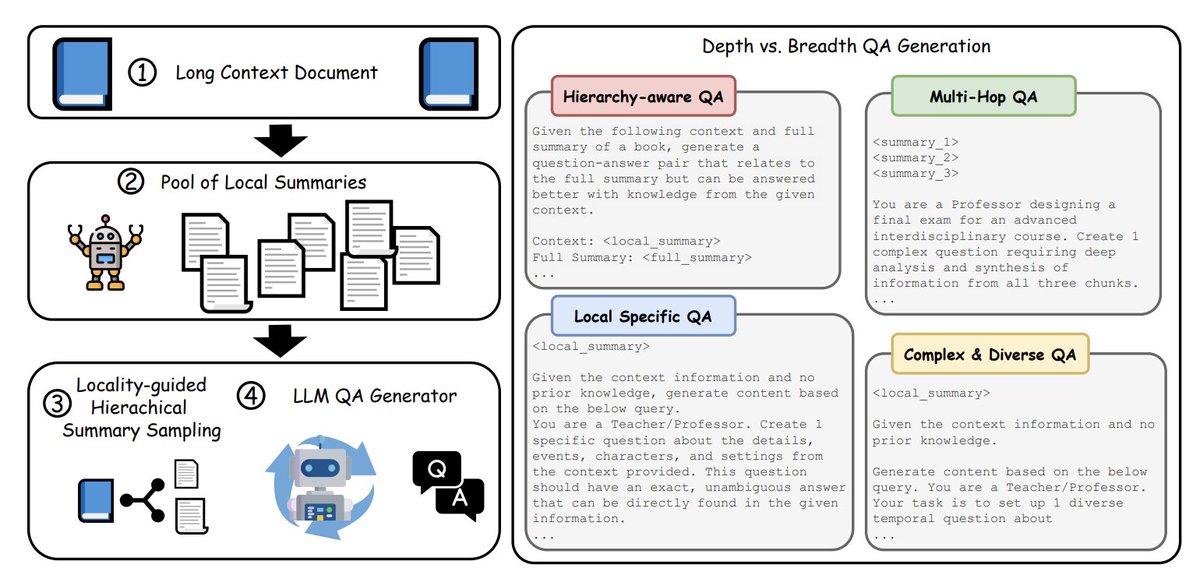

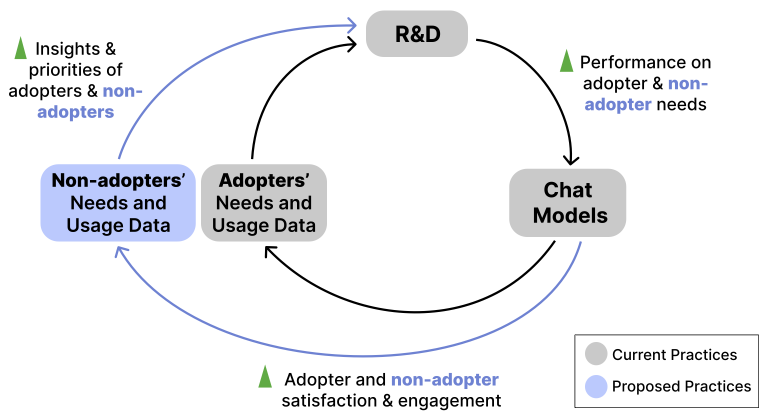

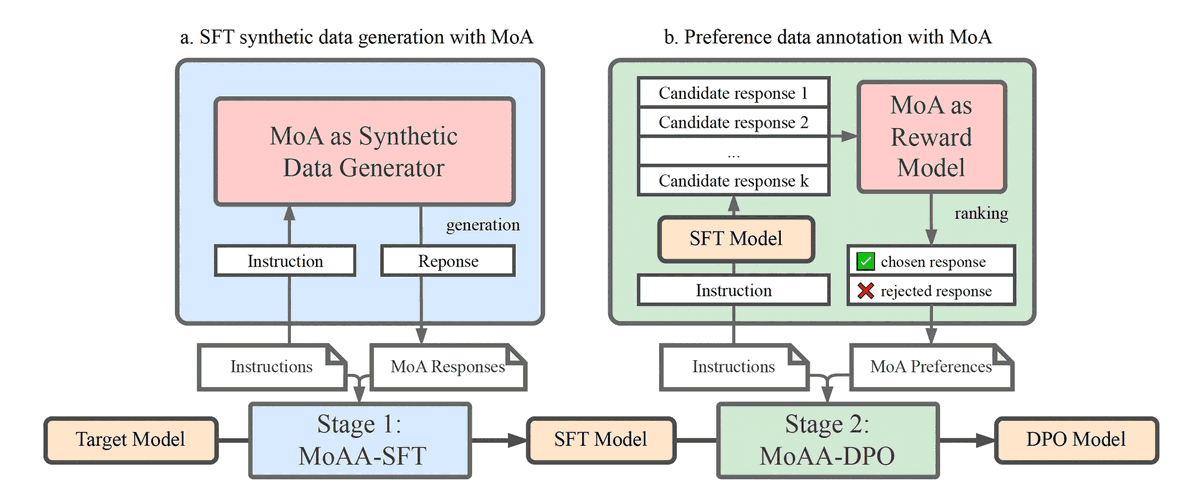

Our new #icml2025 paper w/Together AI shows how to use synthetic data from Mixture-of-Agents to boost LM fine-tuning + RL. Turns out a mixture of small agents is much more effective/cheaper than using a large LM as teacher 🌐together.ai/blog/moaa 📜arxiv.org/abs/2505.03059

Most AI benchmarks test the past. But real intelligence is about predicting the future. Introducing FutureBench — a new benchmark for evaluating agents on real forecasting tasks that we developed with Hugging Face 🔍 Reasoning > memorization 📊 Real-world events 🧠 Dynamic,

No better time to learn about that #AI thing everyone's talking about... 📢 I'm recruiting PhD students in Computer Science or Information Science at Cornell Bowers Computing and Information Science! If you're interested, apply to either department (yes, either program!) and list me as a potential advisor!