Jiaxin Huang

@jiaxinhuang0229

Assistant professor @WUSTL CSE.

LLM, NLP, ML, Data Mining. PhD from @IllinoisCS. Microsoft Research PhD Fellow.

ID: 1532511461133541376

https://teapot123.github.io/

02-06-2022 23:56:32

23 Tweets

438 Followers

76 Following

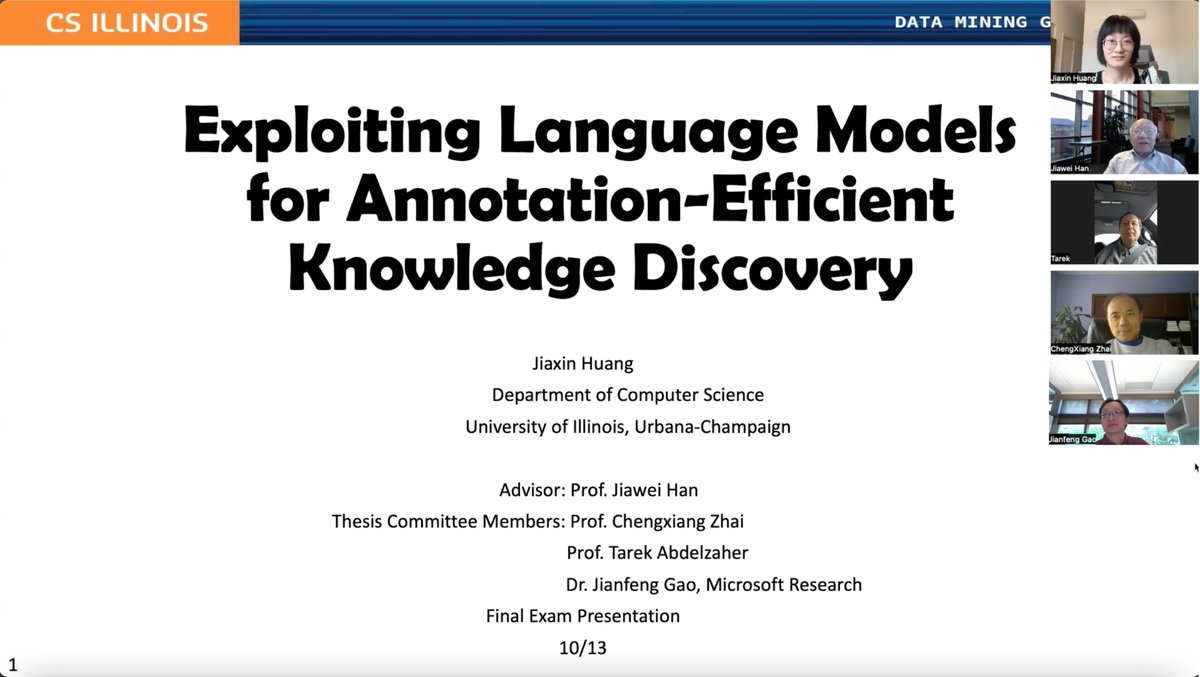

🎓 Just passed my PhD Thesis Defense! 🎉 Super lucky to have Prof. Jiawei Han as my advisor, and huge thanks to my Thesis Committee members Prof. Chengxiang Zhai, Prof. Tarek Abdelzaher, and Dr. Jianfeng Gao. Shoutout to my awesome co-authors for their unwavering support!

Curious about efficient many-shot ICL with LLMs? Our new paper led by ChengSong Huang introduces LARA, which divides & reweights in-context examples to ensure ✅ Better Performance ✅ Improved Scalability ✅ No Need to Access Model Parameters ✅ Less Memory Usage

🤨Ever wonder why RLHF-trained LLMs are overconfident? 🚀Check out our new work led by Jixuan Leng, revealing that reward models themselves are biased towards high-confidence responses!😯 🥳We introduce two practical solutions (PPO-M & PPO-C) to improve language model

Our paper was accepted to ICML 2025! If you're working on RL for reasoning, consider adding more logical puzzle data to your training and eval. Share your ideas for logical reasoning tasks for ZebraLogic v2 and the RL studies you'd like to see! Many thanks to my

Excited to share our #ICML25 paper (led by Zhepei Wei) on accelerating LLM decoding! ⚡️ AdaDecode predicts tokens early from intermediate layers 🙅‍♂️ No drafter model needed 🪶 Just lightweight LM heads ✨ Output consistency with standard autoregressive decoding Thread👇

🚀🚀Excited to share our new work on speculative decoding, led by Langlin Huang! We tackle a key limitation of draft models, which predict lower-quality tokens at later positions, and present PosS, which generates high-quality drafts!

Thrilled to share this exciting work, R-Zero, from my student ChengSong Huang, in which an LLM learns to reason from Zero human-curated data! The framework co-evolves a "Challenger" that proposes difficult tasks and a "Solver" that solves them. Check out more details in the