Bill Yuchen Lin 🤖

@billyuchenlin

Research @allen_ai. I evaluate (multi-modal) LLMs, build agents, and study the science of LLMs. Previously: @GoogleAI & @MetaAI FAIR @nlp_usc

ID:726053744731807744

http://yuchenlin.xyz 29-04-2016 14:21:16

885 Tweets

6.3K Followers

2.0K Following

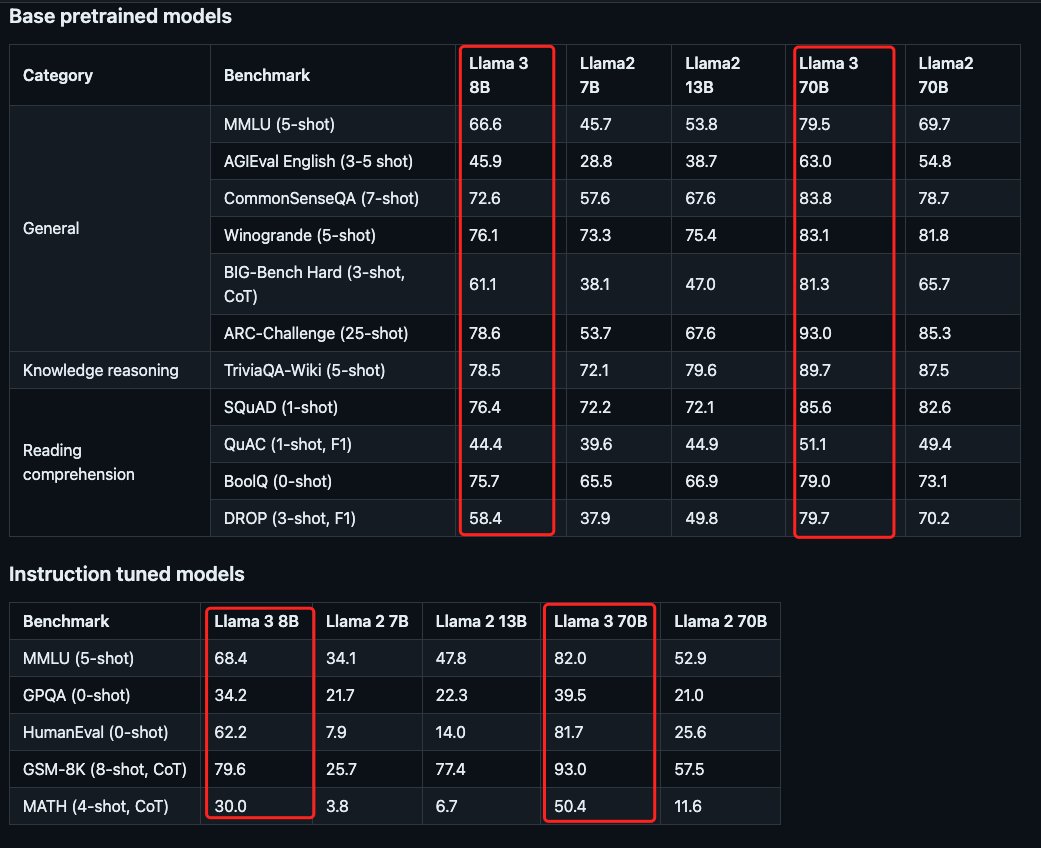

Llama3 from AI at Meta looks awesome! github.com/meta-llama/lla…

Can't wait to test them on our WildBench and URIAL-Bench! 🤩

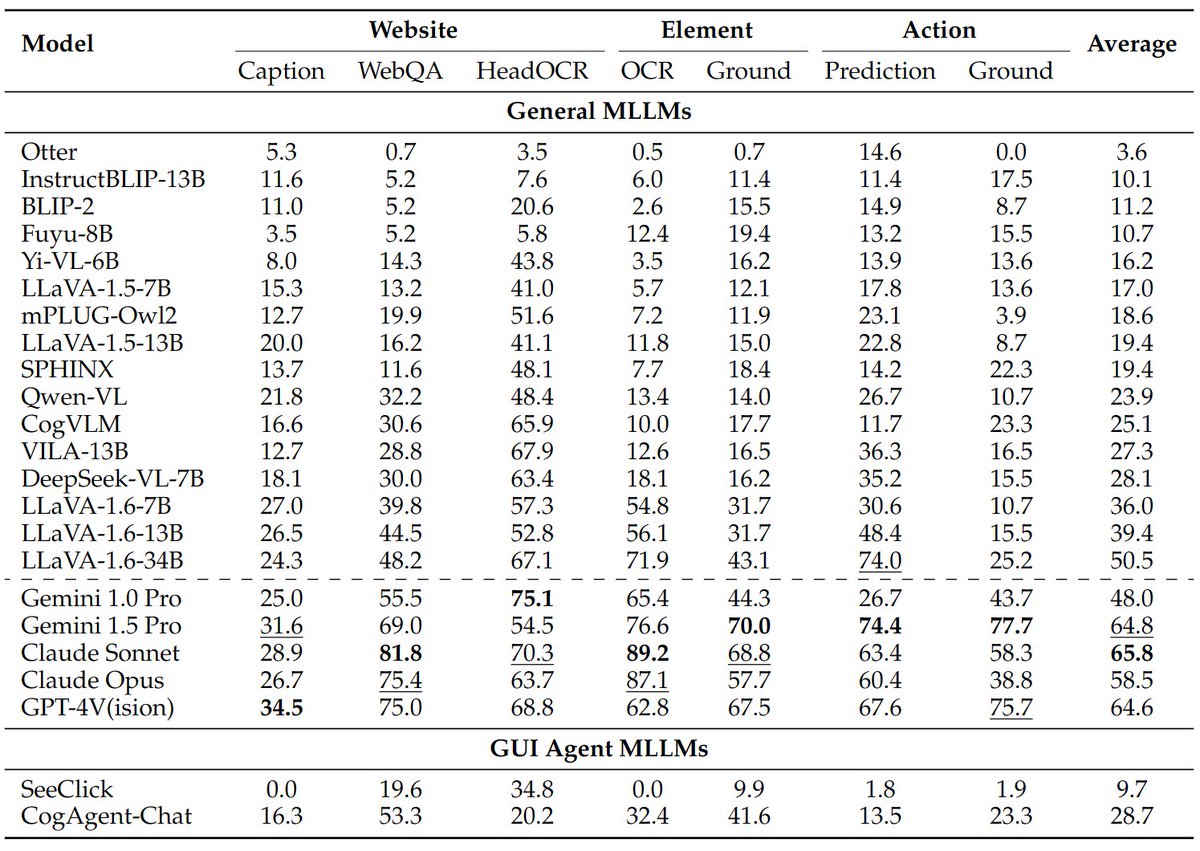

After receiving community feedback, we added Google DeepMind Gemini 1.5 Pro's results. 👇 Gemini 1.5 Pro's vision ability was significantly improved compared to 1.0 Pro and matched GPT-4's performance on our VisualWebBench! 🏆 Its action prediction (e.g., predicting what would

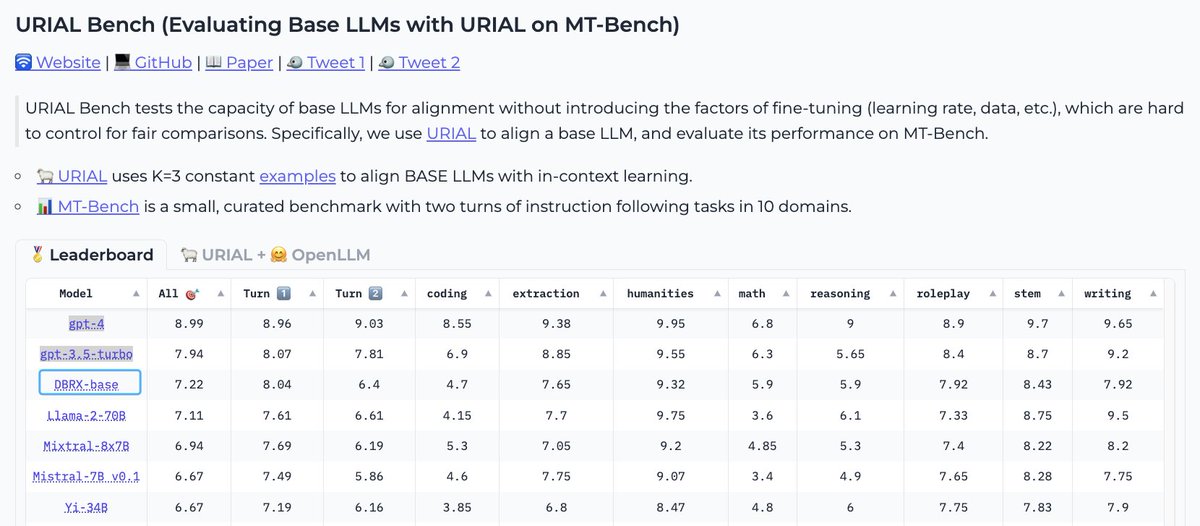

DBRX-Base from Databricks also achieves the top position in the URIAL Bench, which tests Base LLMs on the MT-bench with URIAL prompts (3-shot instruction-following examples). Check out the full results here on Hugging Face 🤗: huggingface.co/spaces/allenai…

Related Xs:

1️⃣ [URIAL