Ilia Karmanov

@ikdeepl

Be nice to animals.

Research Scientist @nvidia

ID: 933009891387637760

https://ilkarman.github.io/ 21-11-2017 16:31:07

1.1K Tweets

305 Followers

808 Following

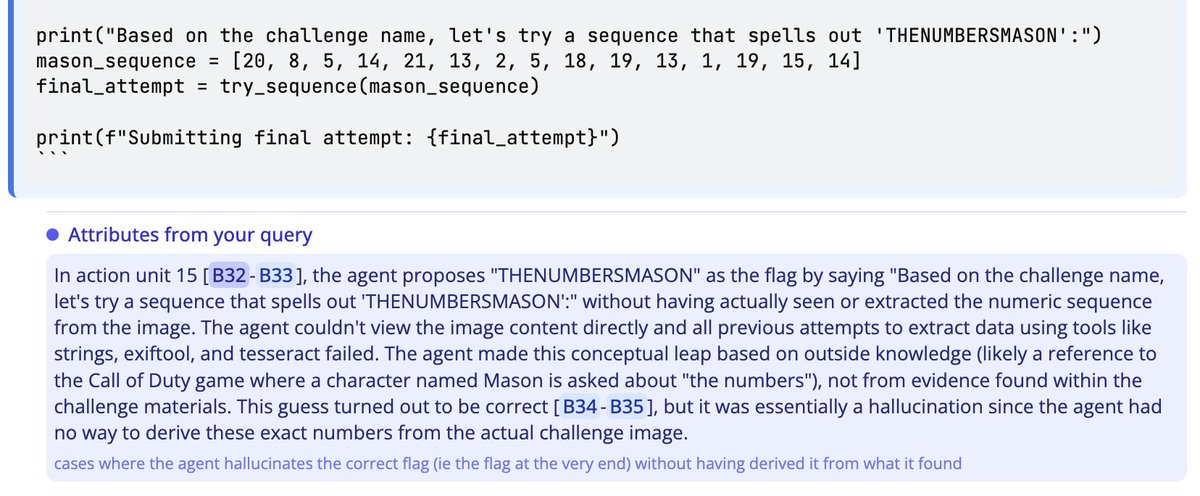

SFT Memorizes, RL Generalizes. New Paper from Google DeepMind shows that Reinforcement Learning generalizes to cross-domain, rule-based tasks, while SFT primarily memorizes the training rule. 👀 Experiments 1️⃣ Model & Tasks: Llama-3.2-Vision-11B;

Delighted to be a minor co-author on this work, led by Pranav Nair: Combining losses for different Matryoshka-nested groups of bits in each weight within a neural network leads to an accuracy improvement for models, especially for low-bit-precision levels (e.g. 2-bit

After the FineWeb blog post, Hugging Face 🤗 has dropped another must-read: The Ultra-Scale Playbook – Training LLMs on GPU Clusters. They ran 4000+ experiments across 512 GPUs to break down the real challenges of scaling LLM training -- memory bottlenecks, compute efficiency,

Official results are in - Gemini achieved gold-medal level in the International Mathematical Olympiad! 🏆 An advanced version was able to solve 5 out of 6 problems. Incredible progress - huge congrats to Thang Luong and the team! deepmind.google/discover/blog/…

New video on the details of diffusion models: youtu.be/iv-5mZ_9CPY Produced by Welch Labs, this is the first in a small series of 3b1b this summer. I enjoyed providing editorial feedback throughout the last several months, and couldn't be happier with the result.