Barney Pell

@barneyp

Barney Pell is an entrepreneur and VC. Barney Pell's Syndicate, Ecoation, Moon Express, Singularity U. Prev: Bing, Powerset, Mayfield, NASA, AI games pioneer.

ID: 17657037

http://www.barneypell.com 26-11-2008 19:20:24

16.16K Tweets

6.6K Followers

2.2K Following

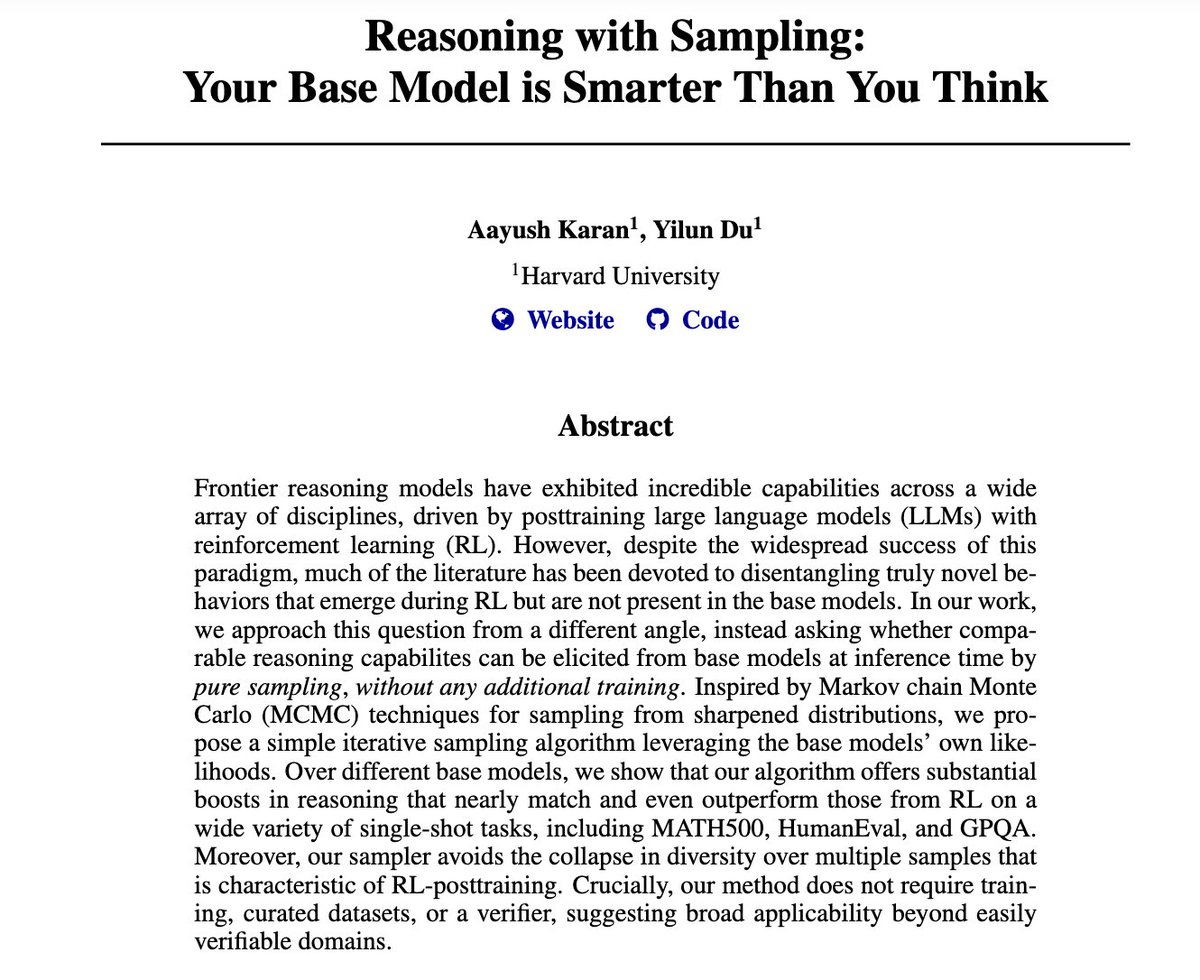

A beautiful paper from MIT, Harvard, Google DeepMind, and other top universities. It explains why Transformers fail at multi-digit multiplication and shows a simple bias that fixes it. The researchers trained two small Transformer models on 4-digit-by-4-digit multiplication. One used a special