Zhuo Xu

@drzhuoxu

Research Scientist @GoogleDeepMind, PhD @Berkeley, previously @Tsinghua_Uni.

ID: 1726882175386345472

https://drzhuoxu.github.io/ 21-11-2023 08:36:24

24 Tweets

137 Followers

85 Following

Humans are capable of vision-based 3D spatial estimation, so why shouldn't VLMs be able to do the same? We believe VLM architectures are capable enough but simply lack sufficient training data, and we supply such data via careful synthesis from 2D web images. Check out Boyuan Chen's post

Exciting to see this new partnership with Hugging Face to support developers building new AI applications.

Can we collect robot data without any robots? Introducing Universal Manipulation Interface (UMI): an open-source $400 system from Stanford University designed to democratize robot data collection. 0 teleop -> autonomously wash dishes (precise), toss (dynamic), and fold clothes (bimanual)

Achieving bimanual dexterity with RL + Sim2Real! toruowo.github.io/bimanual-twist/ TLDR - We train two robot hands to twist bottle lids using deep RL followed by sim-to-real. A single policy trained with simple simulated bottles can generalize to drastically different real-world objects.

Introducing 𝐀𝐋𝐎𝐇𝐀 𝐔𝐧𝐥𝐞𝐚𝐬𝐡𝐞𝐝 🌋 - Pushing the boundaries of dexterity with low-cost robots and AI. Google DeepMind Finally got to share some videos after a few months. Robots are fully autonomous, filmed in one continuous shot. Enjoy!

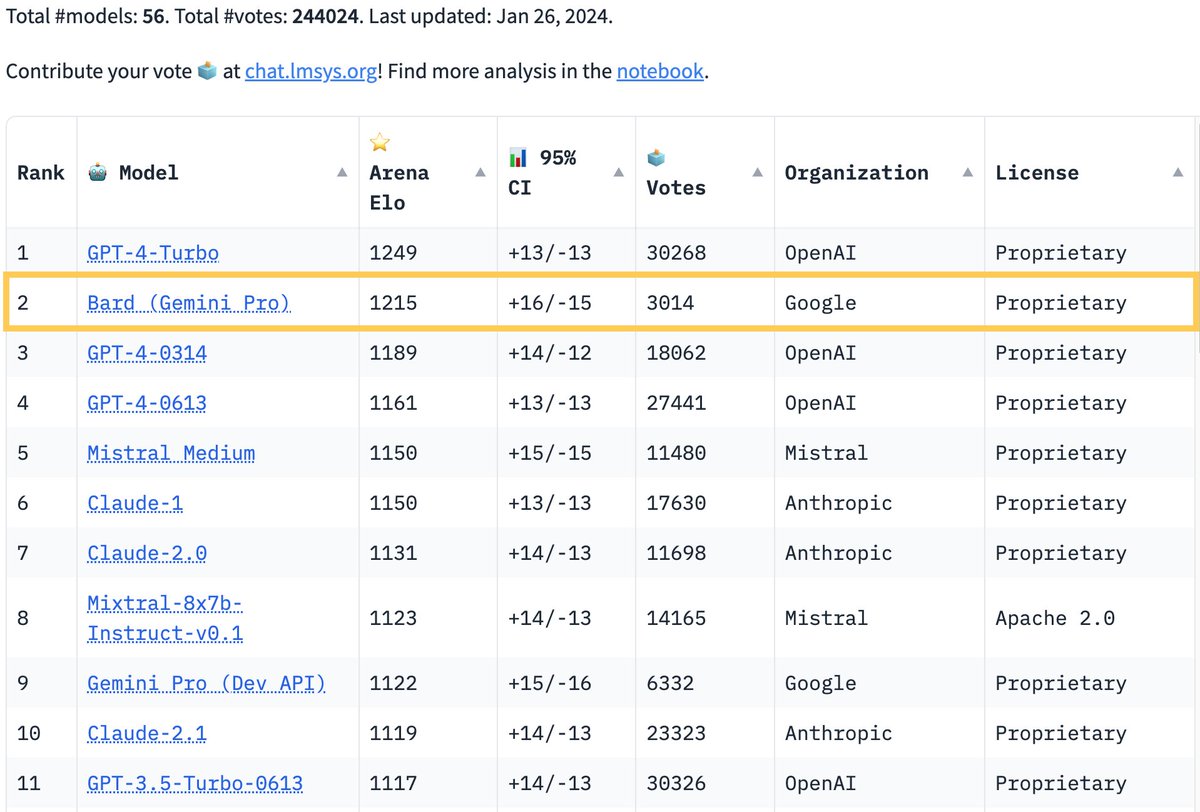

Exciting News from Chatbot Arena! Google DeepMind's new Gemini 1.5 Pro (Experimental 0801) has been tested in Arena for the past week, gathering over 12K community votes. For the first time, Google Gemini has claimed the #1 spot, surpassing GPT-4o/Claude-3.5 with an impressive

Congrats Pannag Sanketi and team! Had lots of fun playing with the robot!