David Marx (@digthatdata.bsky.social)

@digthatdata

Generative AI MLE, FOSS toolmaker, innovation catalyst @CoreWeave + @AiEleuther. bsky.app/profile/digtha…

ID: 2211601081

https://github.com/dmarx 24-11-2013 00:56:11

10,10K Tweet

4,4K Followers

1,1K Following

Because X has tended to censor discussion of social networks, I won't link directly, but look for this post to get an instant AI/ML feed, thanks to M A Osborne.

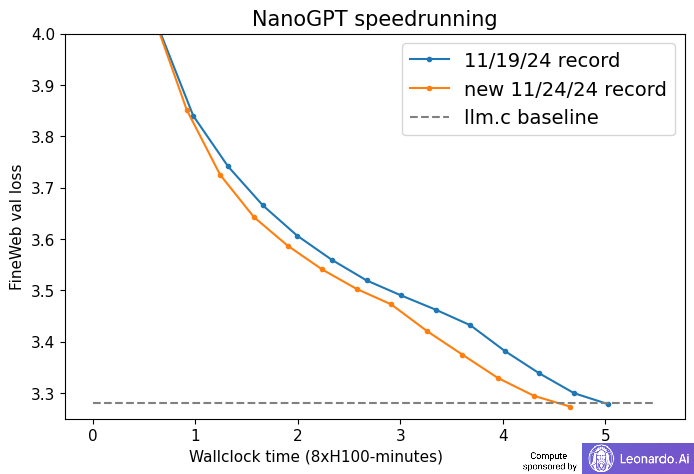

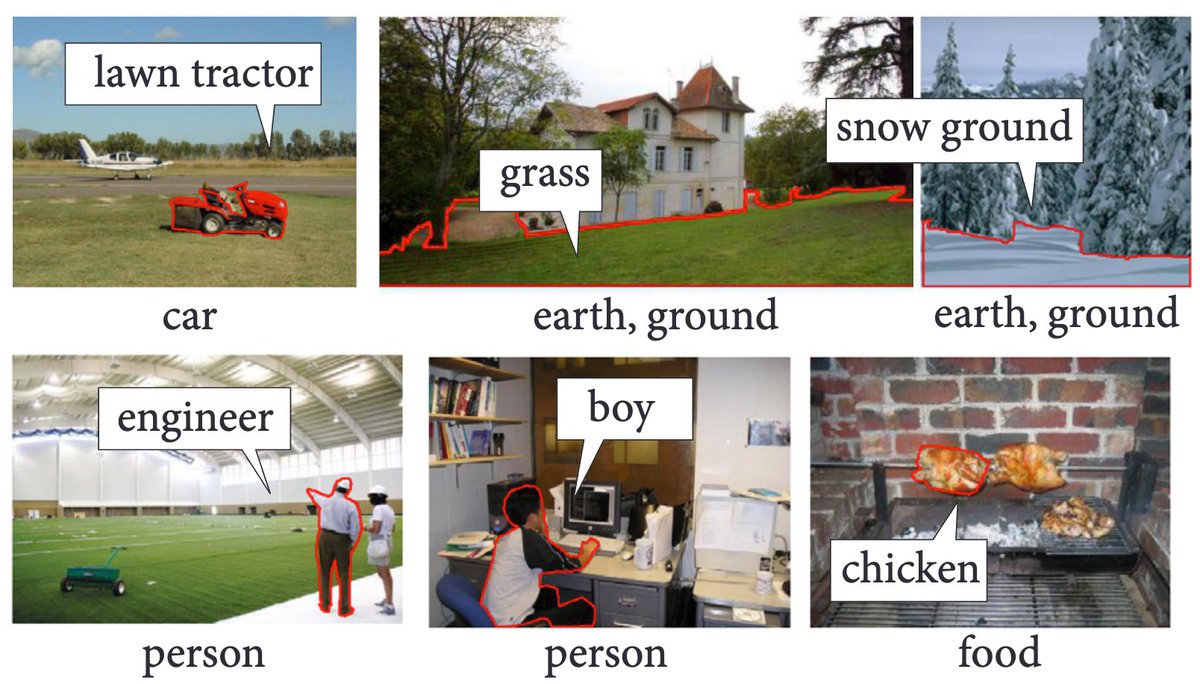

![Tanishq Kumar (@tanishqkumar07) on Twitter photo: [1/7] New paper alert! Heard about the BitNet hype or that Llama-3 is harder to quantize? Our new work studies both! We formulate scaling laws for precision, across both pre and post-training arxiv.org/pdf/2411.04330. TLDR; - Models become harder to post-train quantize as they](https://pbs.twimg.com/media/GcH1RBoWwAAQp1q.jpg)