Tim Bakker🔸

@timbbakker

Senior ML researcher at Qualcomm. Previously PhD ML with Max Welling at AMLab, UvA. AI safety, effective altruism, and everything Bayesian. Sings a lot.

ID: 1326525380082208768

http://tbbakker.nl 11-11-2020 14:01:21

146 Tweets

752 Followers

429 Following

Today, our Otto Barten⏸ and Tim Bakker🔸 are publishing a new op-ed in the Dutch newspaper NRC Handelsblad, in which we argue that we should prepare for AGI. What risks do we see, and what do we propose to mitigate them? nrc.nl/nieuws/2025/02…

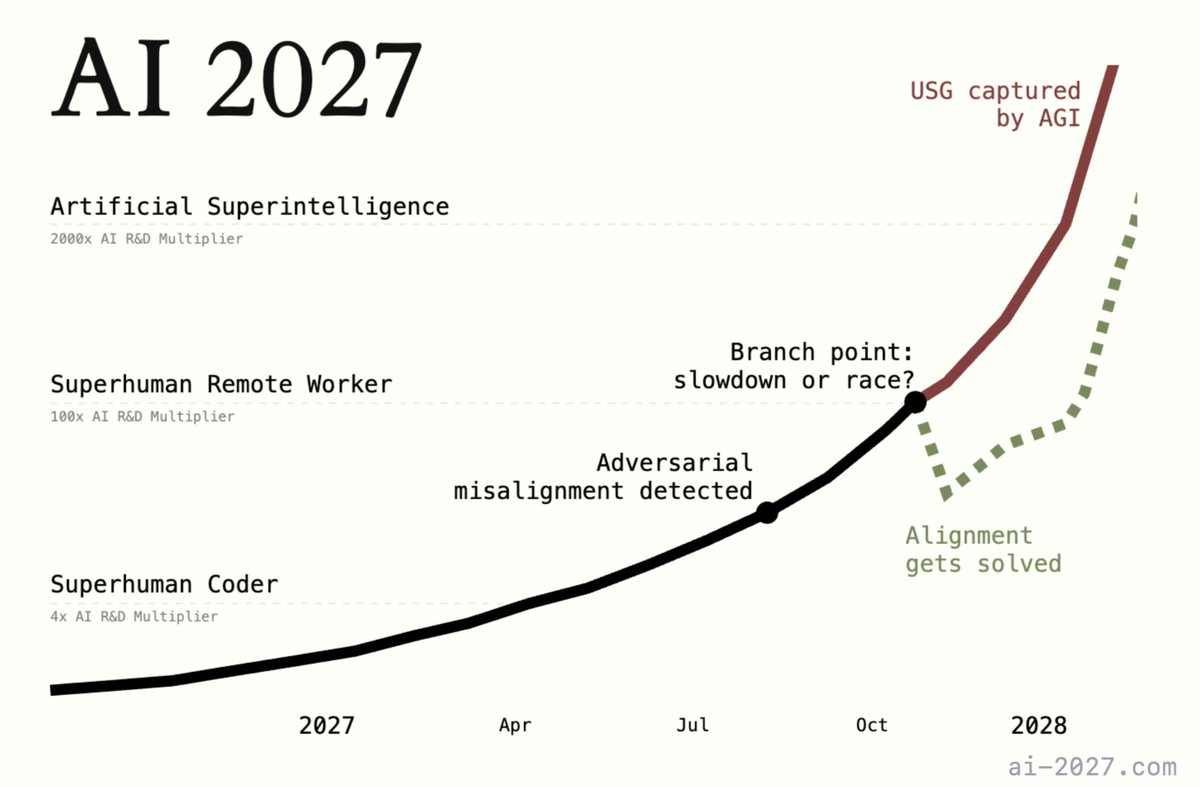

"How, exactly, could AI take over by 2027?" Introducing AI 2027: a deeply researched scenario forecast I wrote alongside Scott Alexander, Eli Lifland, and Thomas Larsen.

Ronny Chieng from The Daily Show talks about shrimp welfare. I unironically agree.