Maithri

@maithrivm

ID: 2440149387

27-03-2014 15:34:01

1.1K Tweets

227 Followers

267 Following

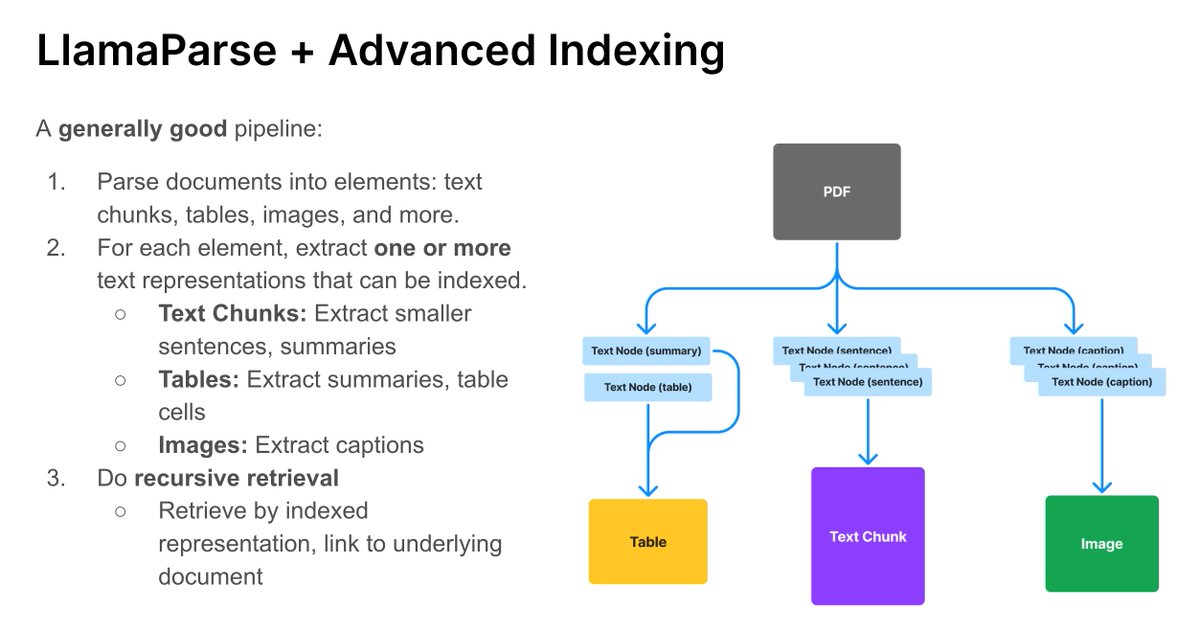

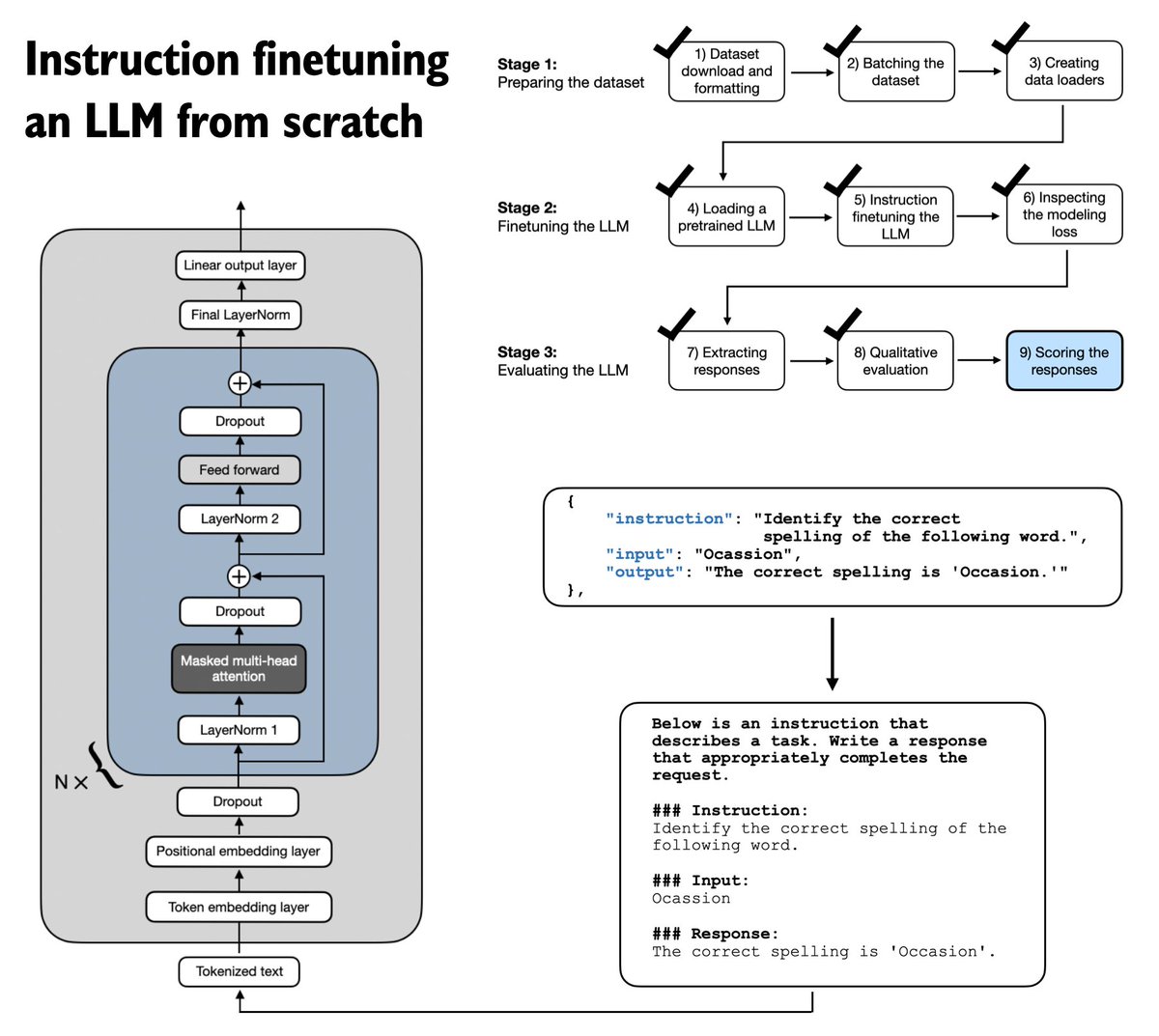

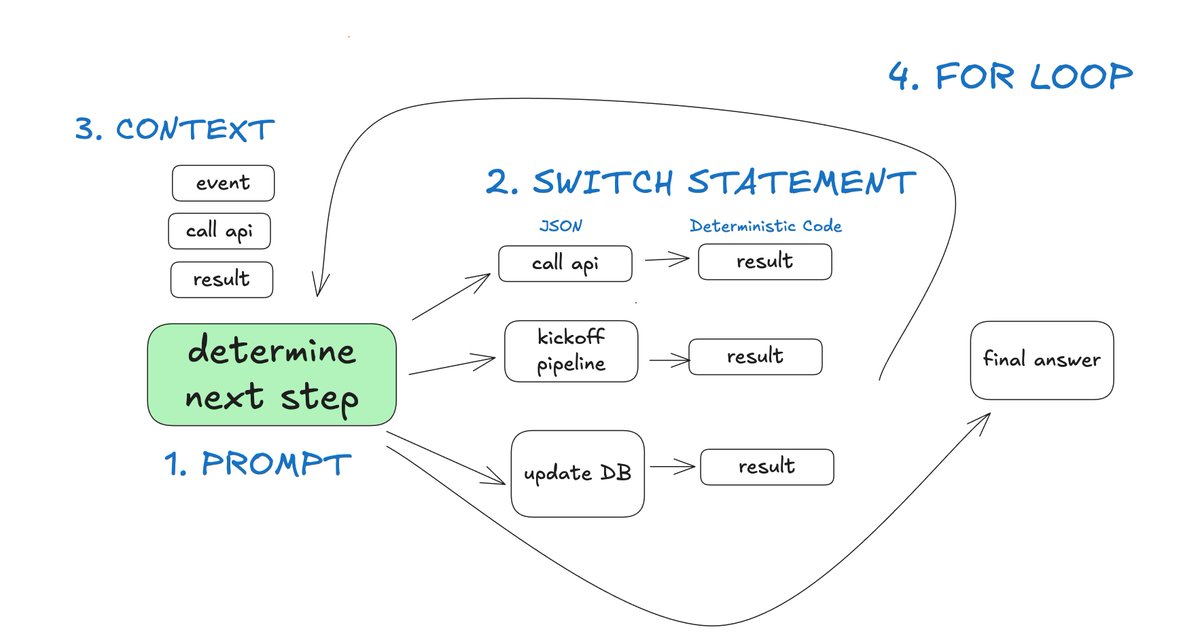

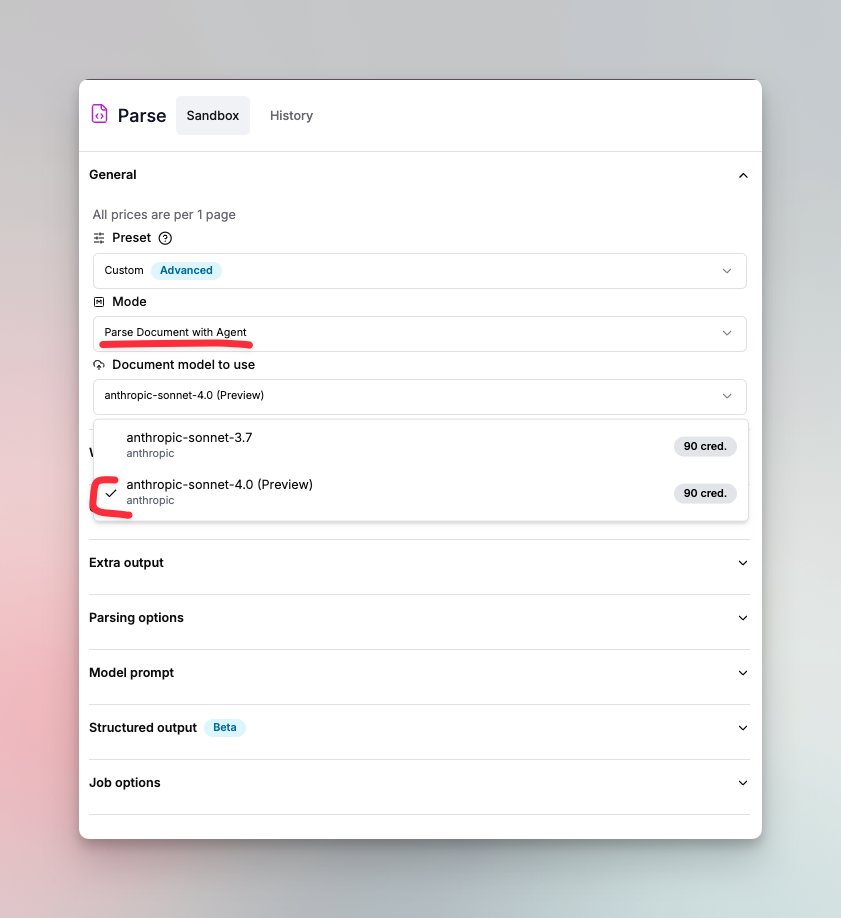

For folks looking to optimize their RAG using simple, proven approaches, check out these talks, notes, and slides we’ve made available for free from our course, thanks to Jo Kristian Bergum, Ben Clavié, and jason liu. (More coming soon.) parlance-labs.com/education/rag/

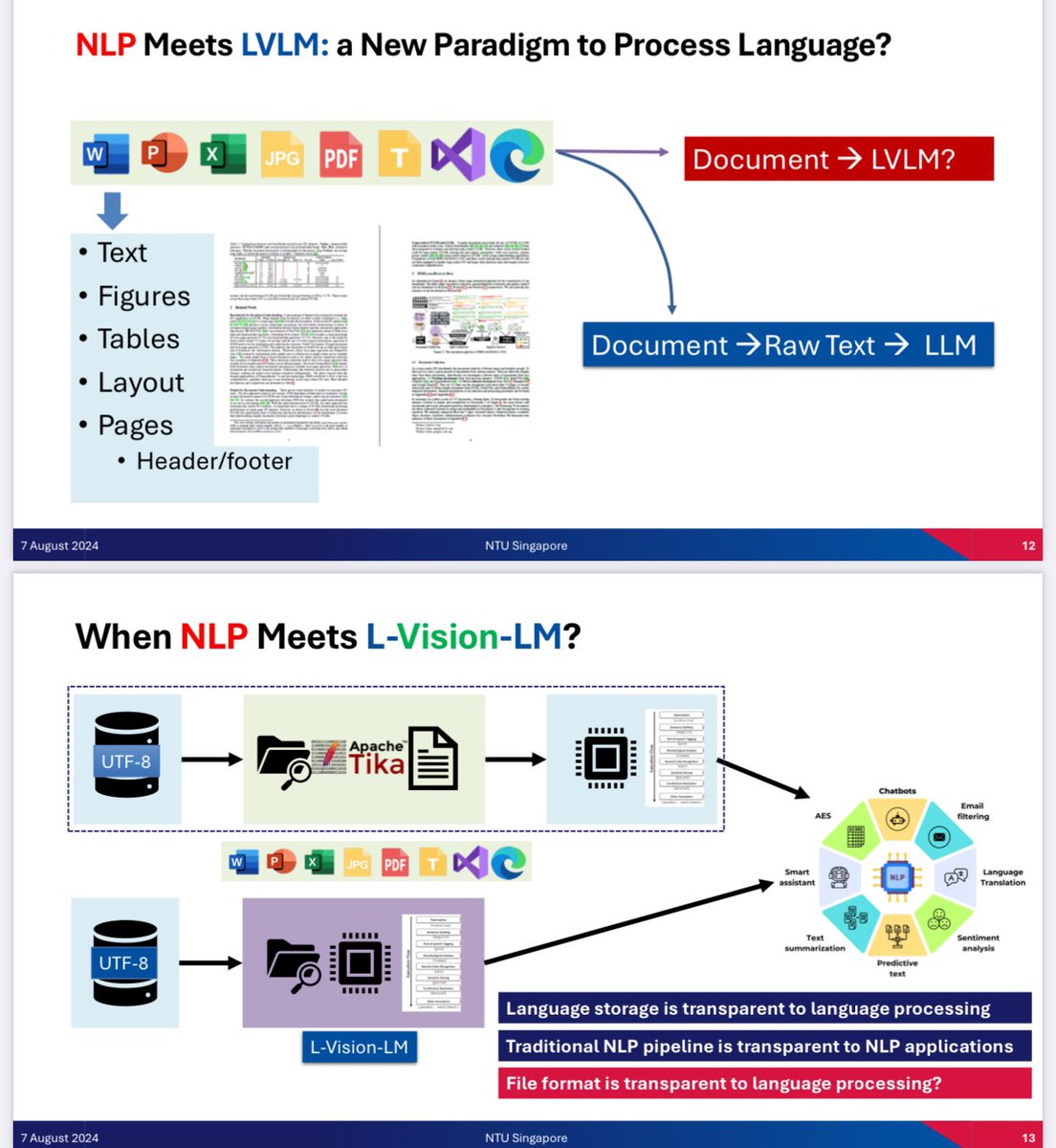

Join Manuel Faysse and me for the LlamaIndex Webinar this Friday, July 26th, at 9 AM PT! We'll be discussing our latest model for document retrieval: ColPali. Don't miss out! 👋🏼

1/ The Bloom-Bilal Rule: When bored and lacking ideas, keep walking until the day becomes interesting. (via @sahilbloom + Bilal Zaidi)