Inferact

@inferact

ID: 1998160192903745536

08-12-2025 22:38:37

58 Tweets

3.3K Followers

3 Following

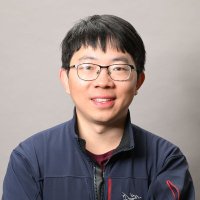

Woosuk Kwon Inferact vLLM Congratulations Woosuk Kwon and the vLLM team!

We're excited to lead the $150M seed round for Inferact and to support vLLM and the future of inference. The vLLM community is already thriving and will continue to be a critical inference backbone. Congrats Simon Mo, Woosuk Kwon, Kaichao You, and Roger Wang!

Congratulations Simon Mo, Woosuk Kwon, Roger Wang, Ion Stoica, Kaichao You, and the vLLM team.

Very excited to partner with Inferact in support of their mission to build the inference engine for AI. ZhenFund is proud to have been an early supporter of vLLM. Huge congrats to Simon Mo, Woosuk Kwon, Kaichao You, Roger Wang, Ion Stoica, and the rest of the founding

Woosuk Kwon Inferact vLLM What marvelous news!! Super congrats Woosuk Kwon, Simon Mo, Kaichao You, Roger Wang, Ion Stoica, and the Inferact team! Super excited for you guys and what's to come!!