Carl Vondrick

@cvondrick

Associate Professor at @Columbia. PC for @iclr_conf

ID: 870606756

http://www.cs.columbia.edu/~vondrick/ 09-10-2012 21:14:35

761 Tweets

6.6K Followers

574 Following

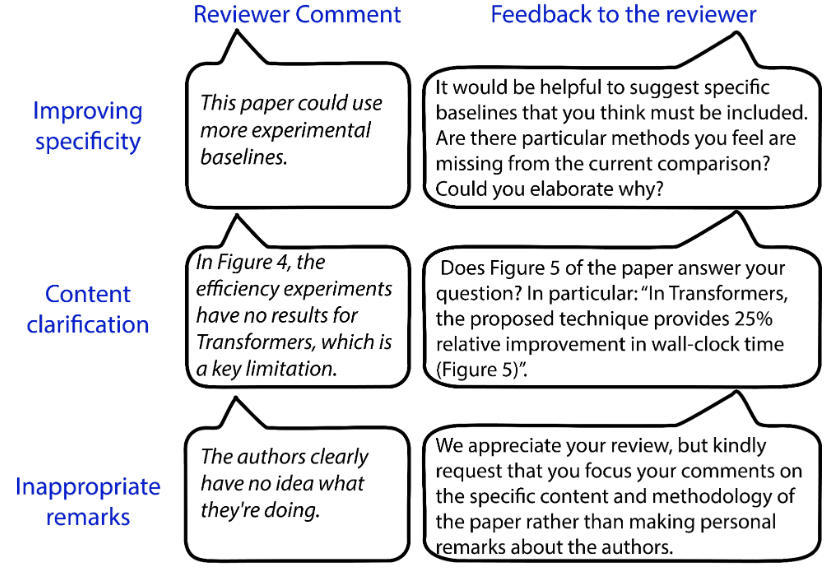

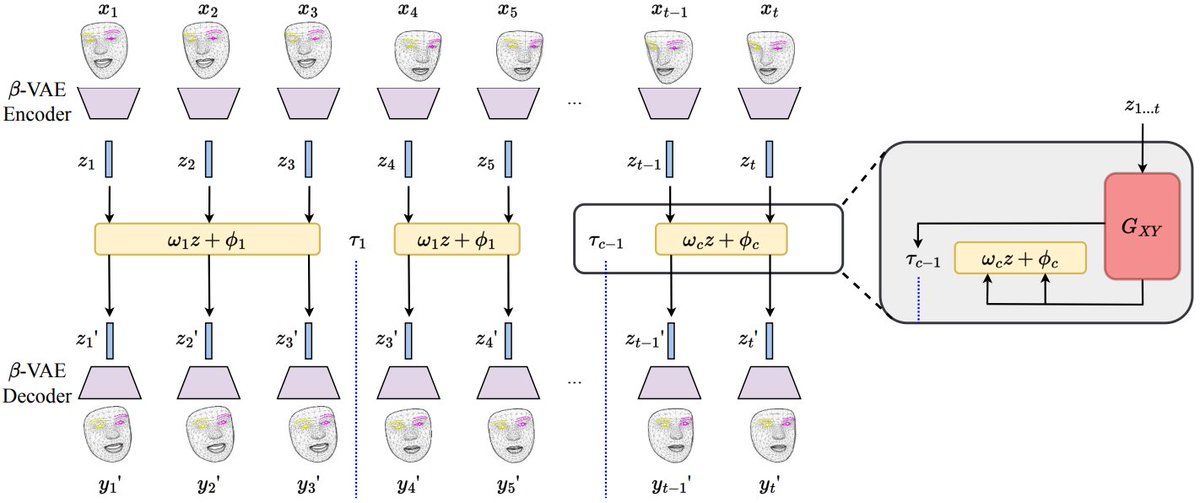

Have you wondered "How Video Meetings Change Your Expression?" We will be presenting our work at #ECCV2024 (European Conference on Computer Vision) tomorrow, Oct 1 @ 10:30AM (poster #195). Come say hi! facet.cs.columbia.edu Huge thanks to my amazing collaborators Utkarsh Mall, Purva Tendulkar, and Carl Vondrick.

At #ECCV2024, we presented Minimalist Vision with Freeform Pixels, a new vision paradigm that uses a small number of freeform pixels to solve lightweight vision tasks. We are honored to have received the Best Paper Award! Check out the project here: cave.cs.columbia.edu/projects/categ…