Abdulkadir Canatar

@canatar_a

Research Fellow @FlatironCCN. Theoretical Neuroscience, Machine Learning, Physics. Prev: @Harvard and @sabanciu

ID: 1504453316

11-06-2013 15:55:00

157 Tweets

369 Followers

667 Following

Congratulations to SueYeon Chung on being awarded the Klingenstein-Simons Fellowship Award in Neuroscience! SueYeon Chung is an associate research scientist & project leader at Flatiron CCN and an assistant professor at the NYU Center for Neural Science. Read more here: simonsfoundation.org/2023/07/05/neu…

How can you mitigate double descent without relying on task-tuned regularization? In my new preprint with Cengiz Pehlevan, we show that ensembling over models of different sizes does the trick. (1/n) arxiv.org/abs/2307.03176

🎉Happy to share an exciting new theory paper from our group, just published in Physical Review Letters as an Editors' Suggestion📖. It is also featured in Physics Magazine. Congratulations Albert Wakhloo on this milestone! Title: Linear Classification of Neural Manifolds with Correlated Variability

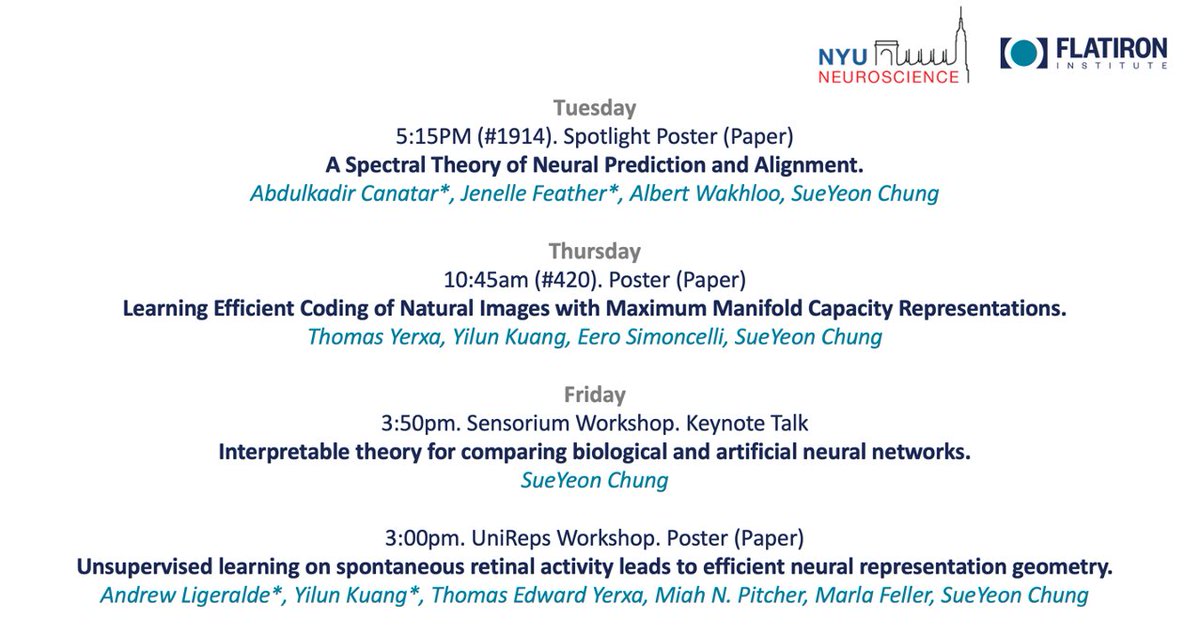

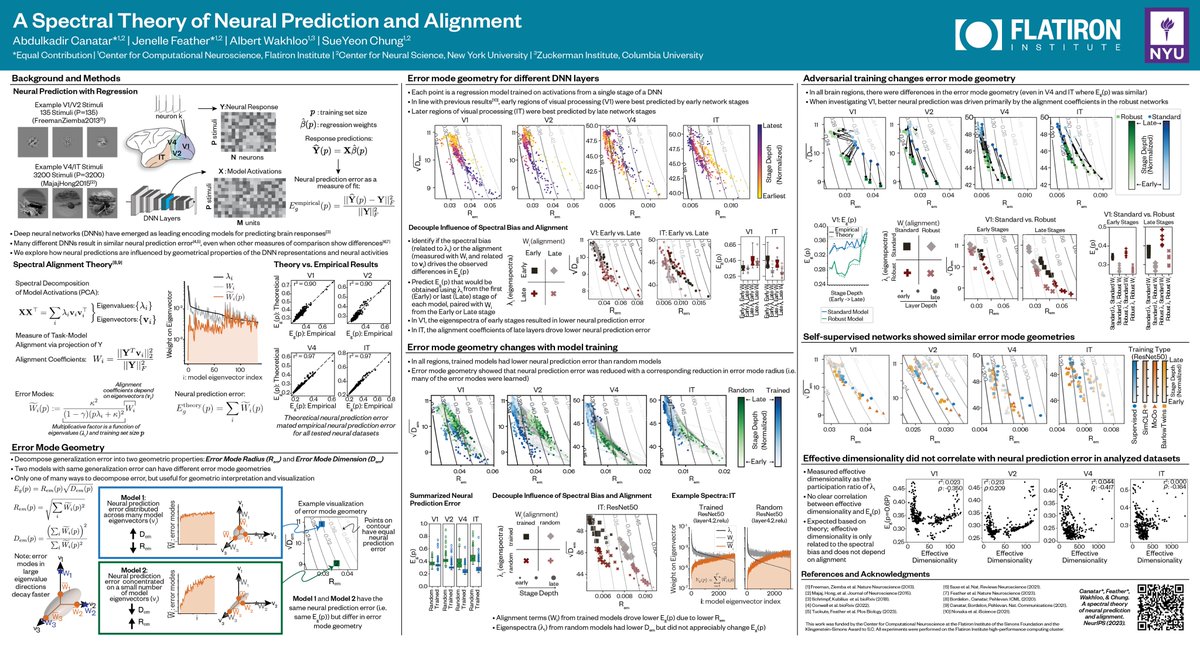

How do the spectral properties of a model influence neural prediction benchmarks? Check out our *new* paper, “A Spectral Theory of Neural Prediction and Alignment,” accepted to #NeurIPS2023 as a spotlight! arxiv.org/abs/2309.12821 w/ Abdulkadir Canatar SueYeon Chung Albert Wakhloo 🧵1/11

A biologically plausible neural network for online whitening with slow (synaptic plasticity) and fast (gain control) learning has been accepted as a spotlight at #NeurIPS2023! A collaboration with the Simoncelli group led by the brilliant Lyndon Duong and David Lipshutz arxiv.org/abs/2308.13633

Hot off the presses: ResNet hyperparameter transfer across depth and width! TL;DR: transfer for LR + schedules, momentum, L2 reg., etc. for wide ResNets and ViTs, with and without BatchNorm/LayerNorm. w/ Lorenzo Noci Mufan (Bill) Li Boris Hanin Cengiz Pehlevan arxiv.org/abs/2309.16620

Check out my most recent paper on the origins of homochirality—just published in Nature Communications today! #homochirality #chirality This paper marks the conclusion of a four-part series on this subject. nature.com/articles/s4146…

A great piece by the Simons Foundation about our theory on the capacity of neural manifolds with correlated variability, led by Albert Wakhloo. Many thanks to Mara Johnson-Groh & Lucy Reading-Ikkanda.

Very happy to share this work at NeurIPS 2023 with Nikhil Vyas, Blake Bordelon ☕️🧪👨‍💻, Sab Sainathan, @DepenKenpachi, and Cengiz Pehlevan on the consistent behavior of feature-learning networks across large widths arxiv.org/abs/2305.18411. What is large-width consistency? Read on! 1/n

At #NeurIPS2023? Interested in brains, neural networks, and geometry? Come by our **Spotlight Poster** Tuesday @ 5:15PM (#1914) on A Spectral Theory of Neural Prediction and Alignment. w/ Abdulkadir Canatar SueYeon Chung Albert Wakhloo

👋Check out the latest findings from our lab at #COSYNE24 (CosyneMeeting). Thread & links to preprints to follow (1/n)🧵👇

[1/n] Thrilled that this project with Jacob Zavatone-Veth and @cpehlevan is finally out! Our group has spent a lot of time studying high-dimensional regression and its connections to scaling laws. All our results follow easily from a single central theorem 🧵 arxiv.org/abs/2405.00592