Nicola Branchini

@branchini_nic

🇮🇹 3rd yr Stats PhD @EdinUniMaths 🏴.🤔💭 about reliable uncertainty quantification. Interested in sampling and measure transport methodologies.

ID: 3275439755

http://branchini.fun/about 17-05-2015 14:43:47

1.1K Tweets

738 Followers

2.2K Following

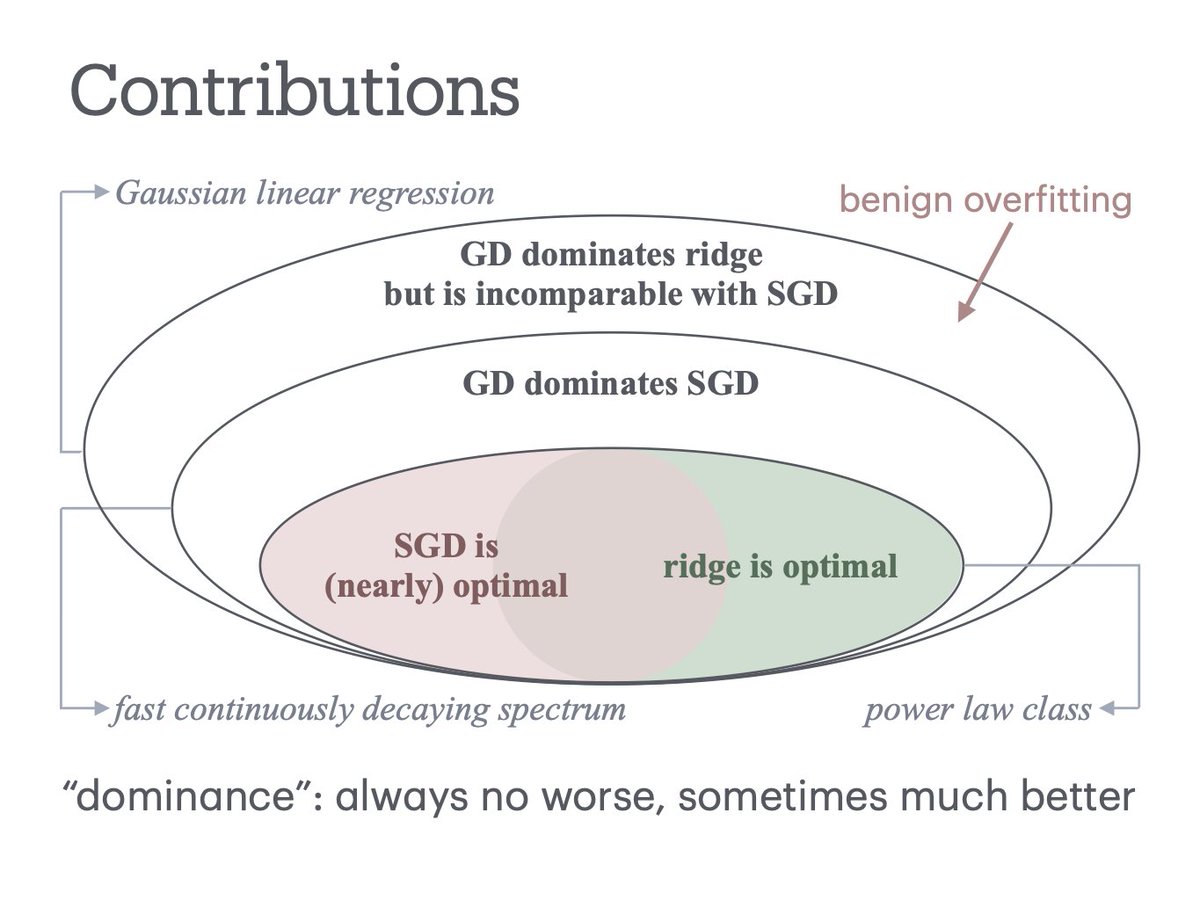

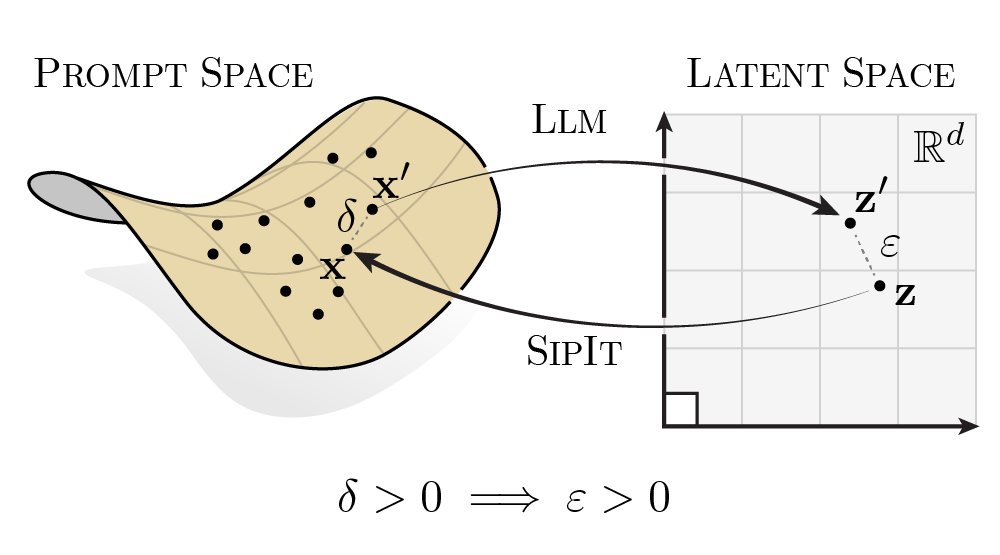

One of our three papers in “Frontiers in Probabilistic Inference” @ NeurIPS’25, along with arxiv.org/abs/2509.26364 and arxiv.org/abs/2510.01159. Pleasure to work with the brilliant tamogashev on all of them!