Benjamin Hilton

@benjamin_hilton

Semi-informed about economics, physics and governments. Views my own.

ID: 400251445

28-10-2011 18:46:21

1.1K Tweets

3.3K Followers

858 Following

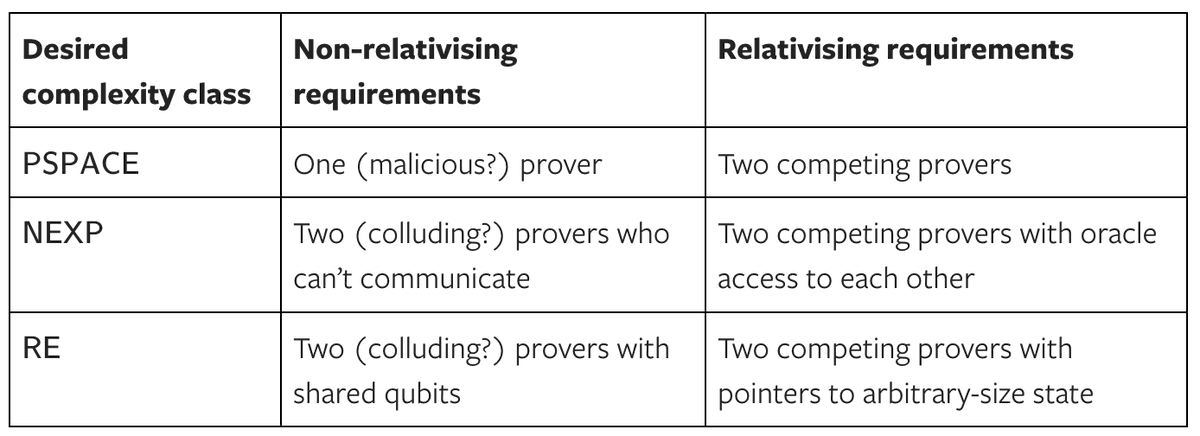

What debate structure will always let an honest debater win? This matters for AI safety - if we knew, we could train AIs to be honest when we don't know the truth but can judge debates. New paper by Jonah Brown-Cohen & Geoffrey Irving proposes an answer: arxiv.org/abs/2506.13609

This is a great initiative that brings together a range of resources in order to do alignment research at scale. Another good example of creative project funds from the AI Security Institute that draws on a range of different partners.

Very excited to see this come out, and to be able to support! Beyond the funding, the RfP itself is a valuable resource & a great effort by the AI Security Institute team! It shows there is a lot of valuable, scientifically rigorous work to be done.