Arnav Singhvi

@arnav_thebigman

Working on DSPy under the mentorship of Stanford Ph.D. student Omar Khattab @lateinteraction

ID: 741014492687925248

https://arnavsinghvi11.github.io/ 09-06-2016 21:09:57

106 Tweets

653 Followers

345 Following

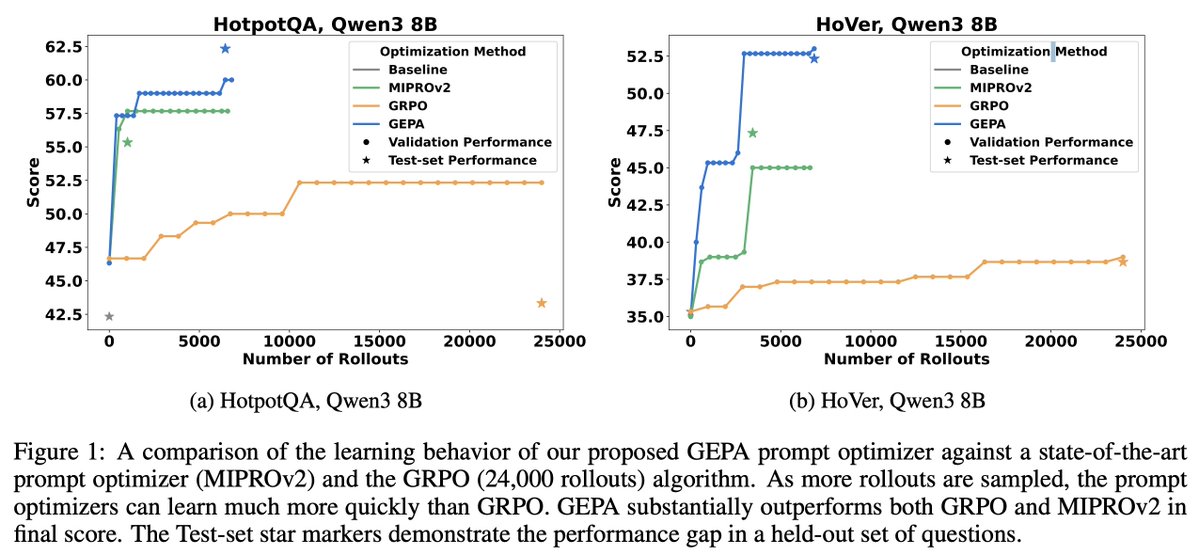

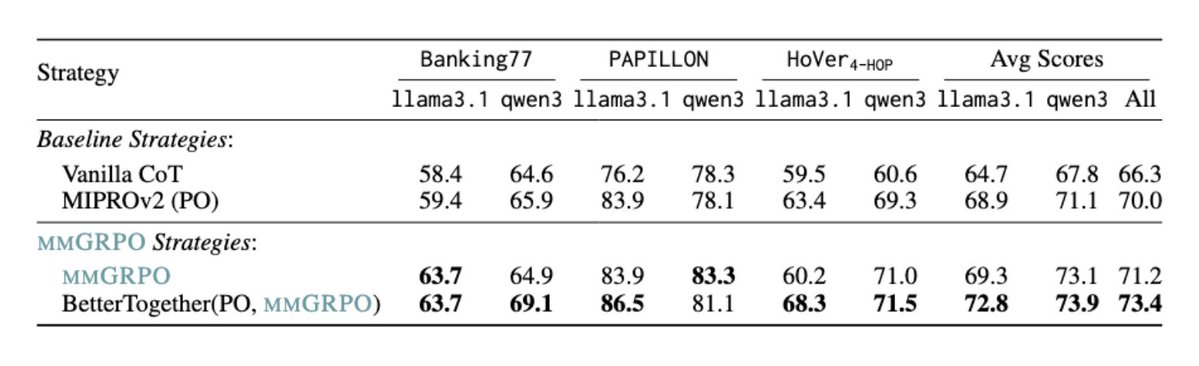

Should you RL your compound AI system or optimize its prompts? We think both! 🤯 A short preview of work co-led with Noah Ziems and Lakshya A Agrawal!👇

Automated prompt optimization (GEPA) can push open-source models beyond frontier performance on enterprise tasks, at a fraction of the cost! 🔑 Key results from our research at Databricks Mosaic Research: 1⃣ gpt-oss-120b + GEPA beats Claude Opus 4.1 on Information Extraction (+2.2 points)

I'm getting really excited about prompt optimization as a cost vs. quality sweet spot for enterprises. These results from Ivan Zhou, Arnav Singhvi, Krista Opsahl-Ong, and team make a compelling case.

The DSPy community is growing in Boston! ☘️🔥 We are beyond excited to be hosting a DSPy meetup on October 15th! Come meet DSPy and AI builders and learn from talks by Omar Khattab, Noah Ziems, and Vikram Shenoy! See you in

If you've been trying to figure out DSPy - the automatic prompt optimization system - this talk by Drew Breunig is the clearest explanation I've seen yet, with a very useful real-world case study youtube.com/watch?v=I9Ztkg… My notes here: simonwillison.net/2025/Oct/4/dre…

Simon Willison, Drew Breunig: Have heard great things about DSPy plus GEPA, which is an even stronger prompt optimizer than MIPROv2. Repo and (fascinating) examples of generated prompts at github.com/gepa-ai/gepa and paper at arxiv.org/abs/2507.19457