Pablo Contreras Kallens

@pcontrerask

Ph.D. candidate, Cornell Psychology in the Cognitive Science of Language lab. Serious account.

ID: 1310578687553789953

http://www.contreraskallens.com 28-09-2020 13:55:00

89 Tweets

128 Followers

56 Following

Another day, another opinion essay about ChatGPT in The New York Times. This time, Noam Chomsky and colleagues weigh in on the shortcomings of language models. Unfortunately, this is not the nuanced discussion one could have hoped for. 🧵 1/ nytimes.com/2023/03/08/opi…

Pablo Contreras Kallens Ross And for those who’d like a less academic take on the issues in this article, take a look at our short piece in The Conversation U.S.: theconversation.com/ai-is-changing…

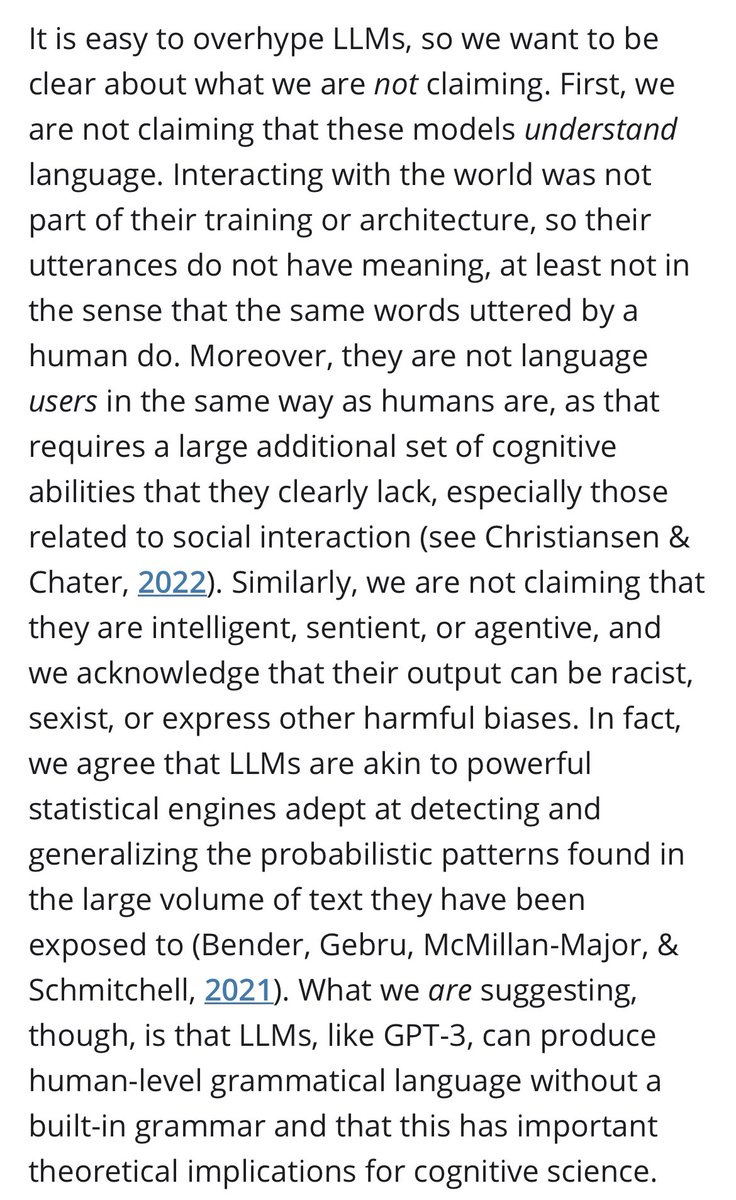

For the Friday evening crowd still mulling over Chomsky’s op-ed, here’s a key passage from our recent letter in CogSci Society. There are absolutely some things LLMs cannot do. But what they can do, they do very well - and that demands attention. onlinelibrary.wiley.com/share/6REPM3XF…

Information density as a predictor of communication dynamics: Spotlight by Gary Lupyan, Pablo Contreras Kallens, & Rick Dale on recent @NatureHumanBehav work by Pete Aceves & James Evans authors.elsevier.com/a/1ixK34sIRvTA…

Cognitive Science of Language Lab alumnus Pablo Contreras Kallens talks about how feedback is crucial for getting large language models to produce more human-like language output, such as making similar agreement errors and being sensitive to subtle semantic distinctions.

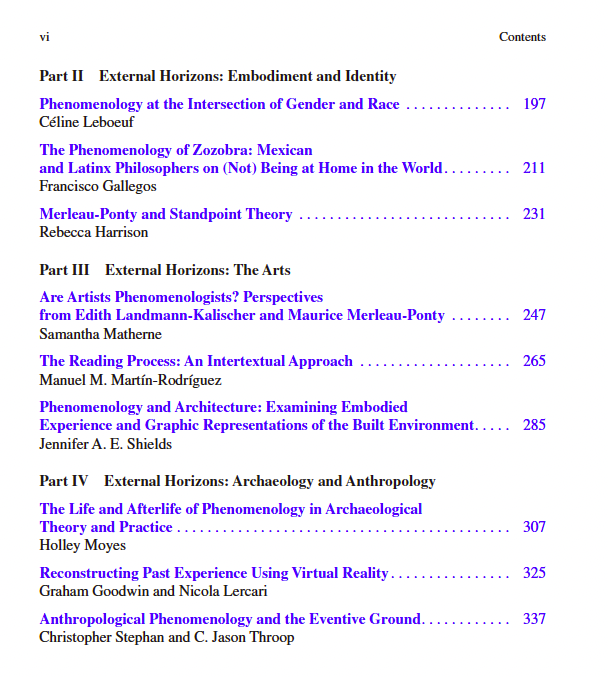

I've been having a nice time talking to Deniz Cem Önduygu about maps and graphs of philosophical discourse, and thought I'd repost my bibliometric map of the phenomenology literature. Dots are authors, links are citations, and colors are clusters.