linker

@keylinker

Computer vision/deep learning. Previously: led the AI part @ dealicious, led the AI research team @ oddconcepts, researcher @ naver (naver search & naverlabs).

ID: 1667766445

13-08-2013 12:59:09

6.6K Tweets

193 Followers

927 Following

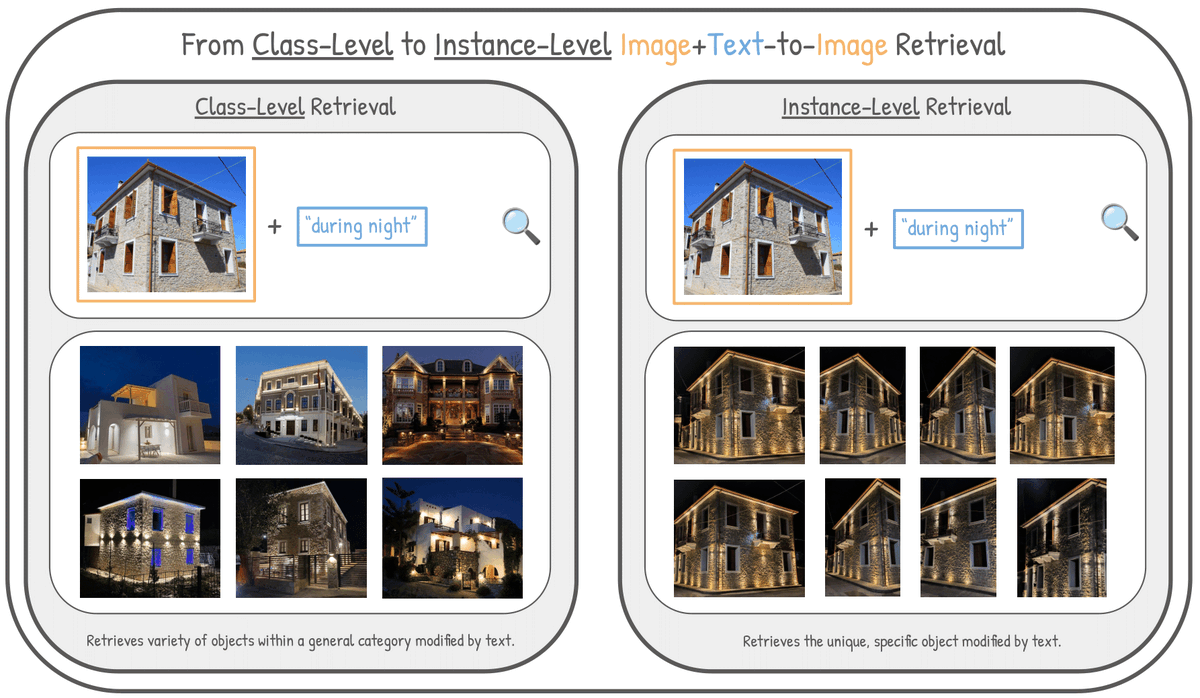

Instance-Level Composed Image Retrieval, by Bill Psomas, George Retsinas, Nikos Efthymiadis, Panagiotis Filntisis, Yannis Avrithis, Petros Maragos, Ondrej Chum, Giorgos Tolias. tl;dr: condition-based retrieval (and a dataset), with conditions such as old photo / sunset / night / aerial / model. arxiv.org/abs/2510.25387
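Composed image retrieval queries with a reference image plus a text condition. Below is a minimal sketch of a common late-fusion baseline, not the method of the paper above. It assumes image and text embeddings already share one space (as CLIP embeddings do); the helper names, the mixing weight `alpha`, and the toy 4-d vectors are all hypothetical.

```python
import numpy as np

def l2_normalize(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Scale vectors to unit L2 norm so dot products equal cosine similarity."""
    return x / np.linalg.norm(x, axis=axis, keepdims=True)

def composed_query(image_emb: np.ndarray, text_emb: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Late fusion: weighted sum of normalized image and text embeddings.

    alpha is a hypothetical mixing weight (0 = text only, 1 = image only).
    """
    q = alpha * l2_normalize(image_emb) + (1.0 - alpha) * l2_normalize(text_emb)
    return l2_normalize(q)

def retrieve(query: np.ndarray, database: np.ndarray, k: int = 5) -> np.ndarray:
    """Return indices of the k database items with the highest cosine similarity."""
    sims = l2_normalize(database) @ query
    return np.argsort(-sims, kind="stable")[:k]

# Hypothetical toy embeddings: dims 0-1 encode the landmark, dims 2-3 the condition.
image = np.array([1.0, 0.0, 0.0, 0.0])   # query photo of landmark A
text = np.array([0.0, 0.0, 1.0, 0.0])    # condition text, e.g. "at night"
db = np.array([
    [1.0, 0.0, 0.0, 1.0],  # landmark A, daytime
    [1.0, 0.0, 1.0, 0.0],  # landmark A, at night
    [0.0, 1.0, 1.0, 0.0],  # landmark B, at night
])
ranking = retrieve(composed_query(image, text), db, k=3)
print(ranking)  # row 1 (landmark A at night) ranks first
```

The fused query matches the right instance under the right condition, while rows that satisfy only one of the two (same landmark by day, or a different landmark at night) score lower.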

This is a phenomenal video by Jia-Bin Huang explaining seminal papers in computer vision, including CLIP, SimCLR, and DINO v1/v2/v3, in 15 minutes. DINO is actually a brilliant idea; I found the choice of 65k neurons in the output head pretty interesting.
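The 65k figure is DINO's projection-head output dimension (65,536 in DINO v1). A minimal NumPy sketch of the self-distillation loss over that output follows. It assumes a single student/teacher view pair and covers the forward pass only: no gradients, no EMA teacher update, no multi-crop. The function names are hypothetical. The temperatures (student 0.1, teacher 0.04) and the centering momentum (0.9) are assumptions taken from the DINO v1 defaults as I understand them.

```python
import numpy as np

OUT_DIM = 65536  # DINO v1 projection-head output dimension (the "65k neurons")

def softmax(x: np.ndarray, temp: float) -> np.ndarray:
    z = x / temp
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def log_softmax(x: np.ndarray, temp: float) -> np.ndarray:
    z = x / temp
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def dino_loss(student_out, teacher_out, center, student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between the centered, sharpened teacher and the student distribution."""
    p_t = softmax(teacher_out - center, teacher_temp)  # center, then sharpen with a low temperature
    log_p_s = log_softmax(student_out, student_temp)
    return float(-(p_t * log_p_s).sum(axis=-1).mean())

def update_center(center, teacher_out, momentum=0.9):
    """Exponential moving average of the batch-mean teacher output."""
    return momentum * center + (1.0 - momentum) * teacher_out.mean(axis=0)

rng = np.random.default_rng(0)
student = rng.normal(size=(8, OUT_DIM))  # hypothetical head outputs for a batch of 8
teacher = rng.normal(size=(8, OUT_DIM))
center = np.zeros(OUT_DIM)

loss = dino_loss(student, teacher, center)
center = update_center(center, teacher)
print(f"loss = {loss:.3f}")
```

Centering keeps the teacher from collapsing onto a single output dimension, while the low teacher temperature keeps it from collapsing to a uniform distribution; DINO relies on the two together to avoid collapse.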

This work was done with Delong Chen (陈德龙) Théo Moutakanni Willy Jade Lei Yu Tejaswi Kasarla Allen Bolourchi Yann LeCun Pascale Fung. Paper: arxiv.org/abs/2512.10942 (8/8)

DailyPapers (@huggingpapers): Black Forest Labs just released a quantized FLUX.2 [dev] on Hugging Face: 32B-parameter image generation and editing with multi-reference support for up to 10 images, 4MP resolution, and optimized text rendering, now optimized for NVIDIA GPUs.

![DailyPapers (@huggingpapers) on Twitter photo](https://pbs.twimg.com/media/G-COnxMXIAAv8hH.jpg)