An-Chieh Cheng

@anjjei

PhD student @UCSanDiego; prev intern: @AdobeResearch; I love 3D vision.

ID: 203924729

http://anjiecheng.me

17-10-2010 14:20:00

226 Tweets

527 Followers

355 Following

Test-Time Training (TTT) now works for video generation! We can directly generate a complete one-minute video with strong temporal and spatial coherence. We used our model to create new episodes of Tom and Jerry (my favorite cartoon as a child). test-time-training.github.io/video-dit/

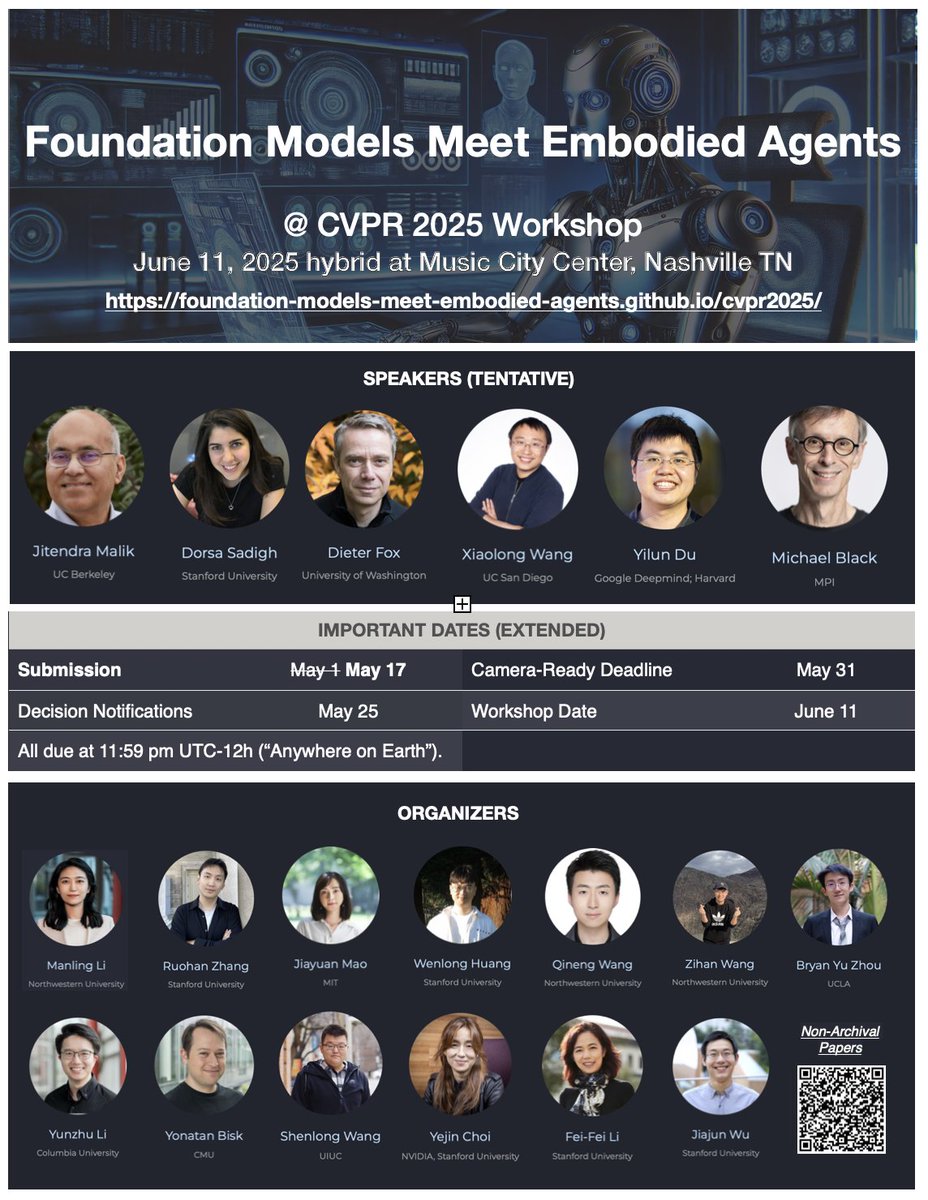

🚨CVPR Workshop on Foundation Models + Embodied Agents: we are extending the non-archival submission deadline to May 17th, after the NeurIPS deadline! 🌐Website: …models-meet-embodied-agents.github.io/cvpr2025/ 📜OpenReview: openreview.net/group?id=thecv… 👥Program committee sign-up form: forms.gle/bL17vmr7ZbybxE… ✉️Mailing list:

Full episode dropping soon! Geeking out with Roger Qiu on "Humanoid Policy ~ Human Policy" (human-as-robot.github.io). Co-hosted by Chris Paxton & Michael Cho - Rbt/Acc