Alon Jacovi

@alon_jacovi

ML/NLP, XAI @google. previously: @biunlp @allen_ai @IBMResearch @RIKEN_AIP

ID: 1101220947607146497

https://alonjacovi.github.io/

28-02-2019 20:41:48

666 Tweets

1.1K Followers

433 Following

Do you have a "tell" when you are about to lie? We find that LLMs have "tells" in their internal representations which allow estimating how knowledgeable a model is about an entity 𝘣𝘦𝘧𝘰𝘳𝘦 it generates even a single token. Paper: arxiv.org/abs/2406.12673… 🧵

Daniela Gottesman

new models have an amazingly long context. but can we actually tell how well they deal with it? 🚨🚨NEW PAPER ALERT🚨🚨 with Alon Jacovi, lovodkin93, Aviya Maimon, Ido Dagan and Reut Tsarfaty arXiv: arxiv.org/abs/2407.00402… 1/🧵

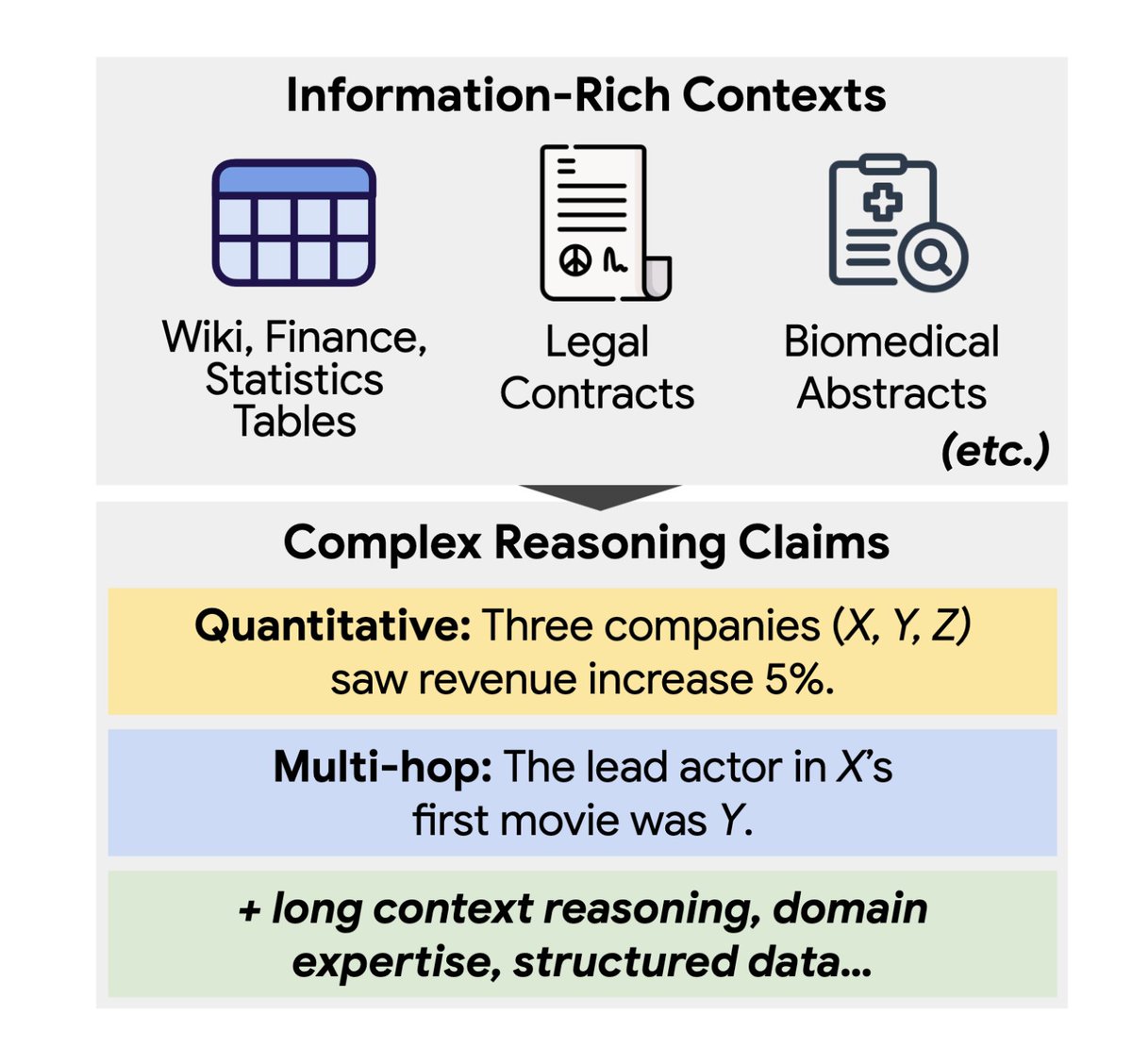

Cool dataset from Alon Jacovi et al.! Similar to NLI: you're given a context and a claim and need to confirm or deny the claim. But the contexts are ~3.5K tokens, and the claims require different skills such as multi-hop or table reasoning. 70B+ models perform close to random.

The main conference has ended, but ACL 2024 is not over. Tomorrow, Friday the 16th, come to the CONDA Workshop (Lotus Suite 4) for amazing talks by Anna Rogers, Jesse Dodge, @dieuwke, and MMitchell. Schedule: conda-workshop.github.io

The Data Contamination Workshop is tomorrow at #ACL2024NLP! Come listen to MMitchell, Dieuwke Hupkes, Jesse Dodge, Anna Rogers, and a collection of amazing data contamination papers at Lotus Suite 4! Program: conda-workshop.github.io