Yossi Adi

@adiyosslc

Assistant Professor @ The Hebrew University of Jerusalem, CSE; Research Scientist @ Meta AI (FAIR); Drummer @ Lucille Crew 🤖🥁🎤🎧🌊

ID: 744846674384789504

https://www.cs.huji.ac.il/~adiyoss/

20-06-2016 10:57:40

221 Tweets

845 Followers

356 Following

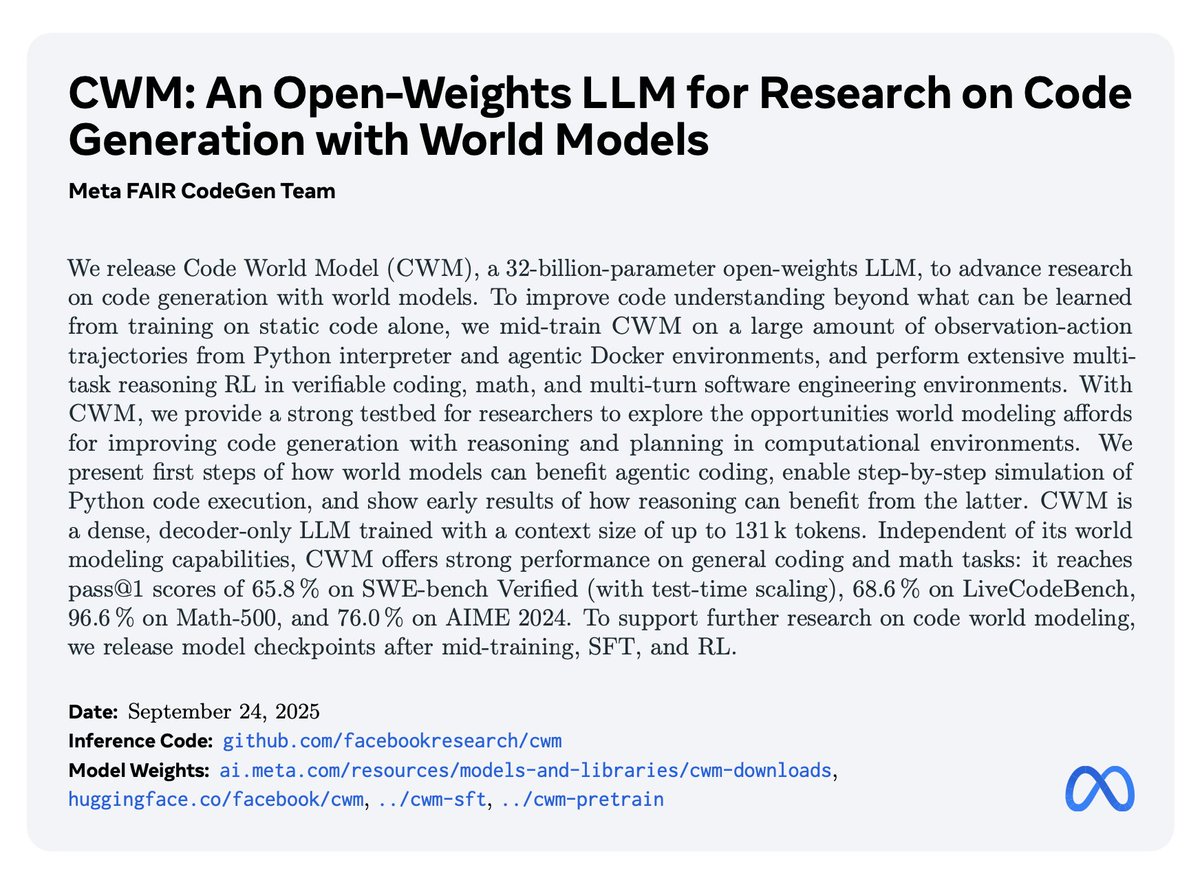

Incredibly excited by this work, congrats Gabriel Synnaeve + AI at Meta codegen! 32b model that hits 65.8% on SWE-bench w/ TTS is incredible. A year ago that would've been unimaginable to me. Section 2 is a great read - resonates so much w/ what SWE-smith is trying to achieve in the open.

Kilian Lieret carlos Ofir Press Karthik Narasimhan Ludwig Schmidt Diyi Yang This table really puts things into perspective for me. SWE-smith has 250 repos (and 250 images, 1 per repo), and 26k OS trajectories (and counting). And these numbers were 10x more than any existing dataset at release. But CWM goes **another** order of magnitude larger 🤯

🚨 Attention aspiring PhD students 🚨 Meta / FAIR is looking for candidates for a joint academic/industry PhD! Keywords: AI for Math & Code. LLMs, RL, formal and informal reasoning. You will be co-advised by prof. Amaury Hayat from École des Ponts and yours truly. You'll have