Adam Zweiger

@adamzweiger

ID: 1571391036416724992

18-09-2022 06:50:11

0 Tweet

14 Followers

197 Following

Re Scott Alexander on the human analogue of LLM hallucination: There is simply no human equivalent to what GPT-4 does when asked "What does GRPO stand for?" or even "What is the capital of France?" with nothing else provided in context. The closest thing is someone

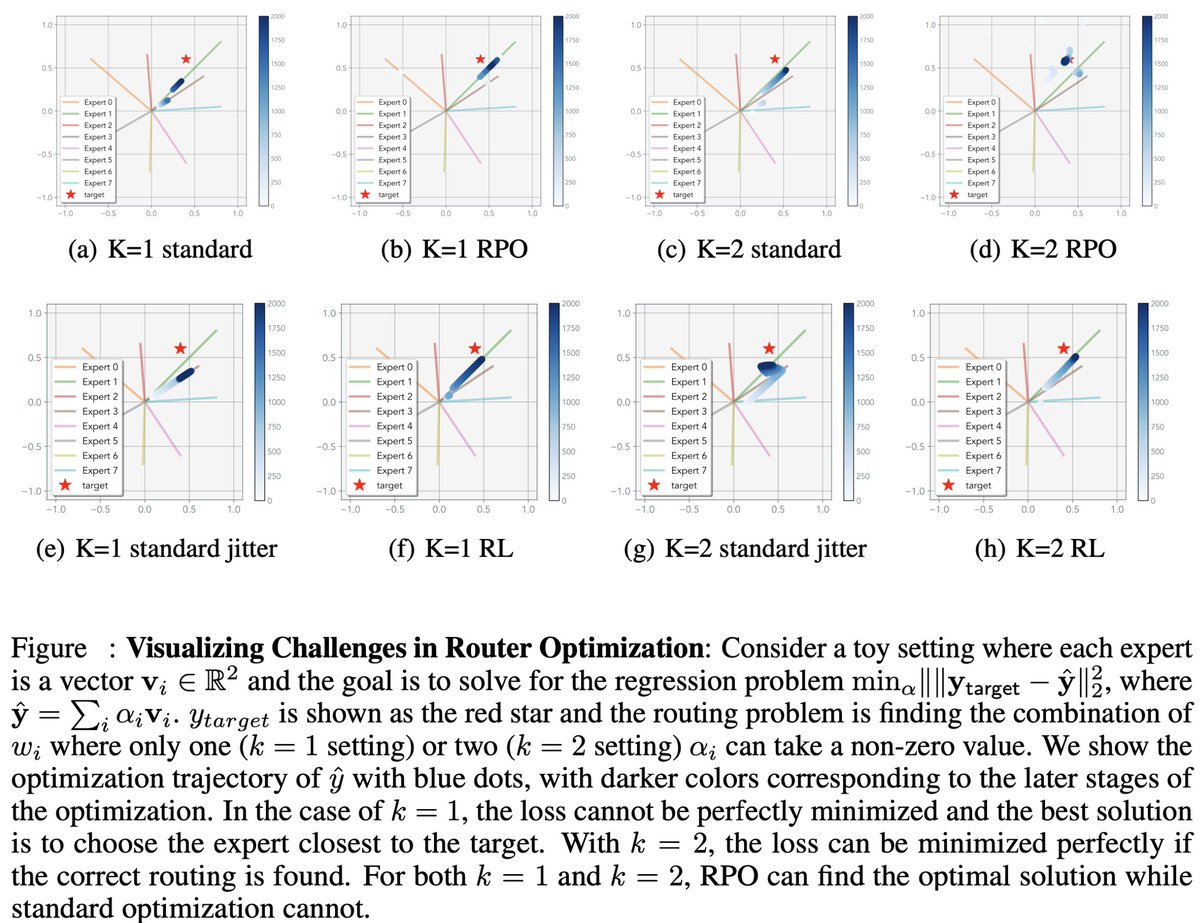

MoE routers are trained a bit strangely, but things still seem to work. minyoung huh (jacob) and I got curious about combining specialized experts at test time through routing… and ended up deep in the weeds of MoE optimization. Here's a blog post! jyopari.github.io/posts/peculiar…