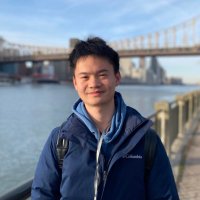

Wenli Xiao

@_wenlixiao

Graduate Researcher @CMU_Robotics | Intern at GEAR Lab @NvidiaAI | I build AI brains for robots

ID: 1160530286297305093

http://wenlixiao-cs.github.io

11-08-2019 12:35:56

87 Tweets

1.1K Followers

437 Following

Our new system trains humanoid robots using data from cell phone videos, enabling skills such as climbing stairs and sitting on chairs in a single policy (w/ Hongsuk Benjamin Choi, Junyi Zhang, David McAllister)

Impressive work! Yuanhang Zhang

"I believe finding such a scalable off-policy RL algorithm is the most important missing piece in machine learning today." Very insightful blog on offline RL by Seohong Park 🫡 It's quite painful that offline RL only works for "reduced horizon" at this stage. Looking forward to