Christina Baek

@_christinabaek

PhD student @mldcmu | Past: intern @GoogleAI

ID: 1409604978004594688

http://kebaek.github.io

28-06-2021 20:11:47

53 Tweets

978 Followers

309 Following

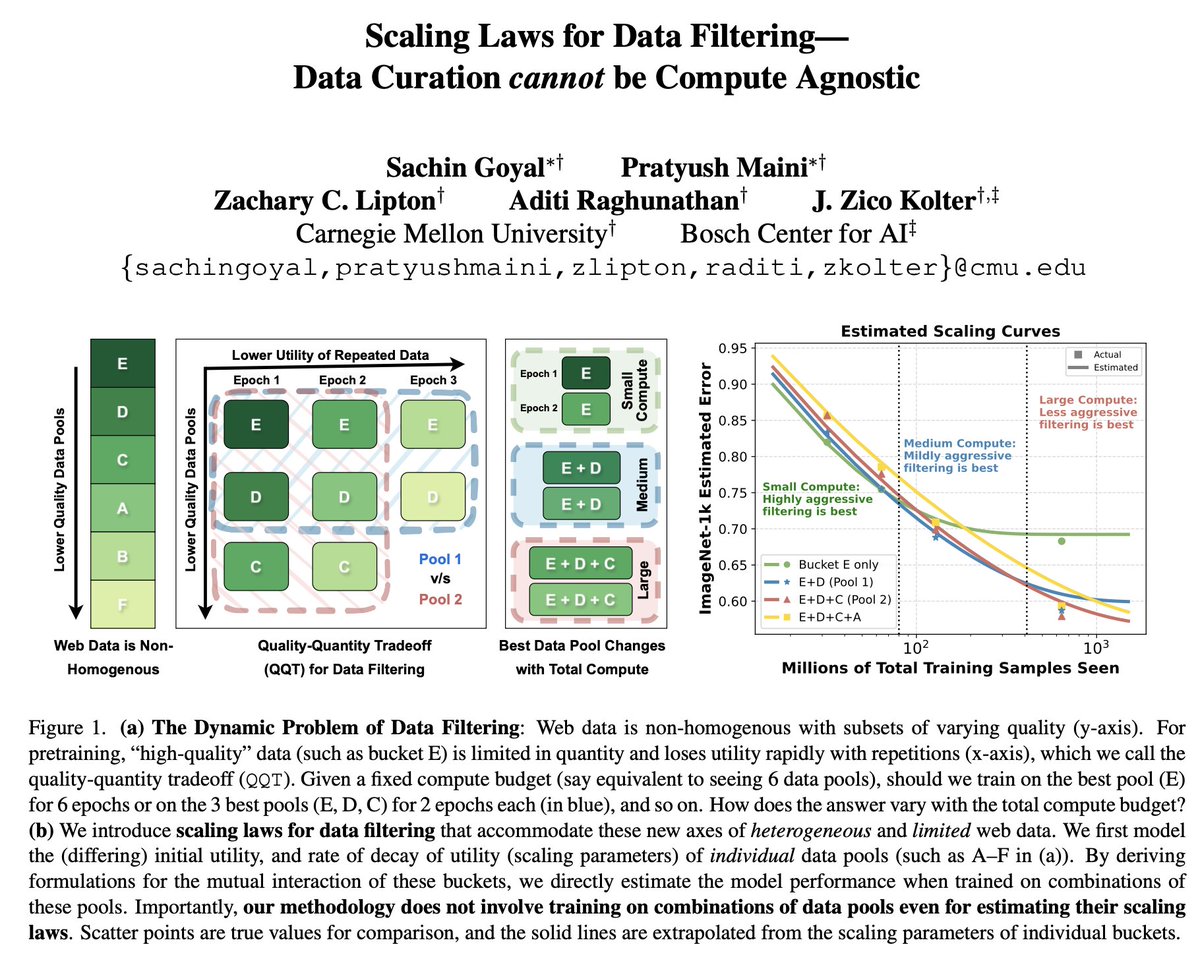

1/ 🥁Scaling Laws for Data Filtering 🥁 TLDR: Data curation *cannot* be compute-agnostic! In our #CVPR2024 paper, we develop the first scaling laws for heterogeneous & limited web data. w/ Sachin Goyal, Zachary Lipton, Aditi Raghunathan, Zico Kolter 📝: arxiv.org/abs/2404.07177

1/ What does it mean for an LLM to "memorize" a doc? Exactly regurgitating a NYT article? Of course. Just training on NYT? Harder to say. We take big strides in this discourse with *Adversarial Compression*, w/ Avi Schwarzschild, Zhili Feng, Zachary Lipton, Zico Kolter 🌐: locuslab.github.io/acr-memorizati…🧵