Mikhail Yurochkin

@yurochkin_m

Staff AI Scientist, Institute of Foundation Models @MBZUAI. Previously Research Manager @MITIBMLab @IBMResearch Stats PhD @UMich

ID: 1814152825082974208

https://moonfolk.github.io/

19-07-2024 04:18:46

27 Tweets

143 Followers

147 Following

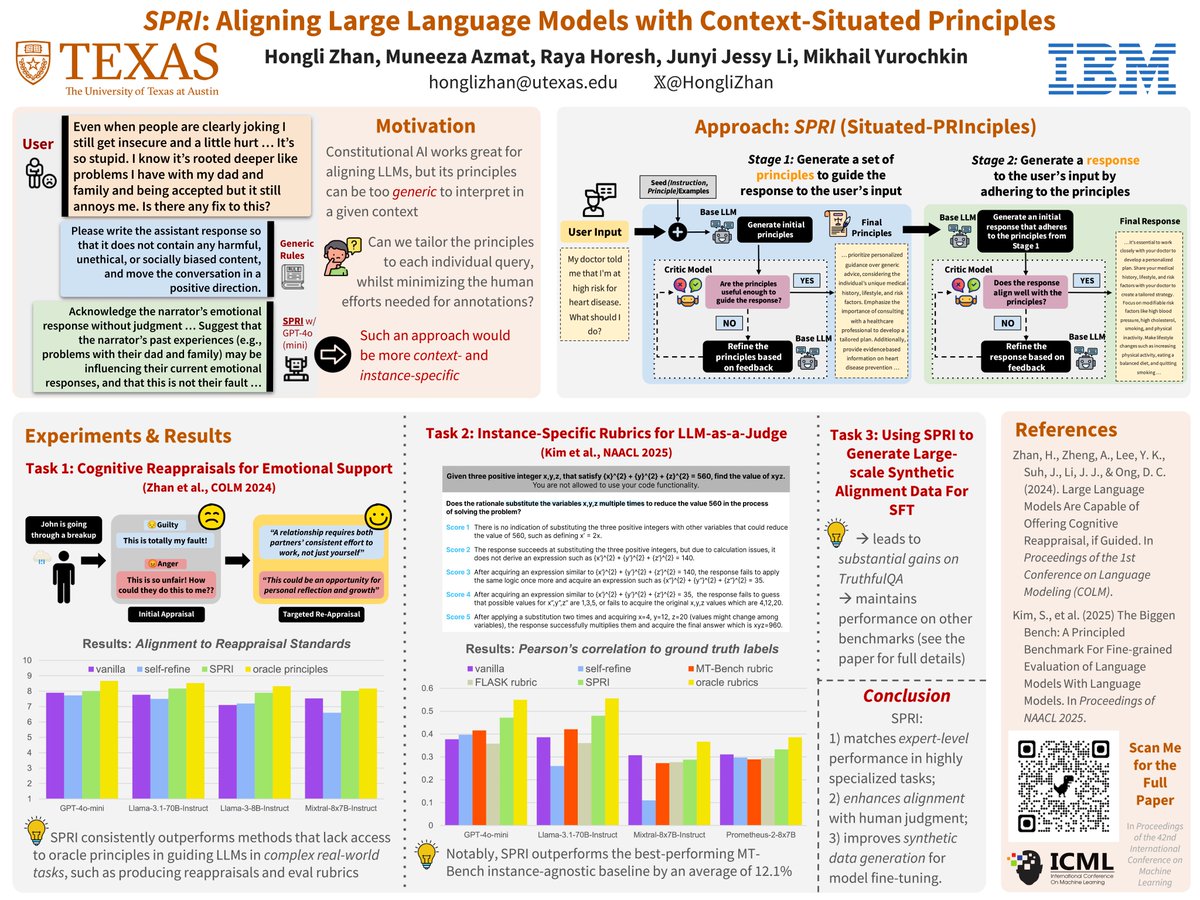

Great work by Hongli Zhan ✈️ ICML (on the job market)! It started during his summer internship at IBM as a way to improve synthetic data generation with principles/constitutions. Letting LLMs first "interpret" the principles in the context of each query improves quality, especially in domains that require subject-matter expertise.

Congrats Hongli Zhan ✈️ ICML and thanks for your hard work 🎉 Context-situated principles are a very promising approach for alignment and other applications 🔥

I'll be at #icml2025 ICML Conference to present SPRI next week! Come by our poster on Tuesday, July 15, 4:30pm, and let’s catch up on LLM alignment! 😃 🚀TL;DR: We introduce Situated-PRInciples (SPRI), a framework that automatically generates input-specific principles to align

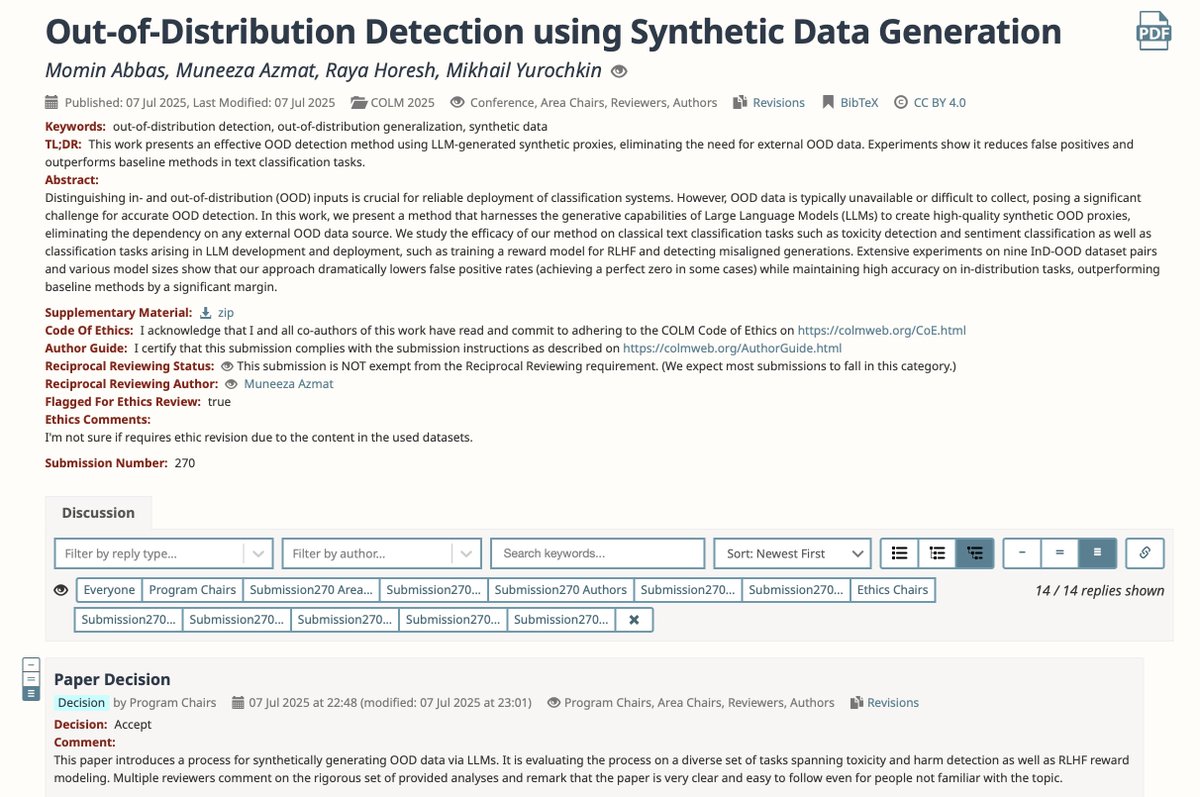

Very happy to share that our work "Out-of-Distribution Detection using Synthetic Data Generation" has been accepted at COLM 2025! 🎉 Grateful to have worked with an incredible team Muneeza Azmat, Rå¥å, Mikhail Yurochkin 👏 Conference on Language Modeling #COLM2025

I had a lot of fun working on this project 😃 Training 1000+ LoRAs for interesting experiments, digging into vLLM, and improving the algorithm to work at scale. Thanks Rickard Brüel Gabrielsson for your hard work and congrats 😎