Explainable AI

@xai_research

Moved to 🦋! Explainable/Interpretable AI researchers and enthusiasts: DM to join the XAI Slack! Twitter and Slack maintained by @NickKroeger1

28-03-2022 19:20:26

1.1K Tweets

2.2K Followers

783 Following

This Thursday (in 3 days), Yishay Mansour will discuss interpretable approximations: learning with interpretable models. Is this the same as regular learning? Attend the lecture to find out! 💻 Website: tverven.github.io/tiai-seminar/ Suraj Srinivas @ ICML Tim van Erven

Chirag Agarwal: Follow us for AI safety insights: x.com/intent/follow?… And watch the full video: youtu.be/nqZ6EiPltSo&li…

In case you missed it: here is the recording of Yishay Mansour's talk about the ability of decision trees to approximate concepts: youtu.be/uOwuho2er58 For upcoming talks, check out the seminar website: tverven.github.io/tiai-seminar/

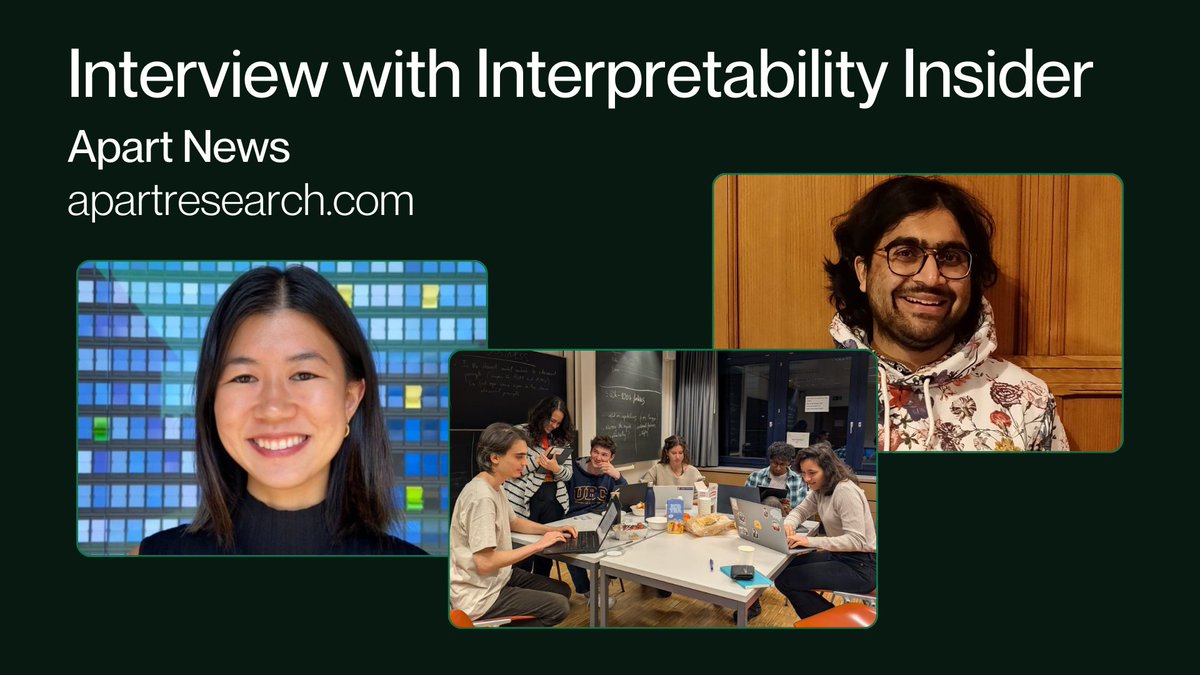

This week's Apart News brings you an *exclusive* interview with interpretability insider Myra Deng of Goodfire and revisits our Sparse Autoencoders Hackathon, which featured a memorable talk from Google DeepMind's Neel Nanda.

Hot off the press: my PhD thesis "Foundations of machine learning interpretability" is officially published! Enjoy it at theses.hal.science/tel-04917007 Explainable AI Trustworthy ML Initiative (TrustML)

Our Theory of Interpretable AI seminar (tverven.github.io/tiai-seminar/) will soon celebrate its one-year anniversary! 🥳 As we step into our second year, we'd love to hear from you! Which papers would you like to see discussed in our seminar in the future? 📚🔍 Tim van Erven Michal Moshkovitz