Trustworthy ML Initiative (TrustML)

@trustworthy_ml

Latest research in Trustworthy ML. Organizers: @JaydeepBorkar @sbmisi @hima_lakkaraju @sarahookr Sarah Tan @chhaviyadav_ @_cagarwal @m_lemanczyk @HaohanWang

ID:1262375165490540549

https://www.trustworthyml.org 18-05-2020 13:31:24

1.7K Tweets

6.0K Followers

64 Following

We're recruiting two Research Assistants to join us and work on the security of ML-based personal assistants at Imperial College London. The role will focus on verification, robustification and adversarial attacks for AI assistants. rb.gy/mcxvob.

We are excited to present a new event of our seminar series on ML Security!

We will host Giovanni Cherubin (@Microsoft) on March 26th, 2024 at 15:00 CET.

Free registration: us02web.zoom.us/j/82941308293?…

ELSA - European Lighthouse on Secure and Safe AI, Adversarial Machine Learning, Trustworthy ML Initiative (TrustML), AI Village @ DEF CON, RedTeamVillage

Just one month left before the SaTML Conference, April 9-11 in Toronto! I am excited to hear from Somesh Jha, Deb Raji, Yves-A. de Montjoye, and Sheila McIlraith, as well as the authors of accepted papers and the competition organizing teams!

There's still time to register! satml.org

Our first Uncommon Good post (with Nick Perello) discusses how to train AI systems that do not propagate discrimination, in compliance with legal provisions. It is based on our research published at ACM FAccT, the AI, Ethics, and Society Conference (AIES), and the ICML Conference. Stay tuned!

open.substack.com/pub/uncommongo…

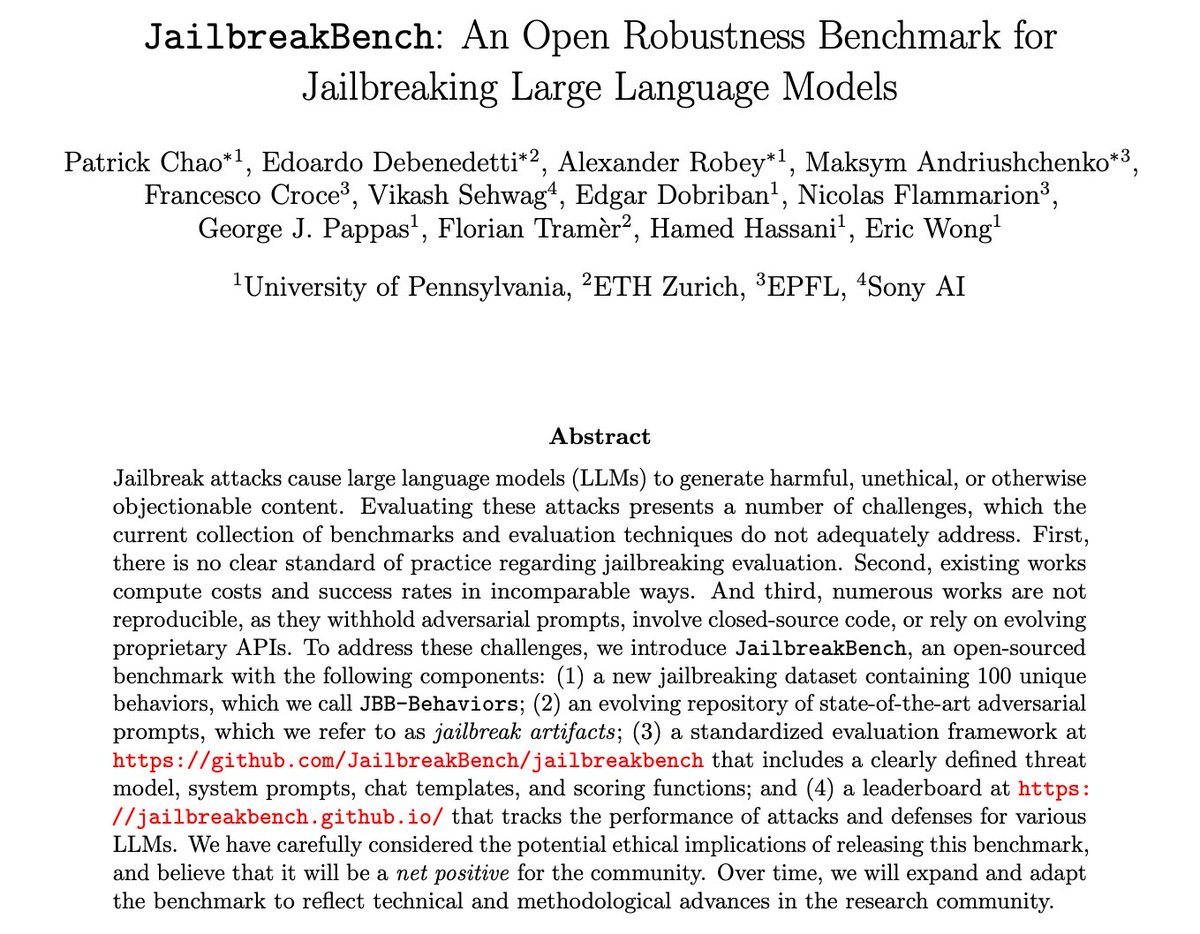

We are announcing the winners of our Trojan Detection Competition on Aligned LLMs!!

🥇 TML Lab (EPFL) (@fra__31, Maksym Andriushchenko 🇺🇦 and Nicolas Flammarion)

🥈 Krystof Mitka

🥉 nev

🧵 With some of the main findings!