Sadhika Malladi

@sadhikamalladi

CS PhD student at Princeton

ID: 1532167008074227713

https://www.cs.princeton.edu/~smalladi/index.html

02-06-2022 01:07:52

211 Tweets

1.1K Followers

199 Following

Thrilled that Abhishek Panigrahi is presenting our paper (joint with Bingbin Liu, Sadhika Malladi, Andrej Risteski) on the benefits of progressive distillation as an oral at #ICLR2025. Talk details below ⬇️ Also check out our blog post: unprovenalgos.github.io/progressive-di…

Announcing the 1st Workshop on Methods and Opportunities at Small Scale (MOSS) at ICML Conference 2025! 🔗 Website: sites.google.com/view/moss2025 📝 We welcome submissions! 📅 Paper & Jupyter notebook deadline: May 22, 2025 Topics: – Inductive biases & generalization – Training

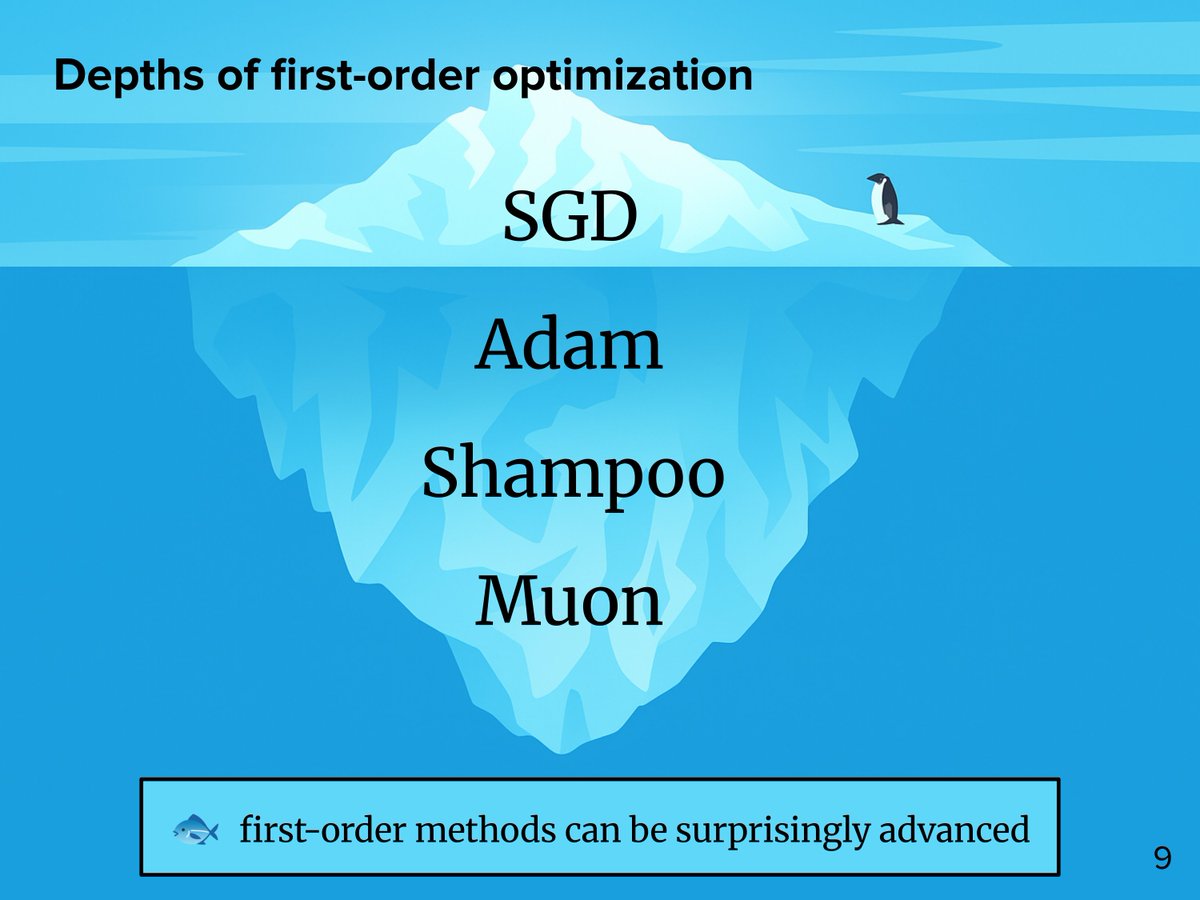

I was really grateful to have the chance to speak at Cohere Labs and ML Collective last week. My goal was to make the most helpful talk that I could have seen as a first-year grad student interested in neural network optimization. Sharing some info about the talk here... (1/6)

Data selection and curriculum learning can be formally viewed as a compression protocol via prequential coding. New blog (with Allan Zhou) about this neat idea that motivated ADO but didn’t make it into the paper. yidingjiang.github.io/blog/post/curr…

Adam is similar to many algorithms, but cannot be effectively replaced by any simpler variant in LMs. The community is starting to get the recipe right, but what is the secret sauce? Robert M. Gower 🇺🇦 and I found that it has to do with the beta parameters and variational inference.