Souradip Chakraborty

@souradipchakr18

Student Researcher @Google || PhD @umdcs @ml_umd, working on #LLM #Alignment #RLHF #Reasoning

Prev : #JPMC #Walmart Labs, MS #IndianStatisticalInstitute

ID: 1135038999297314817

https://souradip-umd.github.io/ 02-06-2019 04:22:40

1.1K Tweets

1.1K Followers

4.4K Following

Ravid Shwartz Ziv Andrew Gordon Wilson Micah Goldblum Amrit Singh Bedi Furong Huang Philippe Beaudoin Sharon Li 🇺🇦 Dzmitry Bahdanau Dan Roy Lenka Zdeborova Alessandro Sordoni Pablo Samuel Castro David Duvenaud Roger Grosse Percy Liang Leo Dianbo Liu clem 🤗 Zhijing Jin James Zou David Krueger Tanishq Mathew Abraham, Ph.D.

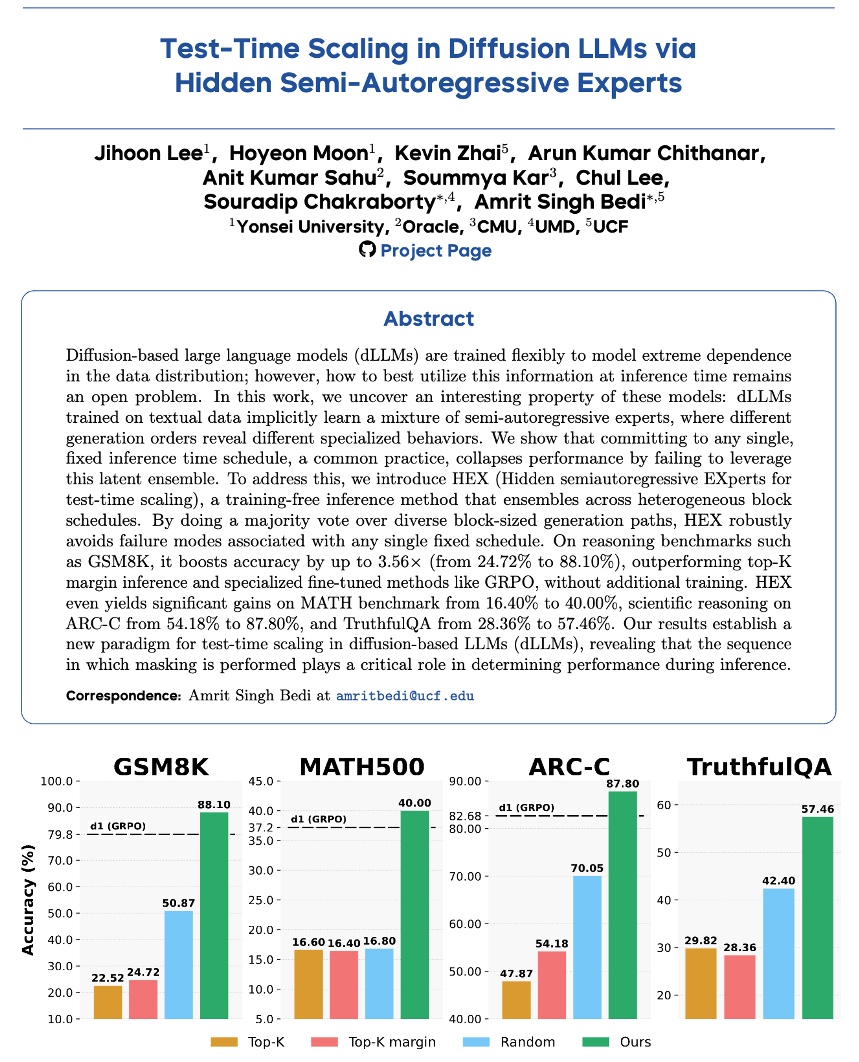

Dimitris Papailiopoulos This is true. But we show a principled method in our #ICLR2025 paper via Controlled Decoding. Paper: arxiv.org/abs/2503.21720 x.com/SOURADIPCHAKR1… cc: Amrit Singh Bedi Furong Huang

🚨 #Neurips2025 Workshop Alert: Biosecurity Safeguards for #GenerativeAI Link: biosafe-gen-ai.github.io Deadline extended: August 29, 2025 AoE Yoshua Bengio Peter Henderson Jian Ma Russ Altman Theofanis Karaletsos Megan Blewett george church CL • Le Cong Mengdi Wang Amrit Singh Bedi Zaixi Zhang

Souradip Chakraborty Tagging a few folks who might be interested in this topic. Looking forward to any thoughts, questions, or feedback! Aditya Grover Jaeyeon (Jay) Kim Subham Sahoo Simo Ryu Jiaxin Shi Sasha Rush Peter Holderrieth Alex Tong Quanquan Gu Molei Tao Devaansh Gupta Siyan Zhao

Such an inspiring journey of Furong Huang 🎉

Andrej Karpathy I think it would be good to distinguish RL as a problem from the algorithms that people use to address RL problems. This would allow us to discuss if the problem is with the algorithms, or if the problem is with posing a problem as an RL problem. 1/x

Andrej Karpathy It seems to me that not only you, but too many people talk about RL as if these two things were the same, which prevents a more nuanced discussion. 2/2

Zachary Horvitz Cool work! Indeed, inference-time sampling for MDLMs has a lot to offer. Our related reference :) x.com/amritsinghbedi…