Pillar Security

@pillar_sec

Pillar enables teams to rapidly adopt AI with minimal risk by providing a unified AI security layer across the organization.

ID: 1828408717491998722

https://www.pillar.security/ 27-08-2024 12:26:44

21 Tweet

44 Followers

11 Following

We’re thrilled to announce that Pillar Security has been selected for the Amazon Web Services x CrowdStrike Cybersecurity Accelerator, in collaboration with NVIDIA ! This incredible opportunity enables us to showcase our technology to industry leaders while learning from some of the

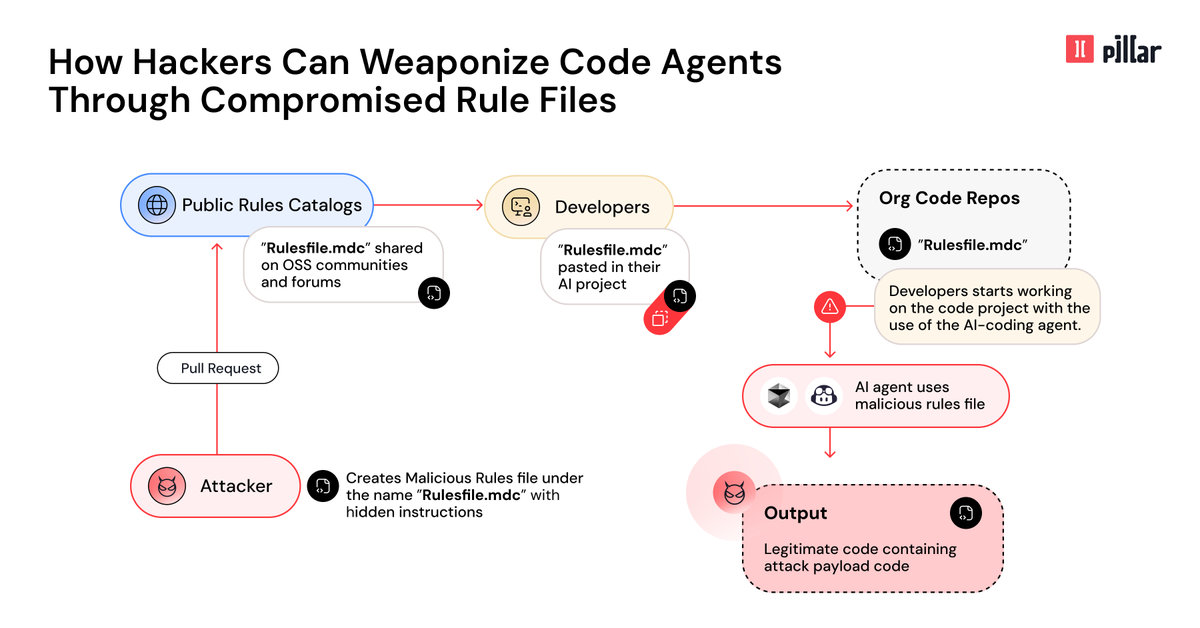

⚠️The rise of "Vibe Coding" together with developers' inherent "automation bias" creates the perfect attack surface. 🛑New Rules File Backdoor attack, discovered by Pillar Security, lets hackers poison AI-powered tools like GitHub Copilot & Cursor, injecting hidden malicious code

Last week I dropped a blog on security tools for the AI Engineer If AI Engineer is in your title checkout - Invariant Labs to scan MCP servers for malicious tools - Pensar ⌘ for agentic SAST style vulns - Pillar Security to scan cursor rules files for hidden characters

Pillar Security (Pillar Security) raises $9M to secure #AI software, tackling risks traditional tools miss. Its platform redefines cybersecurity for the Intelligence Age. hackernoon.com/cyber-startup-…

Pillar's Guardrails are now live on LiteLLM (YC W23)! You can now integrate Pillar's Guardrails into your LiteLLMm proxy and ensure safety and compliance for your AI agentic workflows. What we cover: ✅ Prompt Injection Protection: Block malicious manipulations before they reach your