Nived Rajaraman

@nived_rajaraman

EECS PhD student at Berkeley. Former intern at DeepMind. Reinforcement learning. I organize the BLISS seminar: bliss.eecs.berkeley.edu/Seminar/index.…

ID: 2612375550

https://nivedr.github.io/ 08-07-2014 21:38:16

9 Tweets

77 Followers

111 Following

Our first Rising Stars session featured fantastic talks by Tianlong Chen, Congyue Deng, Nived Rajaraman, Yihua Zhang, and Grigorios Chrysos.

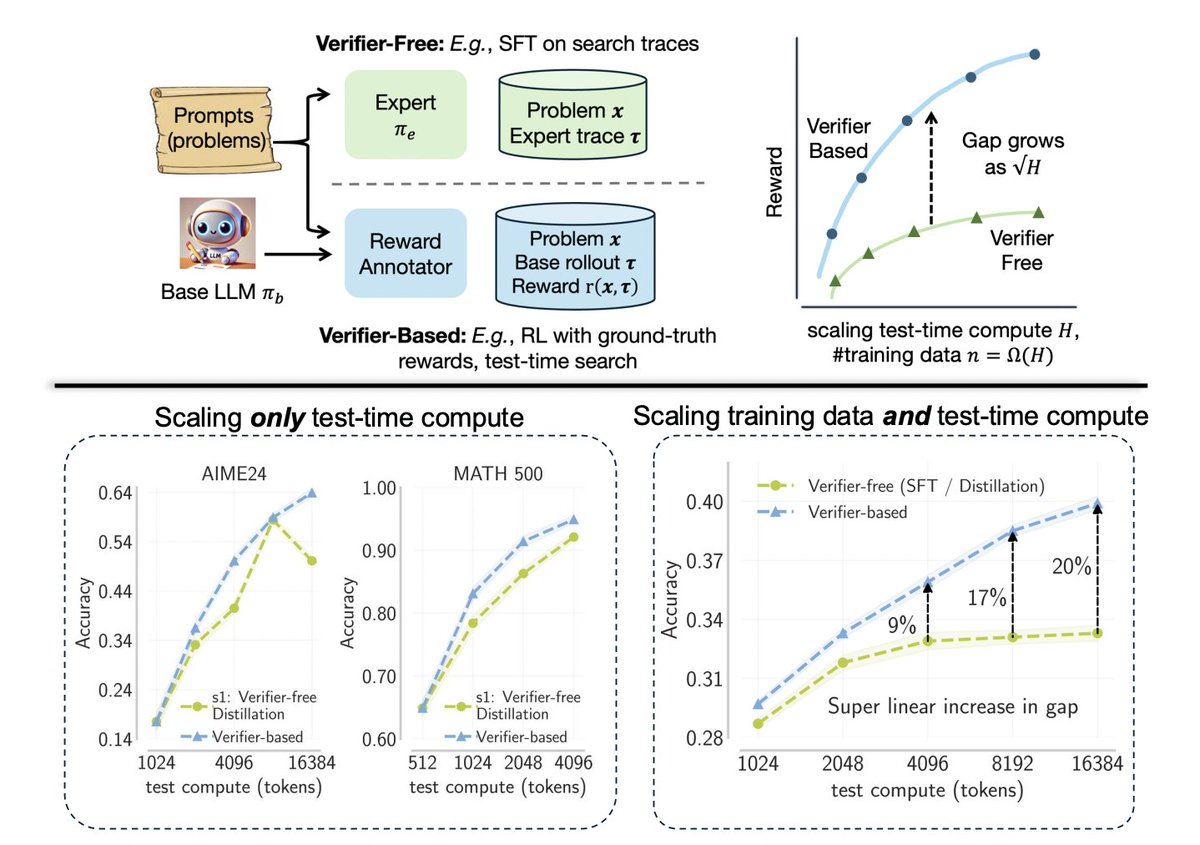

At the ES-FoMo workshop, talk to Rishabh Tiwari about scaling test-time compute as a function of user-facing latency (instead of FLOPs).

Excited to announce our NeurIPS ’25 tutorial: Foundations of Imitation Learning: From Language Modeling to Continuous Control, with Adam Block & Max Simchowitz.