Naman Goyal

@namangoyal21

Research @thinkymachines, previously pretraining LLAMA at GenAI Meta

ID: 941156280

11-11-2012 12:10:24

197 Tweets

1.1K Followers

591 Following

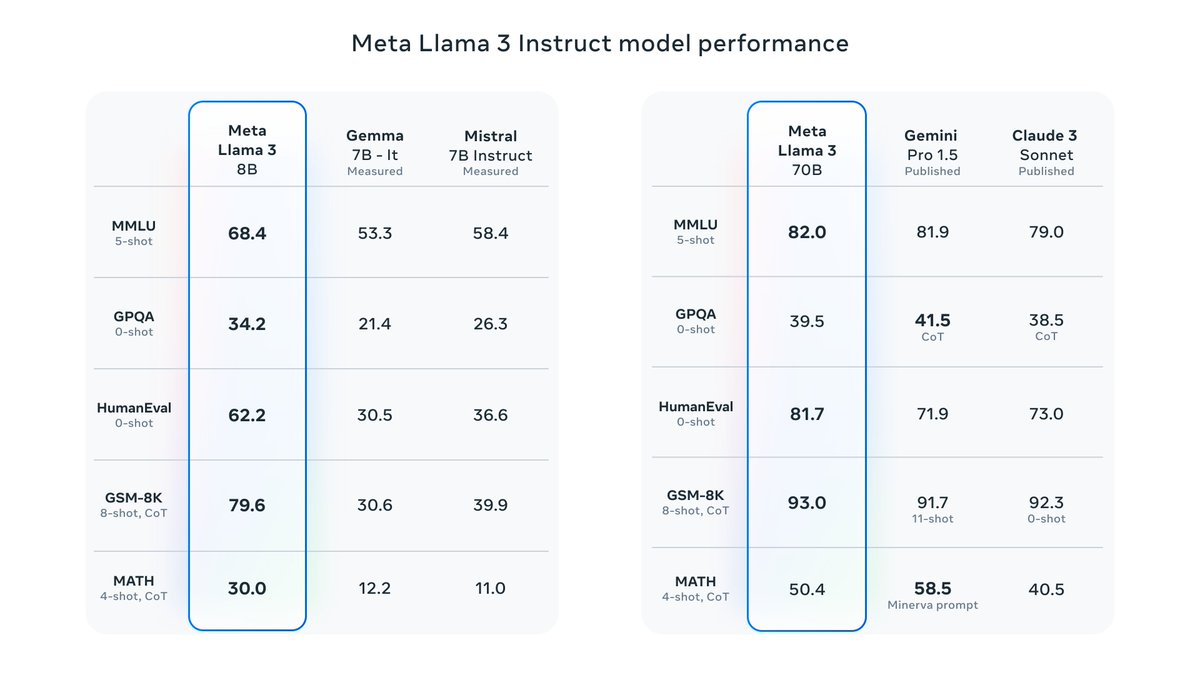

Llama 3 is out in 8B and 70B sizes! (400B still training) Congrats to the AI at Meta team! ai.meta.com/blog/meta-llam…