Aran Komatsuzaki

@arankomatsuzaki

@TeraflopAI

ID:794433401591693312

https://arankomatsuzaki.wordpress.com/about-me/

04-11-2016 06:57:37

4.9K Tweets

95.7K Followers

78 Following


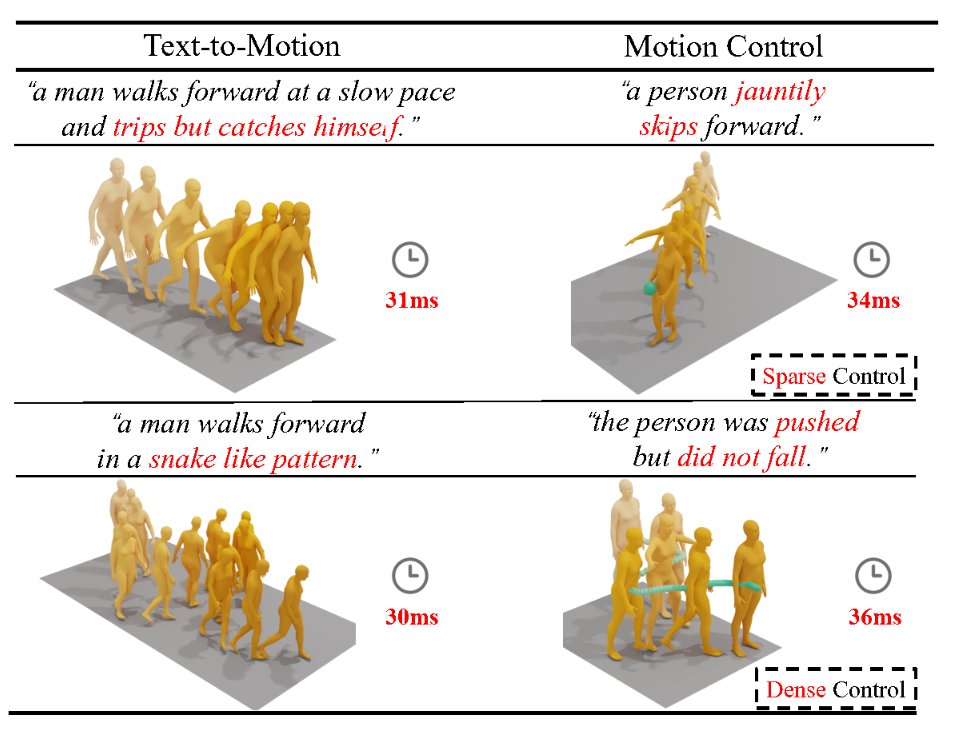

New research: Ultra Inertial Poser: Scalable full-body tracking in the wild using sparse sensing

#SIGGRAPH2024

No cameras, just 6 wearables (IMU + UWB) that our graph model uses to estimate poses.

We are the first to process raw IMU signals, and we need no proprietary sensors for 3D orientation.

✨New Paper Alert✨

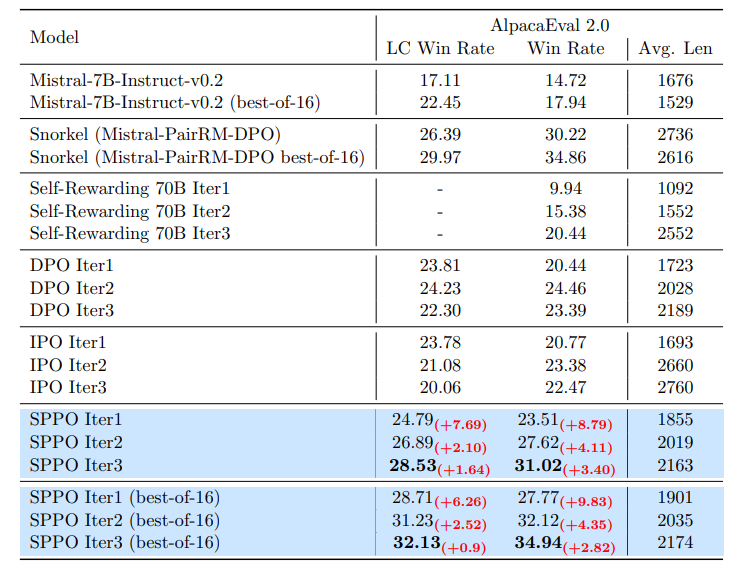

Excited to introduce ExPO, an extremely simple method to boost LLMs' alignment with human preferences via weak-to-strong model extrapolation.

👇

#LLMs #MachineLearning #NLProc #ArtificialIntelligence #AI

Aran Komatsuzaki: Thanks for sharing our work! In case anyone's interested in digging deeper, here's my tweet: twitter.com/ZimingLiu11/st…

Awesome to see Joseph Spisak (AI Product Director, Meta) mention our previous research, YaRN, on stage at the Weights & Biases Fully Connected conference. We have another very exciting long-context release coming soon.