Flavio Calmon

@flaviocalmon

Associate Professor @hseas. Information theorist, but only asymptotically. Brasileiro/American.

ID: 52728555

http://people.seas.harvard.edu/~flavio/

01-07-2009 13:44:53

54 Tweets

369 Followers

97 Following

Back home from FAccT. I am thankful for the work our community is doing & the values it stands for. Serving it has been a labor of love for me & I am beyond grateful to have done so this year alongside my truly wonderful program co-chairs & human beings Michael Veale, Reuben Binns, and Flavio Calmon.

Part 2 of my 2024 publication tweets! Please welcome Multi-group Proportional Representation, a novel metric for measuring representation in image generation and retrieval. This work was recently accepted at NeurIPS 2024. (1/n)

Finally, I am pleased to announce 🪢Interpreting CLIP with Sparse Linear Concept Embeddings (SpLiCE)🪢, joint work with Usha Bhalla, Suraj Srinivas, Flavio Calmon, and Hima Lakkaraju, which was just accepted to NeurIPS 2024! Check out the paper here: arxiv.org/abs/2402.10376

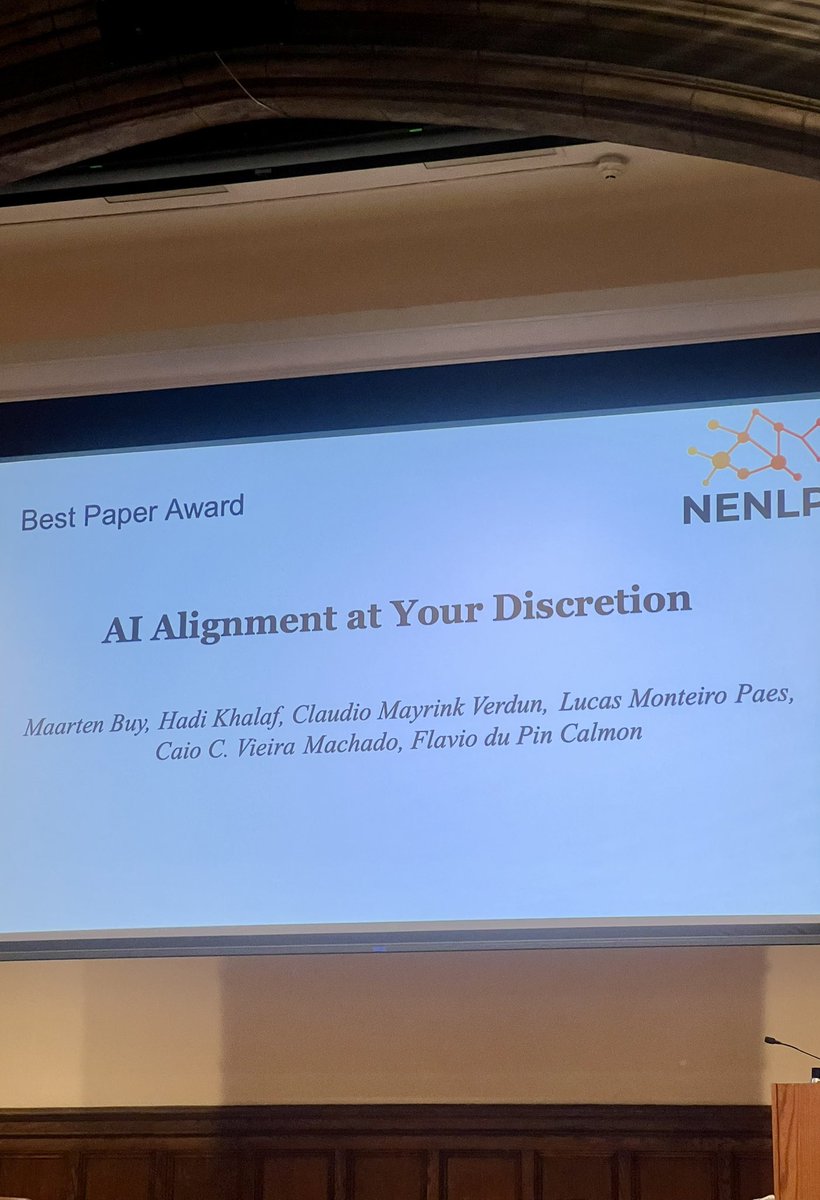

AI is built to “be helpful” or “avoid harm”, but which principles should it prioritize, and when? We call this alignment discretion. As Asimov's stories show, balancing principles for AI behavior is tricky. In fact, we find that AI has its own set of priorities (comic: Randall Munroe)👇

9/n Full paper here: 🔗 arxiv.org/abs/2502.10441. Huge thanks to my amazing team of co-authors: Maarten Buyl, Hadi Khalaf, Claudio Verdun, Caio C. V. Machado, and Flavio Calmon. Work done at Harvard SEAS!

‼️🕚New paper alert with Usha Bhalla: Leveraging the Sequential Nature of Language for Interpretability (openreview.net/pdf?id=hgPf1ki…)! 1/n

![Hao Wang (@hw_haowang) on Twitter photo [1/x] 🚀 We're excited to share our latest work on improving inference-time efficiency for LLMs through KV cache quantization, a key step toward making long-context reasoning more scalable and memory-efficient.](https://pbs.twimg.com/media/Gnx9W5FWIAA4vbt.jpg)