Oishi Deb

@deboishi

DPhil Candidate @Oxford_VGG & @OxfordTVG in @Oxengsci, @CompSciOxford & @KelloggOx, RG Chair at @ELLISforEurope

@GoogleDeepMind Scholar, Ex @RollsRoyceUK

ID: 1922373703

https://bit.ly/48glsrT 01-10-2013 05:22:30

429 Tweets

600 Followers

680 Following

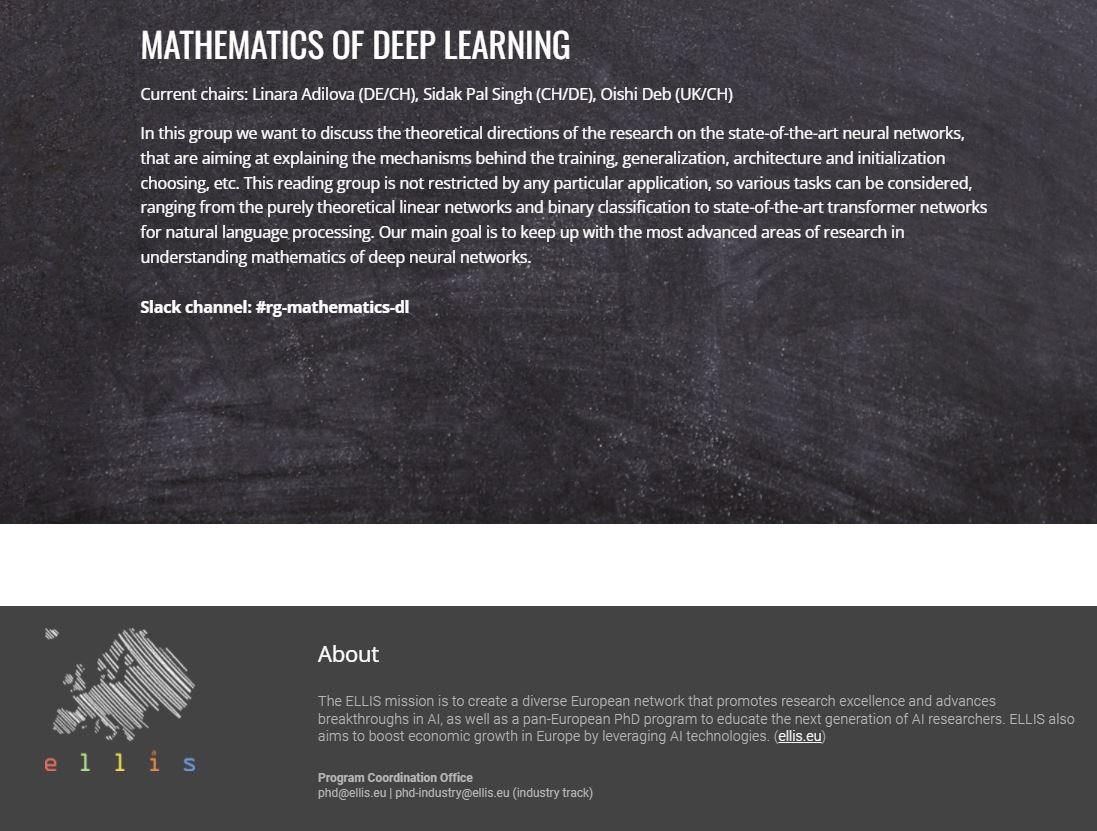

I am delighted to chair an ELLIS Reading Group on the Mathematics of Deep Learning along with Linara and Sidak Pal Singh. You can join the group here: bit.ly/3fjh8la. Looking forward to meeting new people! Oxford Comp Sci, Engineering Science, Oxford

What if LLMs knew when to stop? 🚧 HALT finetuning teaches LLMs to generate only content they're confident is correct. 🔍 Insight: post-training must be adjusted to the model's capabilities. ⚖️ Tunable trade-off: higher correctness 🔒 vs. more completeness 📝 (with AI at Meta) 🧵

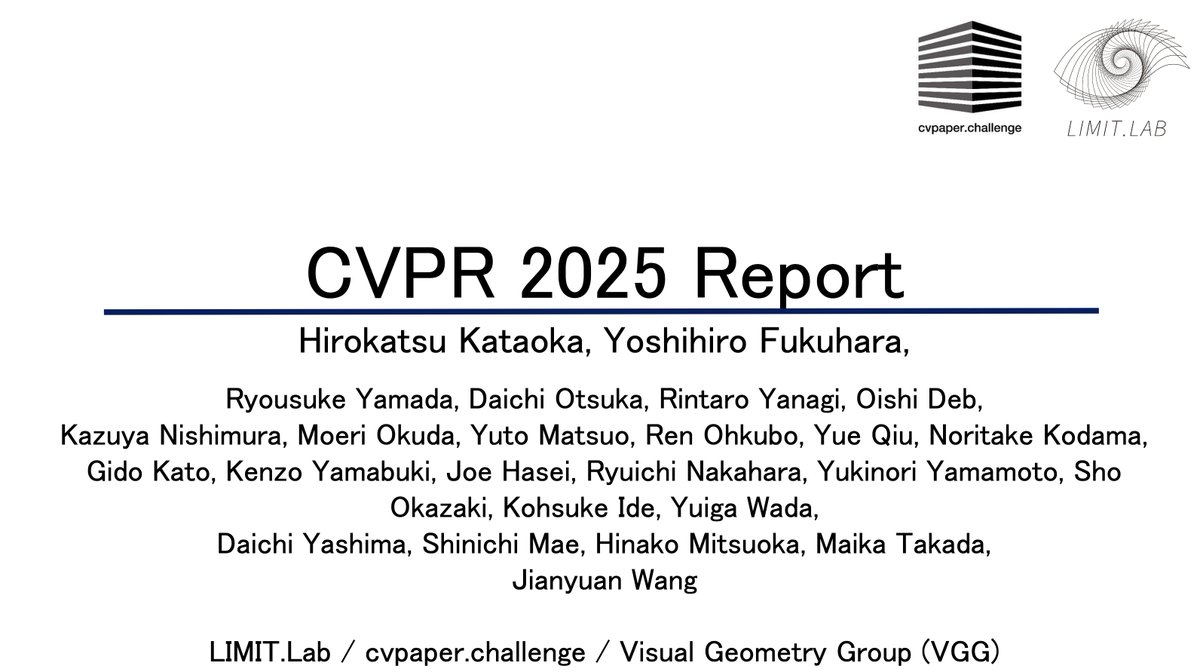

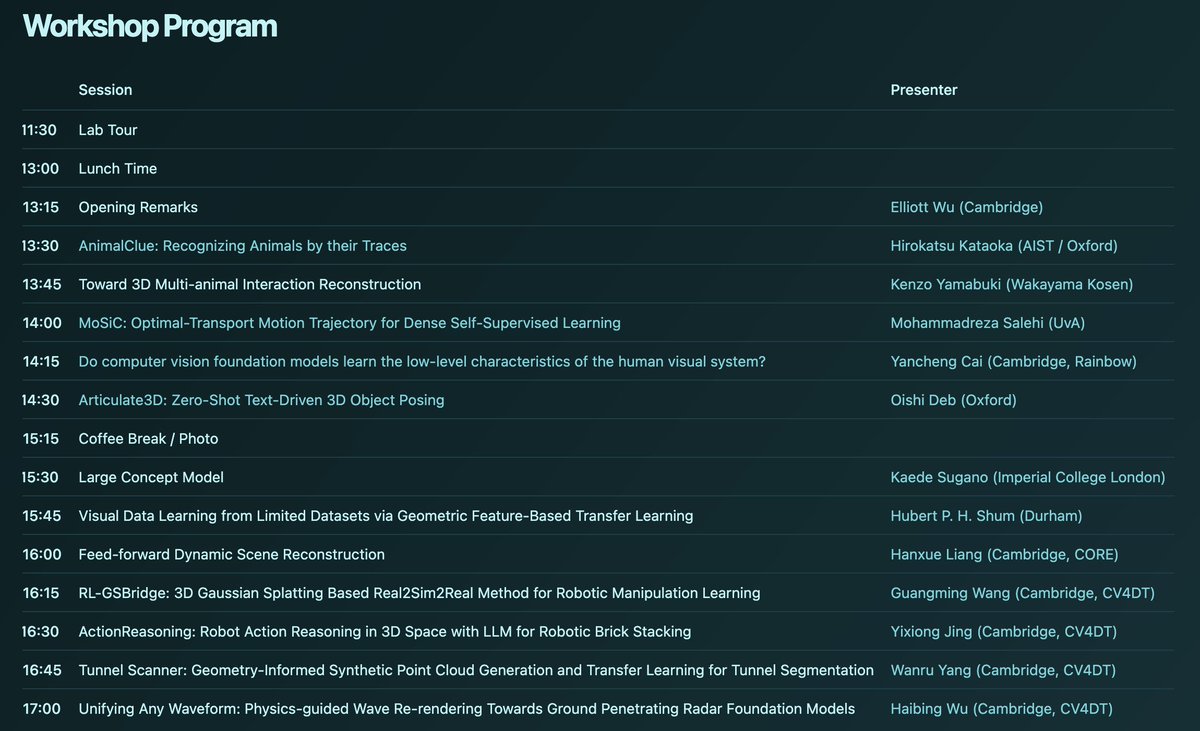

Thank you so much to everyone in the #CVPR25 / #CVPR2025, LIMIT.Lab, VGG (Visual Geometry Group), and cvpaper.challenge (AI/CV research community) research communities for your support in organizing the event and preparing the report.

Meet Oishi Deb, ELLIS PhD Student 🎓 at University of Oxford 🏴 & Google DeepMind. She works on computer vision, generative & responsible AI, and chairs ELLIS PhD Reading Groups on CV & deep learning theory. Career high: she won a £25K grant from Sky to advance ML & AI! 👏 #WomenInELLIS

NEW: We’re honoured to be ranked number one in the Times Higher Education World University Rankings for the tenth consecutive year. 👏