Bingbin Liu

@bingbinl

PhD student at CMU machine learning department.

ID: 1039263012123758595

https://clarabing.github.io/

10-09-2018 21:23:05

102 Tweets

841 Followers

246 Following

The application is now open for our #KempnerInstitute Research #Fellowship! Postdocs studying the foundations of #intelligence or applications of #AI are encouraged to apply. Learn more and apply by Oct. 1: bit.ly/4djPSf8 #LLMs #NeuroAI #ML Sham Kakade Bernardo Sabatini

CFP & join us at the M3L Workshop at #NeurIPS2024! We look forward to learning about your insights & findings on the theoretical and scientific understanding of ML phenomena💡

Chatbots are often augmented w/ new facts via context from the user or a retriever. Models must adapt to this context instead of hallucinating outdated facts. In this work w/ Sachin Goyal, Zico Kolter, and Aditi Raghunathan, we show that instruction tuning fails to reliably improve this behavior! [1/n]

Our work has been selected as an Oral at ICLR 25! We find theoretical and empirical explanations for the benefits of progressive distillation. Amazing work led by Abhishek Panigrahi and Bingbin Liu, done in collaboration with Andrej Risteski and Surbhi Goel :)

1⃣ Distillation (Oral; led by Abhishek Panigrahi and Bingbin Liu): theory + exps on when and how progressive distillation (training w/ a few intermediate teachers) benefits the student. Progressive distillation induces a provably beneficial implicit curriculum. arxiv.org/abs/2410.05464

If you're at #ICLR2025, come to our oral talk & poster on progressive distillation presented by the amazing Abhishek Panigrahi! ✨🌴 Joint work with (the equally amazing) Sadhika Malladi, Andrej Risteski, and Surbhi Goel. More details on our blog: unprovenalgos.github.io/progressive-di…

New in the Deeper Learning blog: Kempner researchers show how VLMs speak the same semantic language across images and text. bit.ly/KempnerVLM by Isabel Papadimitriou, Chloe H. Su, Thomas Fel, Stephanie Gil, and Sham Kakade #AI #ML #VLMs #SAEs

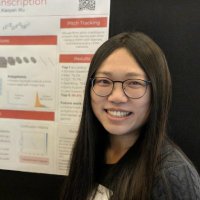

Misha Khodak, Tanya Marwah, along with myself, Nicholas Boffi and Jianfeng Lu are organizing a COLT 2025 workshop on the Theory of AI for Scientific Computing, to be held on the first day of the conference (June 30).

[1/n] New work [JSKZ25] w/ Jikai Jin, Vasilis Syrgkanis, Sham Kakade. We introduce new formulations and tools for evaluating language model capabilities, which help explain recent observations of post-training behaviors of Qwen-series models — there is a sensitive causal link

![Hanlin Zhang (@_hanlin_zhang_) on Twitter photo](https://pbs.twimg.com/media/GtviUVkWAAAs6zM.png)